Andyʼs working notes

About these notesVision Pro

Notes on Apple’s 2023-06-05 reveal announcement

The hardware seems faintly unbelievable—a computer as powerful as Apple’s current mid-tier laptops (M2), plus a dizzying sensor/camera array with dedicated co-processor, plus displays with 23M 6µm pixels (my phone: 3M 55µm pixels; the PSVR2 is 32µm) and associated optics, all in roughly a mobile phone envelope.

But that kind of vertical integration is classic Apple. I’m mainly interested in the user interface and the computing paradigm. What does Apple imagine we’ll be doing with these devices, and how will we do it?

Paradigm

Given how ambitious the hardware package is, the software paradigm is surprisingly conservative. visionOS is organized around “apps”, which are conceptually defined just like apps on iOS:

- to perform an action, you launch an app which affords that activity; no attempt is made to move towards finer-grained “activity-oriented computing”

- apps present interface content, which is defined on a per-app basis; app interfaces cannot meaningfully interact, with narrow carve-outs for channels like drag-and-drop

- (inferred) apps act as containers for files and documents; movement between those containers is constrained

I was surprised to see that the interface paradigm is classic WIMP. At a high level, the pitch is not that this is a new kind of dynamic medium, but rather that Vision Pro gives you a way to use (roughly) 2D iPad app UIs on a very large, spatial display. Those apps are organized around familiar UIKit controls and layouts. We see navigation controllers, split views, buttons, text fields, scroll views, etc, all arranged on a 2D surface (modulo some 3D lighting and eye tracking effects). Windows, icons, menus, and even a pointer (more on that later).

These 2D surfaces are in turn arranged in a “Shared Space”, which is roughly the new window manager. My impression is that the shared space is arranged cylindrically around the user (moving with them?), with per-window depth controls, but I’m not yet sure of that. An app can also transition into “Full Space”, which is roughly like “full screening” an app on today’s OSes.

In either mode, an app can create a “volume” instead of a “window”. We don’t see much of this yet: the Breathe app spreads into the room; panoramas and 3D photography is displayed spatially; a CAD app displays a model in space; an educational app displays a 3D heart. visionOS’s native interface primitives don’t make use of a volumetric paradigm, so anything we see here will be app/domain-specific (for now).

Input

For me, the most interesting part of visionOS is the input part of the interaction model. The core operation is still pointing. On NLS and its descendants, you point by indirect manipulation: moving a cursor by translating a mouse or swiping a trackpad, and clicking. On the iPhone and its descendants, you point by pointing. Direct manipulation became much more direct, though less precise; and we lost “hover” interactions. On Vision Pro and its descendants, you point by looking, then “clicking” your bare fingers, held in your lap.

Sure, I’ve seen this in plenty of academic papers, but it’s quite wild to see it so central to a production device. There are other VR/AR devices which feature eye tracking, but (AFAIK) all still ship handheld controllers or support gestural pointing. Apple’s all in on foveation as the core of their input paradigm, and it allows them to produce a controller-free default experience. It reminds me of Steve’s jab at styluses at the announcement of the iPhone.

My experiences with hand tracking-based VR interfaces have been uniformly unpleasant. Without tactile feedback, the experience feels mushy and unreliable. And it’s uncomfortable after tens of seconds (see also Bret’s comments). The visionOS interaction model dramatically shifts the role of the hands. They’re for basically-discrete gestures now: actuate, flick. Hands no longer position the pointer; eyes do. Hands are the buttons and scroll wheel on the mouse. Based on my experiences with hand-tracking systems, this is a much more plausible vision for the use of hands, at least until we get great haptic gloves or similar.

But it does put an enormous amount of pressure on the eye tracking. As far as I can tell so far, the role of precise 2D control has been shifted to the eyes. The thing which really sold the iPhone as an interface concept was Bas’s and Imran’s ultra-direct, ultra-precise 2D scrolling with inertia. How will scrolling feel with such indirect interaction? More importantly, how will fine control feel—sliders, scrubbers, cursor positioning? One answer is that such designs may rely on “direct touch”, akin to existing VR systems’ hand tracking interactions. Apple suggests that “up close inspection or object manipulation” should be done with this paradigm. Maybe the experience will be better than on other VR headsets I’ve tried because sensor fusion with the eye tracker can produce more accuracy?

By relegating hands to a discrete role in the common case, Apple reinforces the 2D conception of the visionOS interface paradigm. You point with your eyes and “click” with your hands. One nice benefit of this change is that we recover a natural “hover” interaction. But moving incrementally from here to a more ambitious “native 3D” interface paradigm seems like it would be quite difficult.

For text, Apple imagines that people will use speech for quick input and a Bluetooth keyboard for long input sessions. They’ll also offer a virtual keyboard you can type on with your fingertips. My experience with this kind of virtual keyboard has been uniformly bad—because you don’t have feedback, you have to look at the keyboard while you type; accuracy feels effortful; it’s quickly tiring. I’d be surprised (but very interested) if Apple has solved these problems.

Strategy

Note how different Apple’s strategy is from the vision in Meta’s and MagicLeap’s pitches. These companies point towards radically different visions of computing, in which interfaces are primarily three-dimensional and intrinsically spatial. Operations have places; the desired paradigm is more object-oriented (“things” in the “meta-verse”) than app-oriented. Likewise, there are decades of UIST/etc papers/demos showing more radical “spatial-native” UI paradigms. All this is very interesting, and there’s lots of reason to find it compelling, but of course it doesn’t exist, and a present-day Quest / HoloLens buyer can’t cash in that vision in any particularly meaningful way. Those buyers will mostly run single-app, “full-screen” experiences; mostly games.

But, per Apple’s marketing, this isn’t a virtual reality device, or an augmented reality device, or a mixed reality device. It’s a “spatial computing” device. What is spatial computing for? Apple’s answer, right now, seems to be that it’s primarily for giving you lots of space. This is a practical device you can use today to do all the things you already do on your iPad, but better in some ways, because you won’t be confined to “a tiny black rectangle”. You’ll use all the apps you already use. You don’t have to wait for developers to adapt them. This is not a someday-maybe tech demo of a future paradigm; it’s (mostly) today’s paradigm, transliterated to new display and input technology. Apple is not (yet) trying to lead the way by demonstrating visionary “killer apps” native to the spatial interface paradigm. But, unlike Meta, they’ll build their device with ultra high-resolution displays and suffer the premium costs, so that you can do mundane-but-central tasks like reading your email and browsing the web comfortably.

On its surface, the iPhone didn’t have totally new killer apps when it launched. It had a mail client, a music player, a web browser, YouTube, etc. The multitouch paradigm didn’t substantively transform what you could do with those apps; it was important because it made those apps possible on the tiny display. The first iPhone was important not because the functionality was novel but because it allowed those familiar tools to be used anywhere. My instinct is that the same story doesn’t quite apply to the Vision Pro, but being generous for a moment, I might suggest its analogous contribution is to allow desktop-class computing in any workspace: on the couch, at the dining table, etc. “The office” as an important, specially-configured space, with “computer desk” and multiple displays, is (ideally) obviated in the same way that the iPhone obviated quick, transactional PC use.

Relatively quickly, the iPhone did acquire many functions which were “native” to that paradigm. A canonical example is the 2008 GPS-powered map, complete with local business data, directions, and live transit information. You could build such a thing on a laptop, but the amazing power of the iPhone map is that I can fly to Tokyo with no plans and have a great time, no stress. Rich chat apps existed on the PC, but the phenomenon of the “group chat” really depended on the ubiquity of the mobile OS paradigm, particularly in conjunction with its integrated camera. Mobile payments. And so on. The story is weaker for the iPad, but Procreate and its analogues are compelling and unique to that form factor. I expect Vision Pro will evolve singular apps, too; I’ll discuss a few of interest to me later in this note. Will its story be more like the iPhone, or more like the iPad and Watch?

It’s worth noting that this developer platform strategy is basically an elaboration of the Catalyst strategy they began a few years ago: develop one app; run it on iOS and macOS. With the Apple Silicon computers, the developer’s participation is not even required: iPad apps can be run directly on macOS. Or, with SwiftUI, you can at least use the same primitives and perhaps much of the same code to make something specialized to each platform. visionOS is running with the same idea, and it seems like a powerful strategy to bootstrap a new platform. The trouble here has been that Catalyst apps (and SwiftUI apps, though somewhat less so) are unpleasant to use on the Mac. This is partially because those frameworks are still glitchy and unfinished, but partially because an application architecture designed for a touch paradigm can’t be trivially transplanted to the information/action-dense Mac interface. Apple makes lots of noises in their documentation about rethinking interfaces for the Mac, but in practice, the result is usually an uncanny iOS app on a Mac display. Will visionOS have the same problem with this strategy? It benefits, at least, from not having decades of “native” apps to compare against.

Dreams

If I find the Vision Pro’s launch software suite conceptually conservative, what might I like to see? What sorts of interactions seem native to this paradigm, or could more ambitiously fulfill its unique promise?

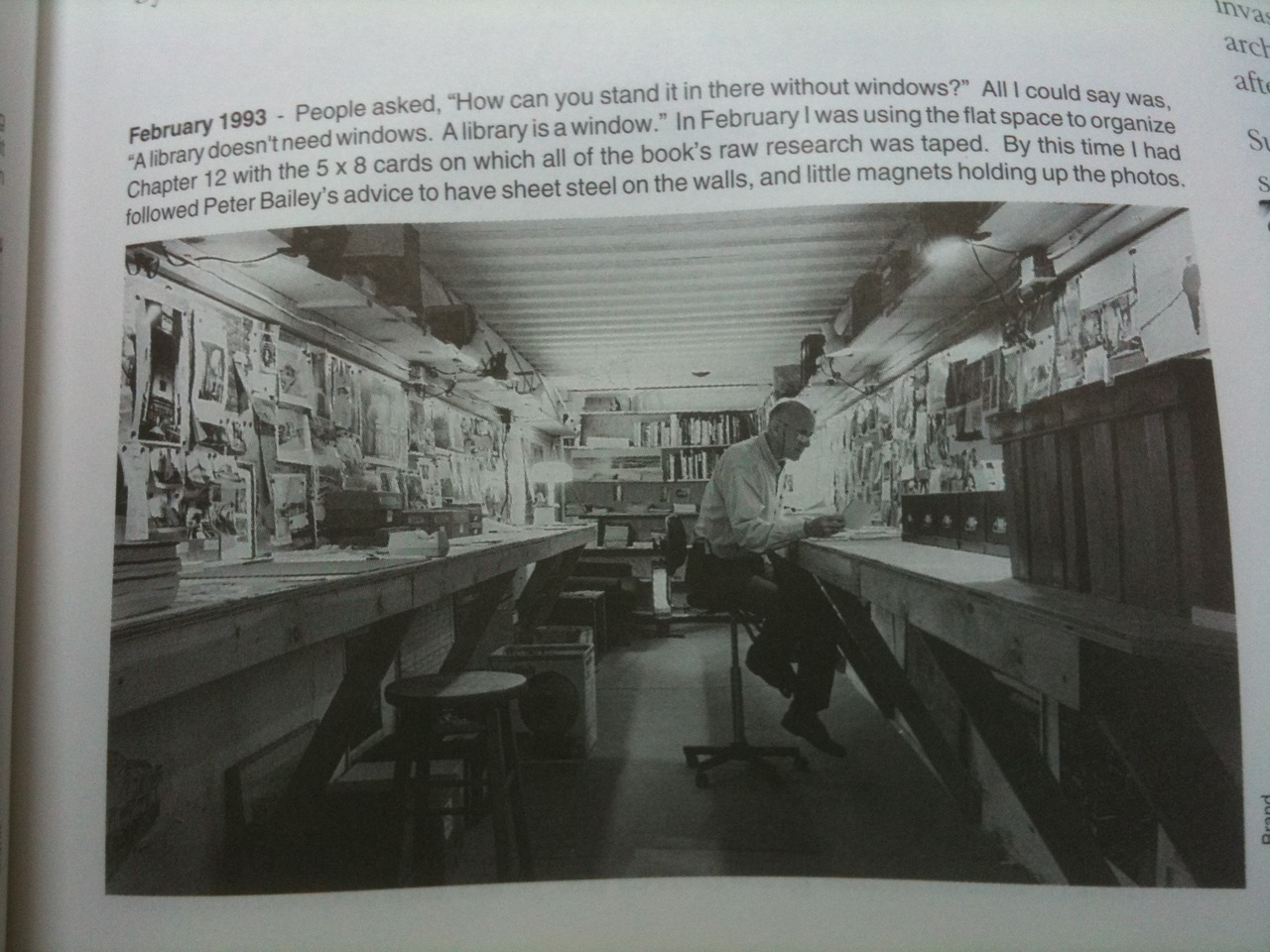

Huge, persistent infospaces: I love this photo of Stewart Brand in How Buildings Learn. He’s in a focused workspace, surrounded by hundreds of photos and 3”x5” cards on both horizontal and vertical surfaces. It’s a common trope among writers: both to “pickle” yourself in the base material and to spread printed manuscript drafts across every available surface. I’d love to work like this every day, but my “office” is a tiny corner of my bedroom. I don’t have room for this kind of infospace, and even if I did, I wouldn’t want to leave it up overnight in my bedroom. There’s tremendous potential for the Vision Pro here. And unlike the physical version, a virtual infospace could contend with much more material than could actually fit in my field of view, because the computational medium affords dynamic filtering, searching, and navigation interactions (see Softspace for one attempt). And you could swap between persistent room-scale infospaces for different projects. I suspect that visionOS’s windowing system is not at all up to this task. One could prototype the concept with a huge “volume”, but it would mean one’s writing windows couldn’t sit in the middle of all those notes. (Update: maybe a custom Shared Space would work?)

Ubiquitous computing, spatial computational objects: The Vision Pro is “spatial computing”, insofar as windows are arranged in space around you. But it diverges from the classic visions along these lines (Ubiquitous computing, Dynamicland) in that the computation lives in virtual windows, without more than a loose spatial connection to anything physical in the world. What if programs live in places, live in physical objects in your space? For instance, you might place all kinds of computational objects in your kitchen: timers above your stove; knife work reference overlays above your cutting board; a representation of your fridge’s contents; a catalog of recipes organized by season; etc. Books and notes live not in a virtual 2D window but “out in space”, on my coffee table (solving problems of Peripheral vision). When physical, they’re augmented—with cross-references, commentary from friends, practice activities, etc. Some are purely digital. But both signal their presence clearly from the table while I’m wearing the headset. My memory system is no longer stuck inside an abstract practice session; practice activities appear in context-relevant places, ideally integrating with “real” activities in my environment, as I perform them.

Shared spatial computing: Part of these earlier visions of spatial computing, and particularly of Dynamicland, is that everything I’m describing can be shared. When I’m interacting with the recipe catalog that lives in the kitchen, my wife can walk by, see the “book” open and say “Oh, yeah, artichokes sound great! And what about pairing them with the leftover pork chops?” I’ll reserve judgment about the inherent qualities of the front-facing “eye display” until I see it in person, but no matter how well-executed that is, it doesn’t afford the natural “togetherness” of shared dynamic objects. Particularly exciting will be to create this kind of “togetherness” over distance. I think a “minimum viable killer app” for this platform will be: I can stand at my whiteboard, and draw (with a physical marker!), and I see you next to me, writing on the “same surface”—even though you’re a thousand miles away, drawing on your own whiteboard. FaceTime and Freeform windows floating in my field of view don’t excite me very much as an approximation, particularly since the latter requires “drawing in the air.”

Deja vu

A few elements of visionOS’s design really tickled me because they finally productized some visual interface ideas we tried in 2012 and 2013. It’s been long enough now that I feel comfortable sharing in broad strokes.

The context was that Scott Forstall had just been fired, Jony Ive had taken over, and he wanted to decisively remake iOS’s interface in his image. This meant aggressively removing ornamentation from the interface, to emphasize user content and to give it as much screen real estate as possible. Without borders, drop shadows, and skeuomorphic textures, though, the interfaces loses cues which communicate depth, hierarchy, and interactivity. How should we make those things clear to users in our new minimal interfaces? With a few other Apple designers and engineers1, I spent much of that year working on possible solutions that never shipped.

You might remember the “parallax effect” from iOS 7’s home screen, the Safari tabs view, alerts, and a few other places. We artificially created a depth effect using the device's motion sensors. Internally, even two months before we revealed the new interface, this effect was system-wide, on every window and control. Knobs on switches and scrubbers floated slightly above the surface. Application windows floated slightly above the wallpaper. Every app had depth-y design specialization: the numbers in the Calculator app floated way above the plane, as if they were a hologram; in Maps, pins, points of interest, and labels floated at different heights by hierarchy; etc. It was eventually deemed too much (“a bit… carnival, don't you think?”) and too battery-intensive. So it's charming to see this concept finally get shipped in visionOS, where UIKit elements seem to get the same depth-y treatments we'd tried in 2012/2013. It's much more natural in the context of a full 3D environment, and the Vision Pro can do a much better job of simulating depth than we'd ever manage with motion sensors.

A second concept rested on the observation that the new interface might be very white, but there are lots of different kinds of white: acrylic, paper, enamel, treated glass, etc. Some of these are “flat”, while others are extremely reactive to the room. If you put certain kinds of acrylic or etched glass in the middle of a table, it picks up color and lighting quality from everything around it. It’s no longer just “white”. So, what if interactive elements were not white but “digital white”—i.e. the material would be somehow dynamic, perhaps interacting visually with their surroundings? For a couple months, in internal builds, we trialled a “shimmer” effect, almost as if the controls were made of a slightly shiny foil with a subtly shifting gloss as you moved the device (again using the motion sensors). We never could really make it live up to the concept: ideally, we wanted the light to interact with your surroundings. visionOS actually does it! They dynamically adapt the control materials to the lighting in your environment and to your relative pose. And interactive elements are conceptually made of a different material which reacts to your gaze with a subtle gloss effect! Timing is everything, I suppose…

Only some of the WWDC videos about the Vision Pro have been released so far. I imagine my views will evolve as more information becomes available.

Surprising design features

Having now watched all the WWDC 2023 talks:

- ARKit persists anchors and mapping data based on your location.

- Practically speaking, this means that if you use an app to anchor some paintings to your wall at home, then go to your office, you won’t see the paintings there. You can persist new paintings on the walls in your office. Then when you return home, the device will automatically reload the anchors and map associated with that location—i.e. you’ll see the objects you anchored at home.

- This seems like a critical component of the more ambitious spatial computing paradigms I allude to above, and particularly to many Ambient computing/ubiquitous computing ideas.

- It’s very clever, and very important to the system’s overall aesthetic, that they’ve made most interface materials “glass”. This decision leans into the device’s emphasis on AR—computing within your space—rather than on VR—computing within an artificial space.

- Not only are these transparent, but their lighting reflects the lighting of your space. And it’s not just backgrounds—secondary and tertiary text elements are tinted (“vibrancy”) to make them coordinate with the scene around you.

- The net effect is lightness: you can put more “interface” in your visual field without making it feel claustrophobic.

- This is a “virtual AR” device, so it’s easy to be fooled into thinking that it’s “just” displaying the camera feed, as Quest does. But it also gathers mesh and lighting information about the room, and it uses that to transform the internal projection of the environment in certain cases.

- For instance, the film player bounces simulated emissive light from the “screen” onto the ceiling and floor, while “dimming” the rest of the room.

- Controls and interface elements cast shadows onto the 3D environment.

- “Spatial Personas” allow FaceTime sessions to “break out of” the rectangle and be displayed as simulated 3D representations in space. Pretty wild, if uncanny.

- The window/volume-centric abstraction allows SharePlay to fully manage synchronization of shared content and personas in 3D space, so that each person has the same arrangement of where each other person is relative to them in space; the app developer just specifies whether this is a “gather-round” type interaction, a “side-by-side” type interaction, etc.

- These are interesting abstractions, and I can see how they really lower the floor to making interactions “sharable by default” with very little developer intervention, even while they constrain away some more ambitious modalities.

- In the absence of haptics, spatial audio is emphasized as a continuous feedback mechanism. Unlike most personal computers, this device has built-in speakers which (I’m guessing) can be assumed to be on by default, because there’s probably little leakage to the environment. That’s pretty interesting.

- Portals are an interesting choice of primitive abstraction in RealityKit. Because Vision Pro emphasizes remaining in your physical space in most circumstances, portals offers a way to bring immersive 3D content into your environment without overtly supplanting it.

1 Something in the Apple omertà makes me uncomfortable naming my collaborators as I normally would, even as I discuss the project itself. I guess it feels like I’d be implicating them in this “behind-the-scenes” discussion without their consent? Anyway, I want to make clear that I was part of a small team here; these ideas should not be attributed to me.

Experiment log 2024-02 February

2024-02-05

- Comfort: already a big problem for me.

- I tried wearing the Solo Band for a 1.5 hour flight and was extremely uncomfortable by about halfway through.

- I switched to the Dual Loop Band, and… I can tell that this is not something I can wear all day without break. I’m about 45m in right now, and it’s noticeably uncomfortable, after numerous adjustments according to Apple’s fit guide. I imagine I’ll get used to it over time, maybe?

- I’m taking hourly breaks for ~10m. This helps.

- I find myself cycling the pressure back and forth between my cheeks and forehead. One side gets sore, then I shift a little to the other, and vice versa.

- I can’t imagine using this device without perusing the fit guide repeatedly (“how to take pressure off my cheeks again?”). I wonder if it’ll be integrated into the software

- Update, five hours of use later: the discomfort is substantially better—I think I’m slowly finding a better balance.

- I really want to try Gray Crawford’s brilliant cap+rubberband arrangement, but I’m traveling at the moment and don’t have one at hand.

- The LED ceiling lights in this room are at some slightly incompatible frequency with the cameras, so there’s a very subtle judder of the lighting on the surfaces around me. Immersive environments solve this.

- Mac mirroring:

- Latency is good for general use but round-trip latency when typing is noticeable. Not a lot, but present. Feels a bit like typing into a beefy Electron/web app. Just a little friction.

- Text is… not really quite sharp when the screen is at a natural scale. I imagine the 5K->4K scaling and streaming compression do some injury, but I think that’s not the central issue—if I move my head close to the mirrored text, it’s quite sharp. Just too large. It’s tolerable, but at the moment it’s hard to imagine wanting to use this as a primary “external display” for my Mac. Maybe as a travel display, or something which

- The mirroring window subtends quite a lot of the (limited) horizontal FoV, and I find it surprisingly difficult to get it in oriented, sized, and positioned comfortably.

- It does need to be placed “correctly” in Z, so that my eyes focus at a comfortable distance. The system seems to want to default to everything being pretty far away.

- I wish I could control window rotation. The default behavior wants to rotate just a bit too much for me. It’s encouraging me to move my head more than I want to—I want to just use my eyes to a greater degree than the defaults make easily possible.

- To get a shallower rotation, you need to “strafe”: sidestep with a slight rotation, grab the window, and it’ll match your current orientation. Then re-center your body.

- One frustrating friction: interacting with a different visionOS window causes the frontmost macOS window to lose focus. To give the frontmost window focus again (i.e. so that one can type into it), you must look and pinch. Pinching is a fairly costly interaction; I think I expect to just be able to look and type, in the same sense that looking and pinching works. Probably that’s not straightforward to have as a default because too many people need to look at the keyboard to type.

- Large white-background windows (e.g. Bear, Safari) are somewhat uncomfortably bright. Dark mode fixes this problem, but text sharpness becomes even worse.

- My Apple Bluetooth keyboard is getting intermittently disconnected (“Input temporarily unavailable”), which never ever happens when I use my Mac by itself.

- Interaction

- The look+pinch interaction is of course an astounding feat of technology. Simultaneously… it’s quite sluggish. Actions per second are <= 1.

- I think this means that serious work in this interaction modality will have to look very different. Serious work can’t depend on a high volume of discrete gestures, as we do on Mac and touch interfaces. What will it be instead? Perhaps continuous interaction modalities? It seems pretty clear to me that Apple’s not focused on serious work for this device, at least in 1.0. I wouldn’t want to use Mail in a professional capacity with this device.

- One interesting consequence is that this is actually a much more calm computing environment. Distraction is no longer a single cmd+tab away. The feeling is a little more like setting up a physical workspace: gotta set up all the little objects and supplies you need within reach, then that’s what’s there.

- When controls are placed close to each other, the system does struggle to discriminate which one has my gaze. One unintuitive discovery: it actually helps a great deal to move the window “closer” in simulated distance, even without changing its absolute perceptual size. I think this is because binocular disparity is larger for close objects. When the window is far away, binocular disparity is minimal, so the gaze tracker has to discern a 1° difference just by looking at the position of my eyes.

- Writing in Bear (my note-taking environment)

- Trying the iPad Bear app in “compatibility mode”. It’s definitely a bit out of proportion. Syncing is taking a very long time (>2hrs). The app has disappeared several times—probably exceeded memory limits. I’ve had to relaunh it each time to resume sync.

- OK, two hours later, I got my Bear library running on the device. The text is slightly sharper than the Mac mirroring, but only slightly. I find I need to make the window 50-75% larger (in simulated physical size) than a comfortable editor size on my Mac display.

- Unfortunately, Bear’s iPad app isn’t really robust enough for me to use happily.

- It doesn’t support multiple windows, so I’m stuck with only one note open. That seems fairly reasonable on iPad but isn’t suitable for most serious work I do, and of course feels ridiculous with the boundless space visionOS provides.

- On macOS I make heavy use of an Alfred workflow to jump to a desired note. Bear lacks a built-in “Quick Open” interaction that one might expect from a pro writing environment. One is stuck using the search feature in the notes list, which is unacceptably slow.

- So, for now, I’m using the visionOS Bear instance as a persistent editor for this note (my Vision Pro log), and my macOS mirrored screen to have several other secondary notes displayed.

- It’s remarkable how well the gaze interactions work for text. I can almost position the cursor by just looking at where I want to go. I get an exact match ~half the time. It’s off by a line up or down the rest of the time. This is quite remarkable—it’s sort of like what

avyand Raskin’s Canon Cat “LEAP” interaction want to be. “Go where I’m looking.” It’s not there yet, but in a few years, it’ll feel like a Poor man’s brain-computer interface, I think. - Repositioning the cursor by pinch+drag is pretty elegant.

- Selection is more complicated and somewhat less graceful

- Experiment: reading a paper in visionOS

- Unsurprisingly, it’s really not meant for this.

- You can view a PDF in Safari, but the extra chrome is distracting, and the tools are poor (just Safari’s built-in search), no page thumbnails, etc.

- Alternately, you can use the PDF viewer built into the Files app. It’s basically the iPadOS viewer, which is to say that it’s quite minimal.

- The white “paper” is uncomfortably bright.

- I sized the document to match its printed size (8.5”x11”) and positioned it at the distance I’d normally read a document of that size. In this configuration, the text is… a bit soft. Feels a bit like reading on a pre-retina display, though I think what’s going on is probably more complicated than that.

- Broadly, my impression is that the reading experience here has the main problem of reading on screens (staring at a bright white backlight is unpleasant) with additional problems of worse sharpness and physical discomfort from the heavy HMD.

- To make the text sharp enough for comfortable reading, I increased the simulated page size to roughly that of a vertical 27” display.

- The markup features are basically an unaltered port of the iPadOS interactions, with pinched fingers mapping to trackpad input (just like Freeform). It’s not usable at all; I’m surprised they didn’t just disable it.

- Likewise, selecting or copying text from the PDF is slow and cumbersome (though I applaud the ambition at trying to use gaze alone for character-level targeting—someday, that’ll work!)

- Continuous-scrolling a PDF is a very unpleasant way to move through it on this device. I don’t like it reading PDFs that way on other devices, either, but the indirect scrolling interaction on visionOS makes it even worse. I find myself strongly wishing it snapped to page boundaries.

- At present, the spatial medium doesn’t really give any new benefits to the reading experience in return for all these deficits. But, of course, it could. I have some ideas along those lines. Separately, like reading almost anything on a normal computer, we’re really not taking advantage of the dynamic/computational medium.

- Not something I’d be excited to do again soon.

- Building my first prototypes… Swift remains obnoxious, though full of interesting ideas. Maybe this’ll be the time I finally get used to SwiftUI’s layout semantics. For now, feeling slow and encumbered.

- Pleasantly surprised that SwiftUI live previews will actually deploy-to-device, and update relatively quickly (latency: ~2s). But every time any change is made, the preview window disappears and then appears in a new location (center of your viewport). Too disruptive for serious use.

2024-02-06

- Today I’ve swapped my Bluetooth mouse and keyboard for just using my MBP’s built-in keyboard and trackpad. I presume this will resolve the strange disconnection issues I experienced yesterday. But it’ll also let me use the pointer in visionOS apps: Bluetooth mice aren’t supported; only trackpads.

- I notice a lot of palm rejection issues very quickly: the trackpad is activating incorrectly within visionOS apps while I’m typing, whereas this never happens in macOS. I imagine that something like the raw HID events are being passed over from my Mac to the AVP, and the macOS palm rejection occurs later in the event pipeline. It’s obnoxious but not a deal-breaker.

- Being able to scroll with two fingers on the trackpad is so much faster and more accurate for serious work than the camera-tracked pinch+move gesture.

- Interestingly, no, I’m still intermittently getting the “input temporarily unavailable” dialog, even while the macOS display mirroring remains. I guess they’re separate connections.

- Tried using the “invert colors” accessibility function on my Mac to read white-backgrounded documentation more comfortably. No effect on the streamed video.

- There are some beautiful beams of sunlight falling into my kitchen this morning. Wearing the AVP, I feel some regret—I’m “missing” them! The pass through-rendered sunbeams are flat and dull.

- When iterating on a visionOS app on-device, window positions are not preserved across rebuilds. Inevitably, this means they open behind my macOS mirroring window (in Z) and must then be expensively repositioned.

- On-device iteration latency with small change to one file is about ten seconds. Apple sells a special USB-C attachment for the AVP to improve data transfer rates, but that’s not gating here: most of the time is spent compiling and launching the app via debugger, not copying it.

- One funny disadvantage to using my AVP as my macOS display: I can’t keep WiFi disabled on my Mac! I normally do as a simple impulse control measure during my working blocks.

- Some notes on developer restrictions / availabilities discovered this morning:

- You can only access full hand tracking data when in an immersive space. That’s quite limiting. Gray Crawford suggests that you should be able to access hand tracking data when your hand intersects an app’s volume. That makes some sense, although one hitch is that volume boundaries aren’t currently visible.

- Using

DragGesture, though, it appears that you can access 6DOF information about a pinch+drag actuated on any type of scene. - Getting full world tracking requires both immersion and explicit user permission, but

AnchorEntity(.head)might not, because it doesn’t actually expose the transform matrix?

2024-02-07

- Now on the third day, I think I’ve figured out how to wear the device comfortably. No longer struggling with the straps so much help. Not sure if this was a matter of finding the right configuration or of building tolerance.

- Spending a lot of time this morning fighting with the Xcode 15.3b2 / visionOS 1.1b1 configuration. It’s no longer willing to deploy to device.

- Three hours later, still at it. I think it might be an issue with my personal laptop still having some residual Apple internal IS&T bit set on it, a decade later?? Console error messages are complaining about trying to use AppleConnect, which is the internal SSO. No results for the error code anywhere on the internet—never a good sign. Rummaging around private developer frameworks in Hopper now. Not good, not good.

- Five hours later, fixed it: a sysdiagnose turned up

com.apple.ist.ds.appleconnectas enabled in the list of disabled services. It didn’t actually have a corresponding launchd plist anywhere, but maybe something was checking for it. I ransudo launchctl disable com.apple.ist.ds.appleconnect, thensudo find / -name “AppleConnect”and deleted some stray preference plists I found. After reboot, it was fixed.

2024-02-08

- Getting a lot of anisotropic strobing when subtly rotating images of PDF pages.

- It seems very difficult to do anything with 3D space in SwiftUI, other than very simple effects like layering 2D surfaces in parallel in 3D, or embedding small 3D models within traditional windows. It seems I’ll need to learn to use RealityKit to do anything interesting.

2024-02-09

- Spending some time this morning re-watching WWDC sessions on RealityKit. I know its Entity-Component-System architecture is pretty standard in the game programming world, but it’s not something I’ve used before. My first impression is that it seems quite graceful.

- SwiftUI gestures can interact with RealityKit views, if they have

InputTargetComponentandCollisionComponent. That must have been quite a complicated integratino. HoverEffectComponentis used for out-of-process hover effects… but it appears that there’s no available configuration at all.

- SwiftUI gestures can interact with RealityKit views, if they have

- Big improvement in Xcode 15.3b2 / visionOS 1.1b1: SwiftUI previews now stay in the same spot when making changes! That’s the upside. The downside is that some part of the process is happening on the UI thread, so every time I change a line, Xcode beachballs for like two seconds while the preview updates. Maddening.

2024-02-10

- It is very pleasant taking AVP outside to my unfinished rooftop, where it’s ordinarily much too bright and glare-y to use a computer. Escaping Backlit displays limit computers to interiors! I could feel the sun and breeze on my skin while still reading sharp displays. Lovely.

2024-02-12

- I’m realizing that I don’t understand the rules of window and volume clipping frustrums.

- If I place a

Model3Dinside a.plainSwiftUI window, it seems to clip at z=-250 and +570 - It also clips when its x and y values extend beyond the window’s frame, irrespective of its z position (and so the projected x/y value may be considerably beyond the window frame if it has a large positive z value).

- It appears that volumetric windows do not provide resize affordances to the user.

- If I place a

Model3Dinside a volumetric window, I can translate to ~±200 outside its z extents before it clips. It clips immediately when exceeding the x or y values of the frustrum.

- If I place a

- Big ugh: RealityKit types are expressed in terms of

Float; SwiftUI types are expressed in terms ofDouble. Also, number literals like0.5are inferred to beDoublenotFloatwhen no context is provided. And Swift does not automatically coerce. What a nuisance. - How to use Xcode Previews to preview immersive scenes? This WWDC talk suggests that one can achieve this by adding the

.previewLayout(.sizeThatFits)modifier to one’s#Previewview, but in my experiments, that only resizes up to relatively small limits. My immersive scenes are clipping in Xcode Preview. - Hm. SwiftUI will make gesture-initiated animations match the user’s velocity, but there seems to be no natural way to imitate this with

RealityKit’s animation primitives. Unless I drop into the full physics body simulator, I guess? Frustrating. - In fact, it seems that RealityKit only supports cubic bezier timing curves. We’re back in 2013!

2024-02-13

- Finding myself doing more iteration in the Xcode simulator, even when the device is at hand. It’s just a little faster for iteration. And unfortunately, when developing an immersive scene, the Xcode Preview doesn’t quite work: it clips, even when using the

.sizeThatFitspreview layout. Iterating on device with immersive scenes has extra friction because the macOS mirroring window disappears while in the immersive scene. - It’s surprisingly difficult to record a good video of visionOS concepts. Using the on-device recording, text comes out quite fuzzy. Using the Reality Composer Pro “Developer Capture” feature causes the device framerate to fall to ~15fps or so, producing a low-quality video.

2024-02-14

- Disappointed to learn that

ARBodyAnchoris unavailable on visionOS, even when in an immersive space and with all available permissions. It’s not clear whether that’s a deliberate decision—to make people-tracking unavailable—or if it’s just part of a broader API migration away fromARAnchorin favor of the newARKitSessionsuite of APIs, which includes analogues of many of theARAnchorsubclasses, but not this one. - Experimented with

ImageAnchorthis morning, tracking physical books.- Using the spine as the target image, it worked only very sporadically. Xcode warns me that AR images work best when they’re not very narrow along one axis.

- Using the book’s cover as the target image, it recognizes fairly reliably, though not at all robust to book motion—it only updates the anchor transform at roughly 1Hz. Seems to be a known issue.

- Interestingly, if you don’t need the full 4x4 transform but can make do with

AnchorEntity’s limited API, you don’t need to request any user permissions to use image recognition (but you do need to be in an immersive space, alas)

2024-02-15

- Debugging an app that uses an immersive space is quite obnoxious. Need to see some

printlogs? Did your app crash? Too bad—the macOS mirroring window isn’t visible! And you can’t even squint at your Mac’s screen through the headset because it’s blacked out while mirroring is enabled, even if the mirroring window isn’t visible. - I spent today trying to place a model of a piano keyboard over my real piano keyboard. Surprisingly difficult to get accurate enough positioning. I’m “calibrating” by placing my index finger on the first and last key of the keyboard. The hand positioning is accurate to within ~2cm, but when the keyboard is 1.5m wide, small errors are very noticeable.

2024-02-16

- Using plane detection as part of my strategy to help calibrate the positioning of the piano keyboard. Planes seem a bit more stable than the finger tracking.

- Doing this kind of work—trying to overlay 3D content over physical content—reveals that the world tracking is… not quite as precise as it seems. Anchors really do visibly “swim” a bit within the world when you’re relatively close to them. When the object in question is a 5-foot piano keyboard, that’s… quite visible. It feels remarkably like Waking Life’s rotoscoping aesthetic, actually.

2024-02-19

- I realize now that the world tracking is really not good enough for basically any object-interactive mixed reality experiences I have in mind. Positions swim too much for tight integration between virtual objects and real ones.

- More optimistically, I think API improvements can go a long way here, because realtime tracking does seem to largely mitigate these issues in the case of hands.

- That is: if I anchor a sphere to my fingertip, keep my finger still, and move my head, then the hand tracking will report different world translations for my fingertip over time (even though it’s not moving), but these deltas are basically compensating for errors in world tracking. The relative transform between the device anchor and the fingertip is mostly consistent with my actual motion.

- The image tracker is a bit janky and unreliable, but with ideal images, it produces a similar effect to the hand tracking: it “fixes” world tracking errors when images re-anchor. The problem is that they reanchor at 1Hz. I think 2-3 Hz would probably be good enuogh for fixed objects (e.g. a bookcase, a tag taped to a table); for manipulable objects, 30 Hz is really the minimum. Dynamicland does ~10 Hz with much processing power and pre-DL CV algos. Surely this is possible.

- Speaking of Dynamicland, I realize that an important advantage it (and other Ubiquitous computing) systems have over these HMDs is: the camera has a fixed, calibrated world position. (Also the table/wall surface can usually be assumed to have known planar world coordinates). These HMD systems are simultaneously estimating the world positions of the tracked object and the user’s head. Poor things. No wonder there’s error.

2024-02-26

- Iterating on immersive spaces is really unpleasant for reasons I’ve described above. But today I figured out how to make the iteration cycle time go from ~15s to ~2s! The time cost is mostly LLDB attachment time. Unchecking “Debug executable” in the scheme editor makes things much much faster.

- Why are transforms named

originFromAnchorTransform? This seemed backwards to me at first: isn’t this the transform which moves the anchor, from the origin? The name makes sense if you think of it as a transform which takes a point in the anchor’s coordinate system and moves it into the world coordinate system.

WWDC 24 Notes:

Platforms state of the union

- 38:26: custom hover effects in SwiftUI—i.e. revealing more information on hover

- 1:01:53: object tracking API, unclear sample rate

Some important new vision features for enterprise customers: BarcodeDetectionProvider will let us track barcodes and QR codes. And… you can request camera data. But only for enterprises. Bluh.

Explore object tracking with visionOS

Explore object tracking for visionOS - WWDC24 - Videos - Apple Developer

- 3D models are used to train (at dev time) a model to recognize the object

- Can capture the model using Apple’s “Object Capture” tech

- Training can take a few hours

- Enterprise API can change the tracking rate. Not sure what the default tracking rate is.

- Supported objects should be “mostly stationary”—womp wimp

- Can use “occlusion materials” to make virtual objects “disappear” behind the physical objects

Enhanced spatial computing experiences with ARKit

Create enhanced spatial computing experiences with ARKit - WWDC24 - Videos - Apple Developer

- Room tracking can find boundaries of rooms so that you can trigger behavior according to moving between rooms (which have stable identity, and an associated set of plane and mesh anchors)

- Hand anchor data is now delivered at frame rate!!

Discover RealityKit APIs for iOS, macOS and visionOS

https://developer.apple.com/videos/play/wwdc2024/10103/?time=164

- Can make a hover-dependent shader

Create custom hover effects in visionOS

Create custom hover effects in visionOS - WWDC24 - Videos - Apple Developer

- Content effects can apply styling modifications on hover which don’t change layout

- The account icon example achieves an apparent size change by changing its clipping path

- Can add delays to effects enabling or disabling to avoid visual noise