Andyʼs working notes

About these notesLog: Quantum Country analysis

==Remember:== Recall rates are a misleading proxy for more meaningful goals of the mnemonic medium

Question queue:

- 2022-01-27: are people who forget a lot more likely to churn?

- 2022-01-20: 1-day “make-up” sessions rather than 5-day (as in aggressiveStart) yields higher accuracies in that session (Quantum Country users who forget in-essay exhibit sharp forgetting curves), but what does that mean in terms of the overall schedule? We’re seeing spacing effect vs. some kind of “correctness” reinforcement effect here… can I use this to create any kind of holistic measure? measure “stability”?

- 2022-01-20: Plot per-card forgetting chart like the one in QCVC questions are initially forgotten at very different rates for initially-forgotten cards, to reinforce Quantum Country users who forget in-essay exhibit sharp forgetting curves

- 2022-01-14: Make a per-card response time plot, segmented by condition.

- 2022-01-14: Examine the effect of initial delays on compliance.

- 2021-03-09: Do in-essay response times predict in-essay accuracy? That is, can we use them to identify prior ability?

- 2021-03-03: Does response time predict next question accuracy? If I take a long time to think about a forgotten response am I more likely to remember it next time? Or the reverse?

- Look at question correlations: can we use them to see dependencies?

2022-06-28

Updating QC data. 20220628094431

Not much change. Even less forgetting now!

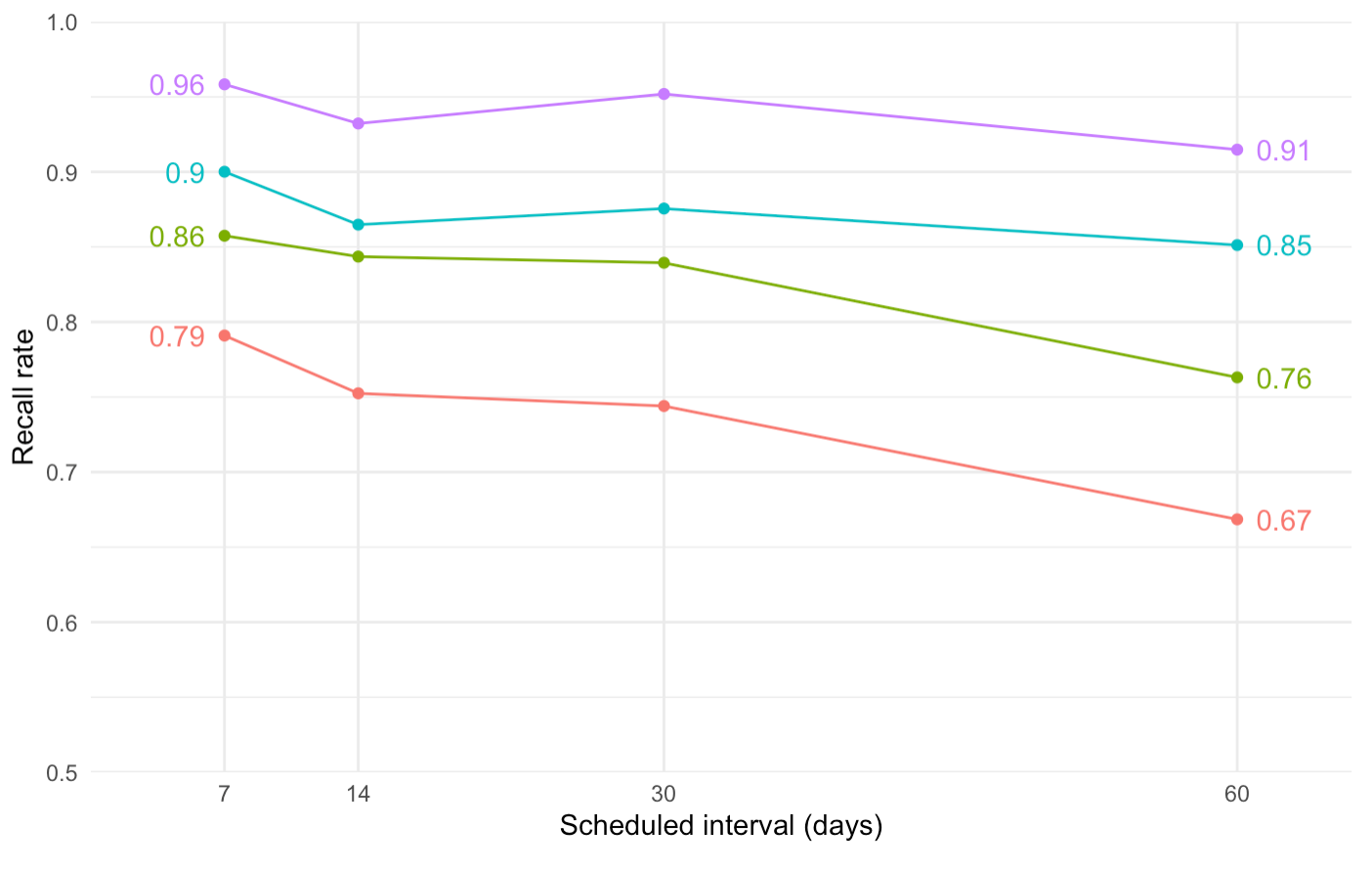

(vs, in February):

At least the 30 day cohort is behaving a little more sanely.

2022-02-24

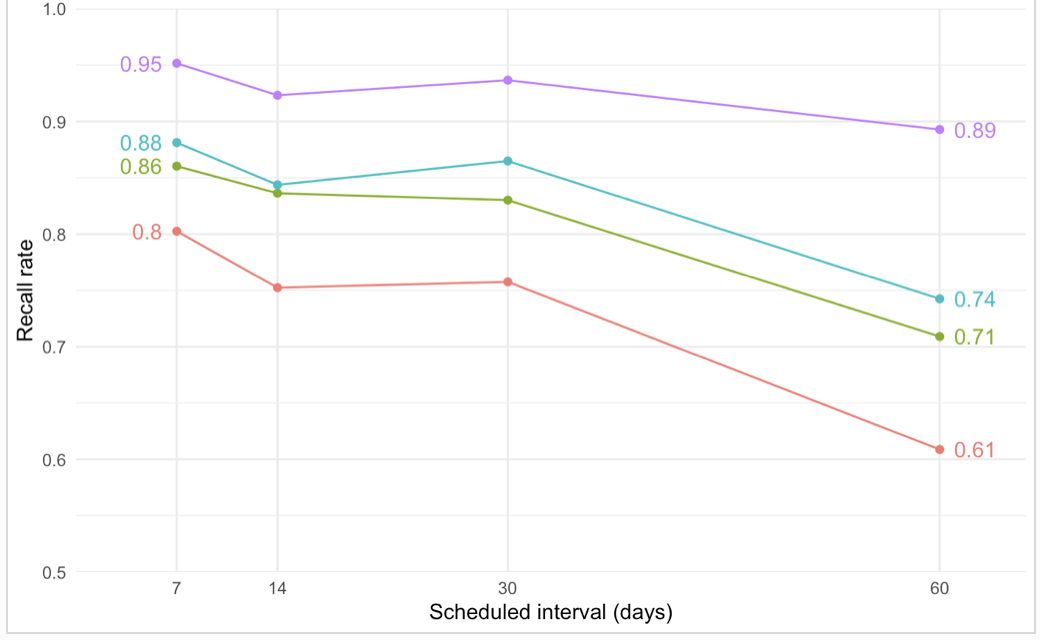

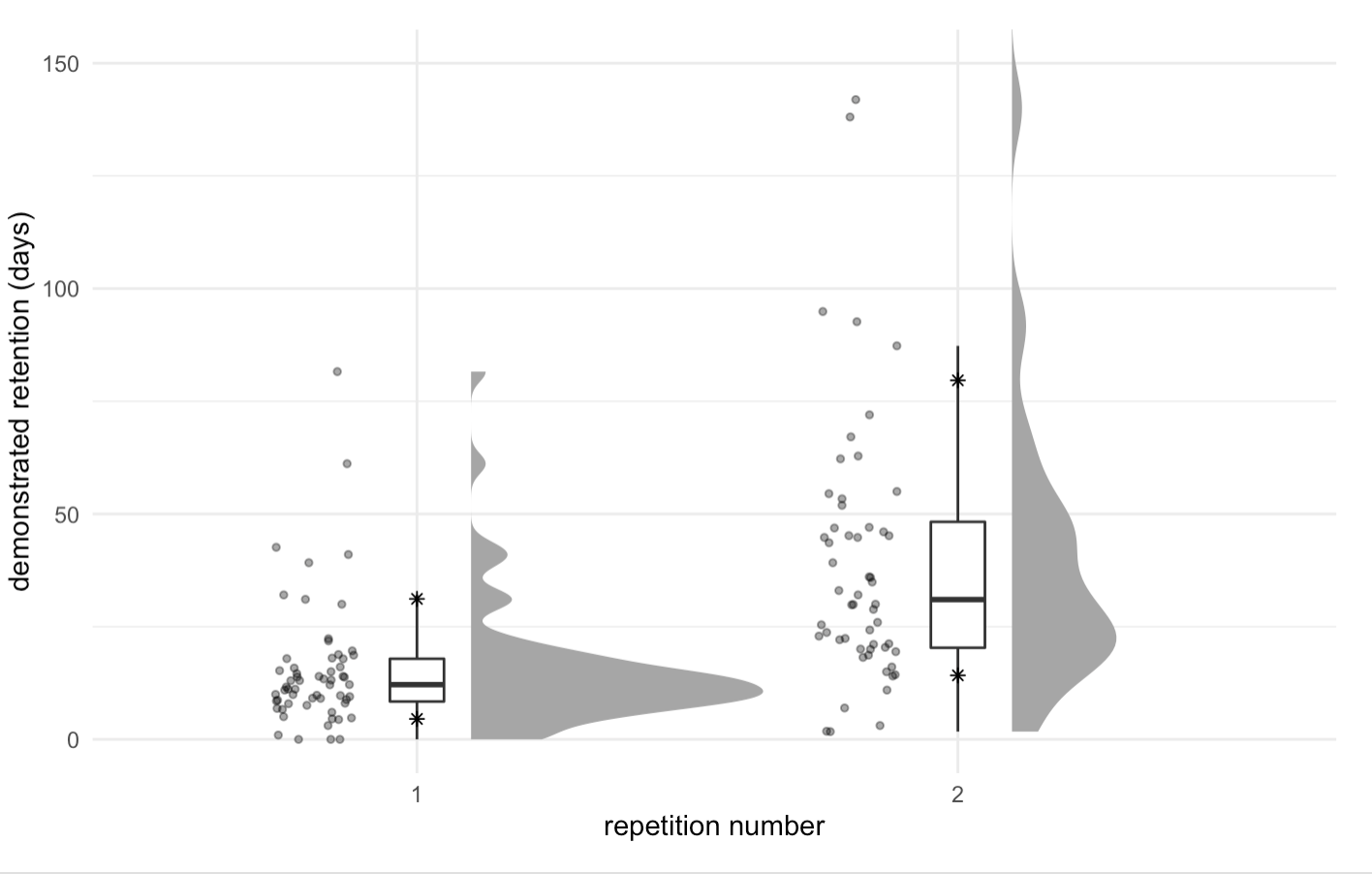

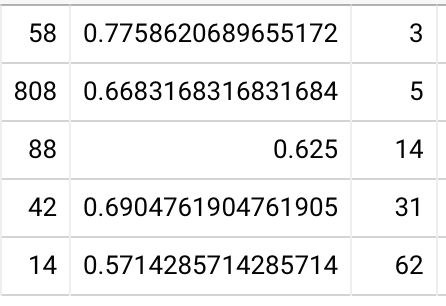

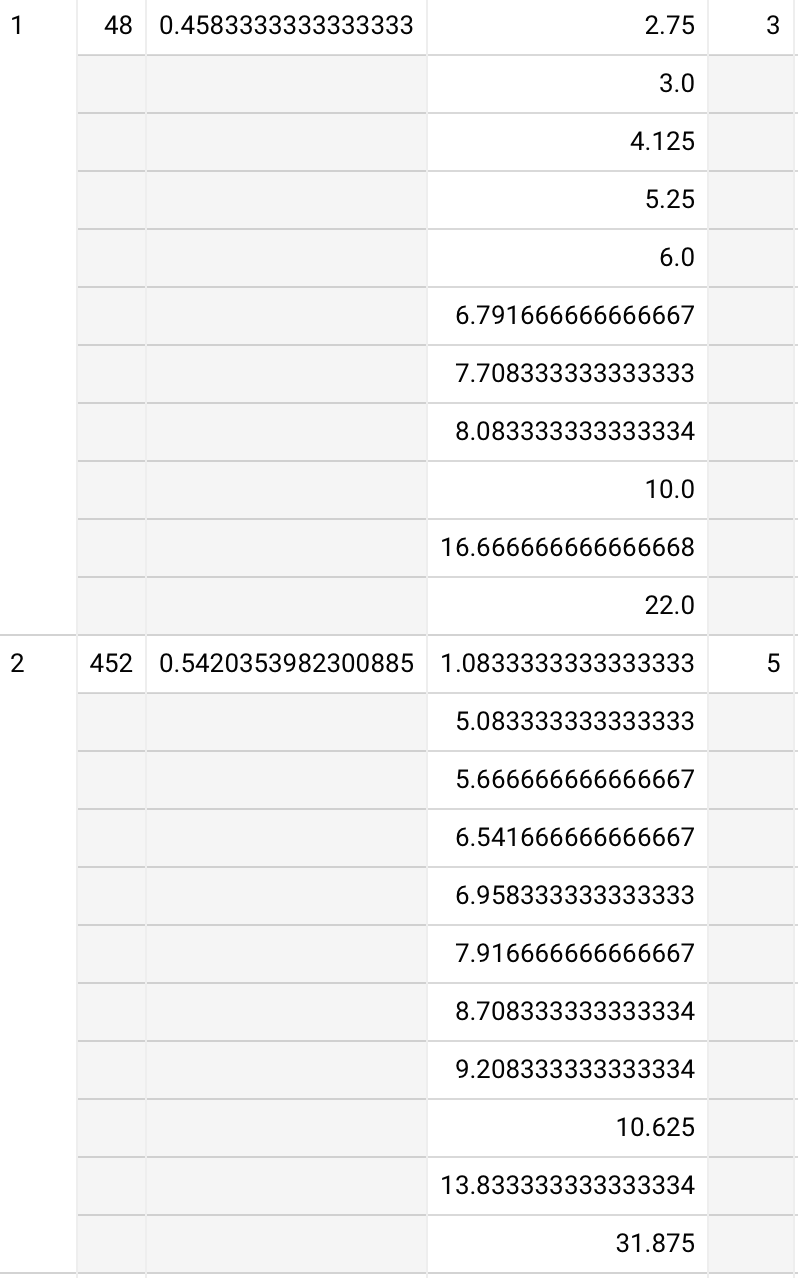

More detailed plots which more or less tell the same story as what I narrated on 2022-02-24:

Note that the bottom-quartile 60 day data here comprises only about 6 users. And this data is quite skewed because I’m not including only people who completed an entire first review.

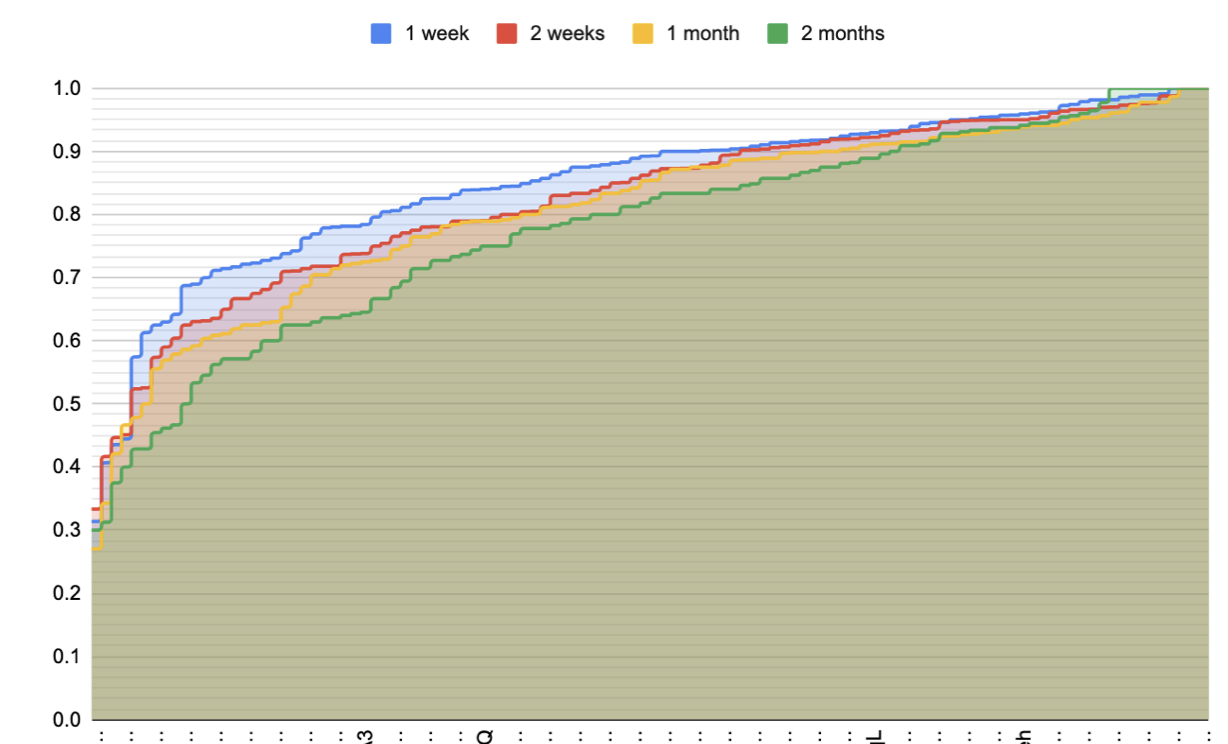

Using only those who finished their first repetition, I get a sharper story:

The trouble here is that I only have 4 (!!) data points for the 60-day mark.

2022-02-17

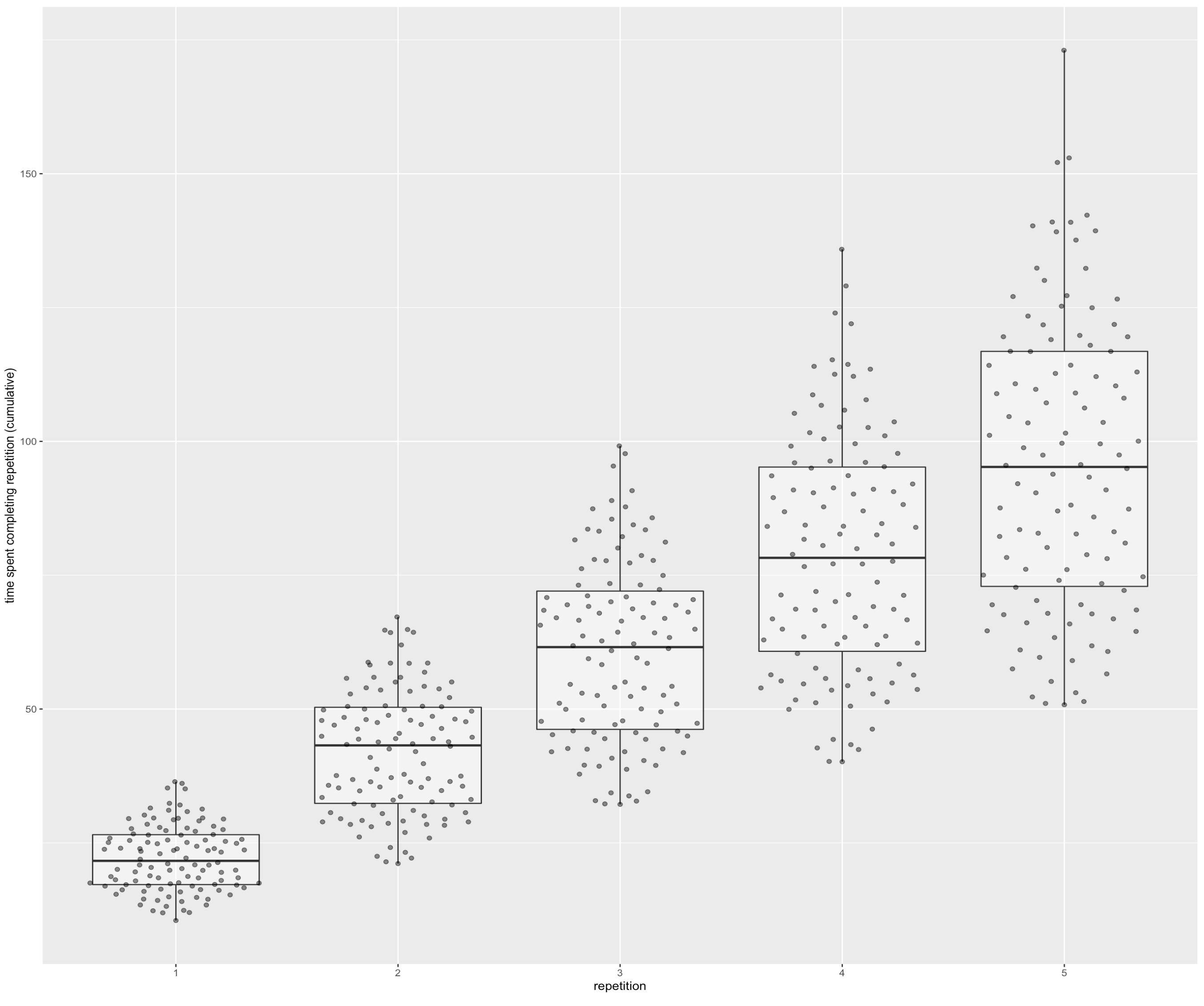

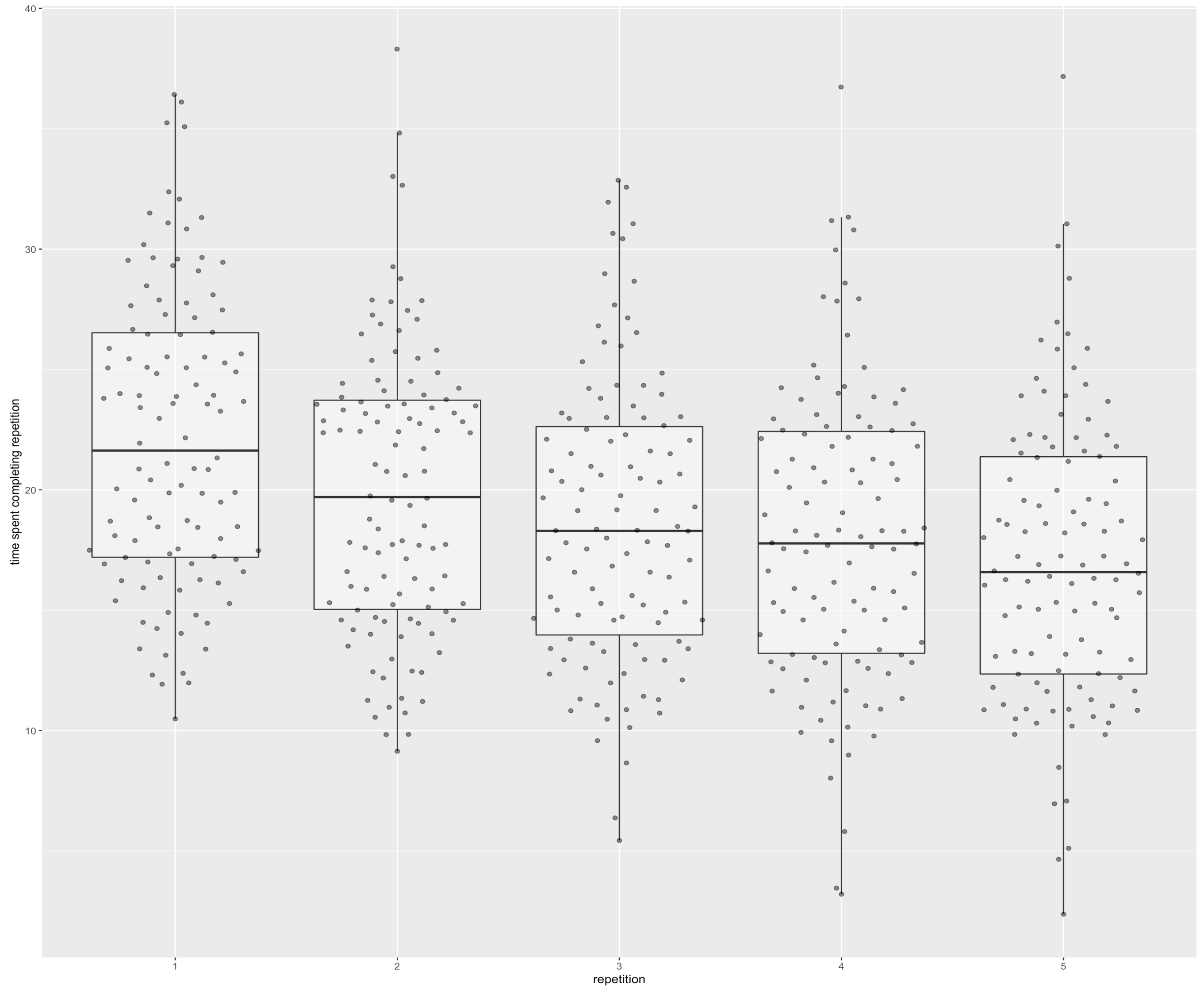

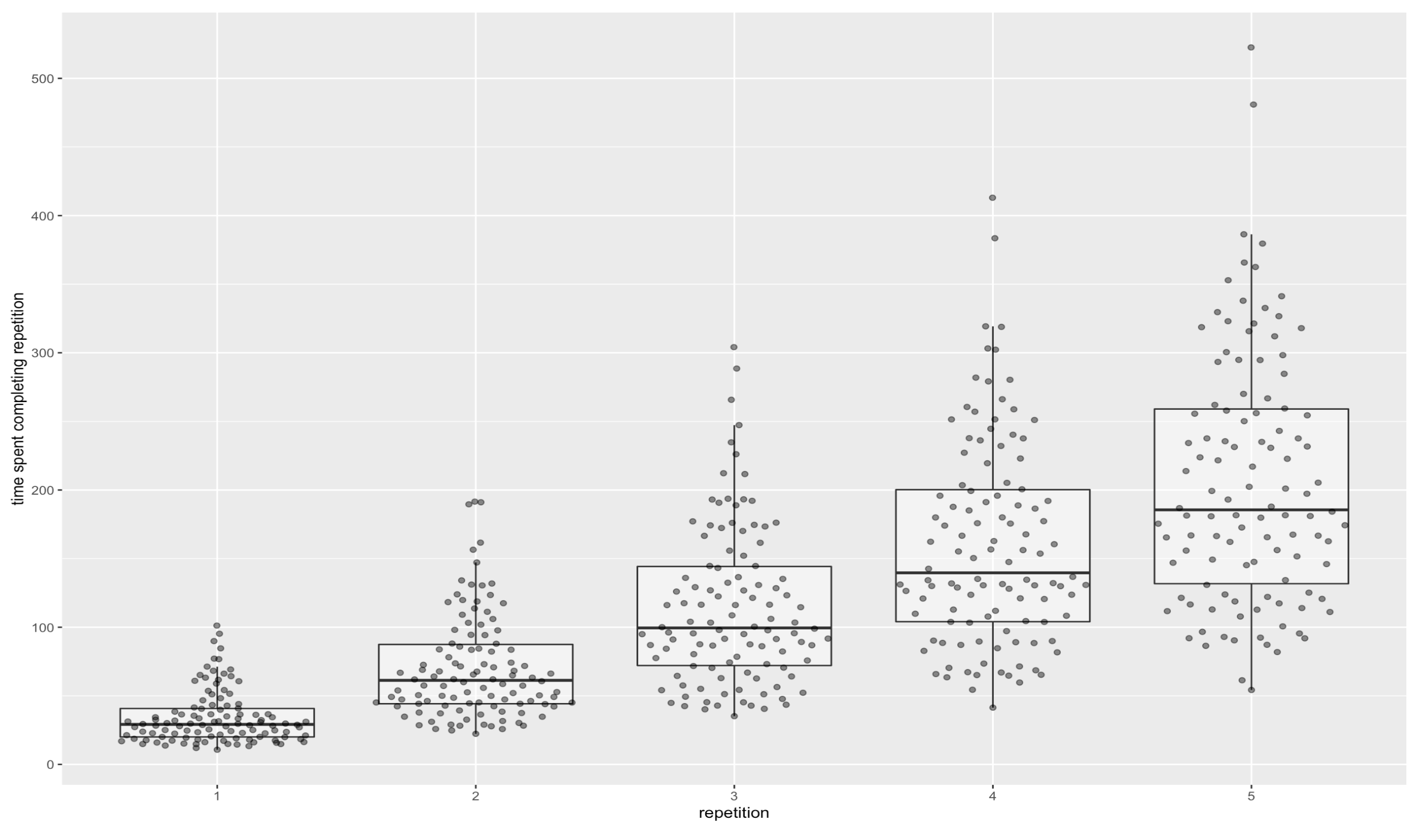

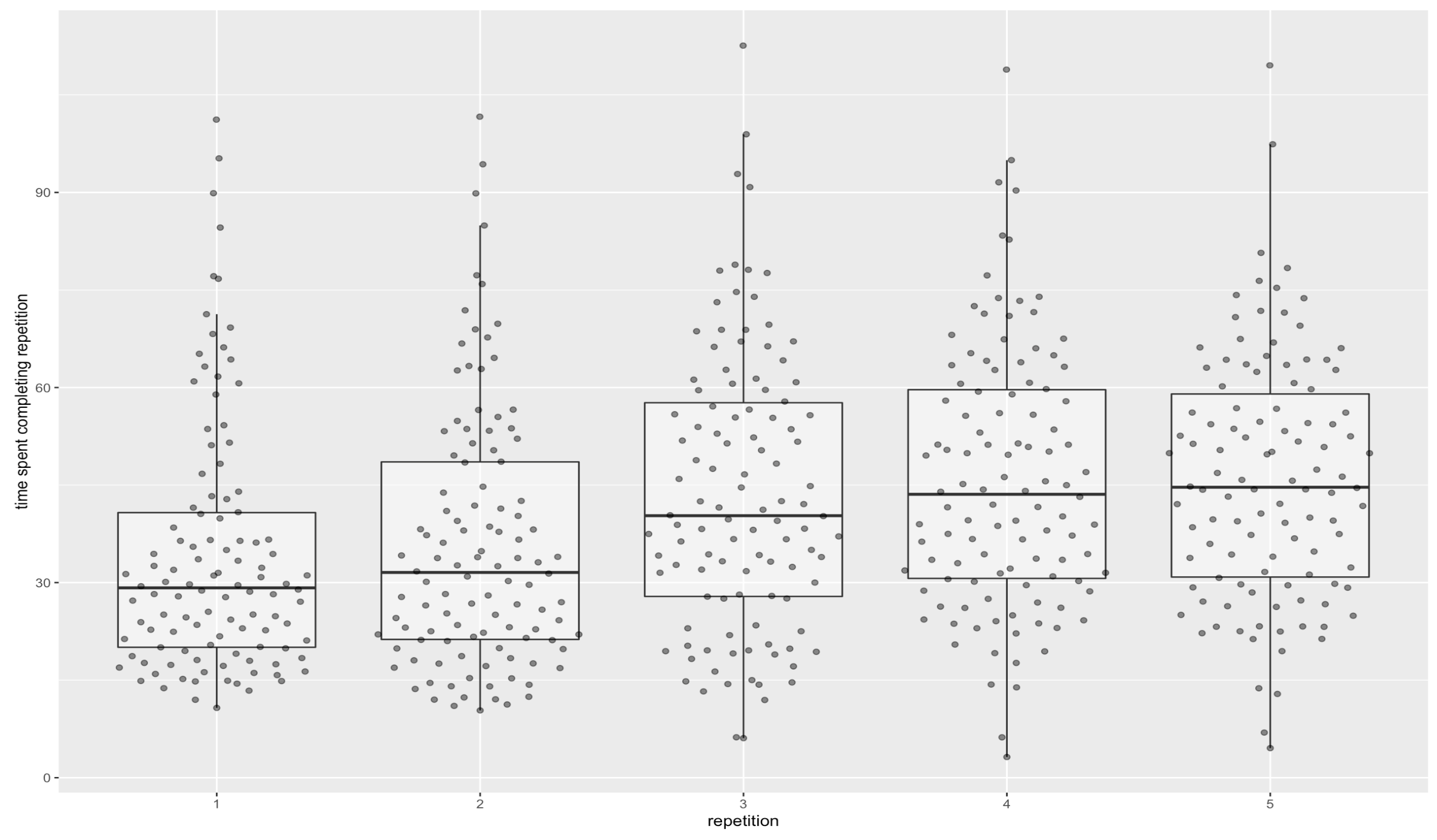

Plotted time costs by repetition:

20220217132449

Those plots are cheating a little bit, since they only count the reviews that are actually part of a given repetition. I think that’s fair, since in practice if you just wanted to do four repetitions, you could e.g. elide all reps after that, and you’d get these numbers. But if you want “more fair” numbers:

20220217132456

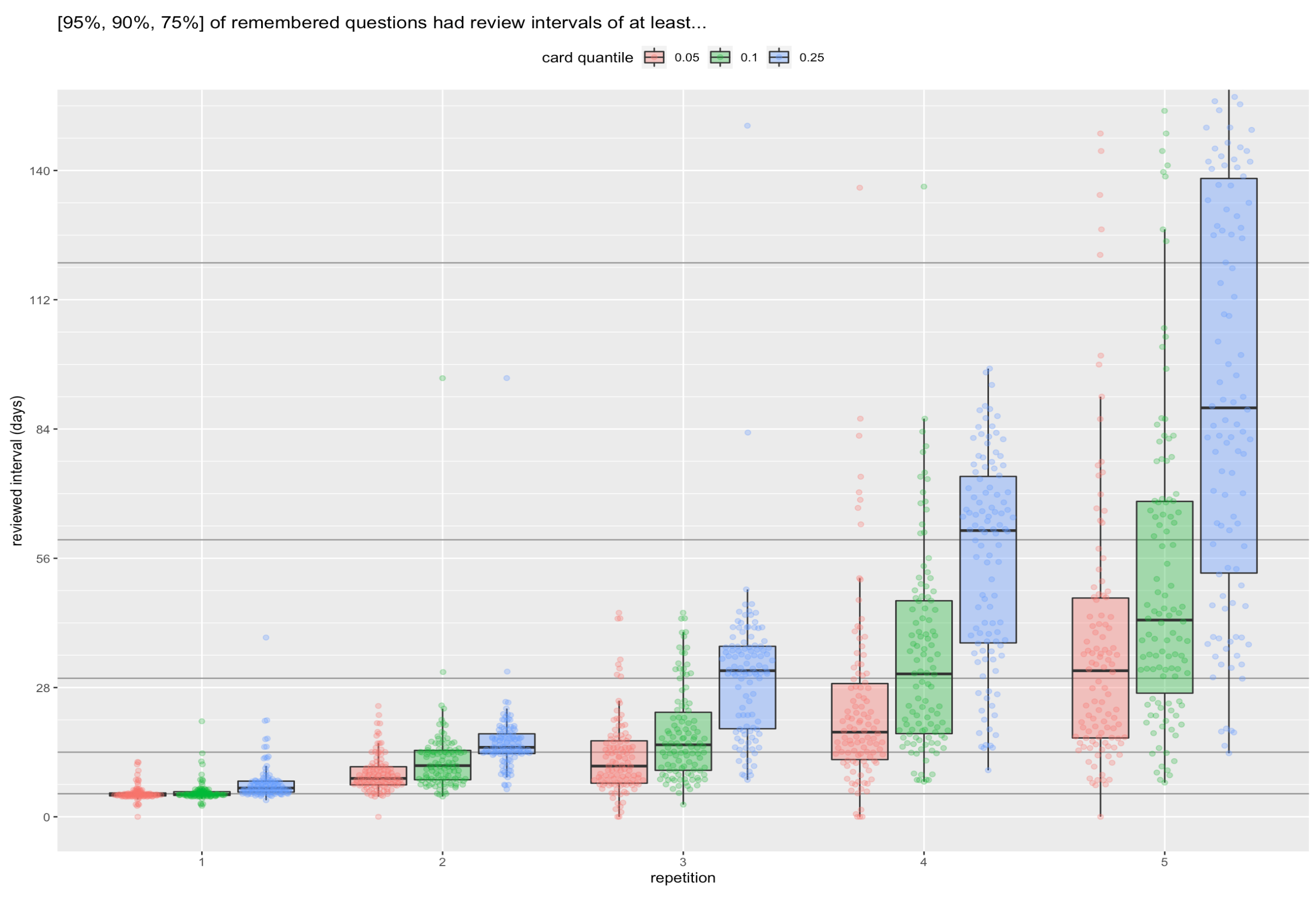

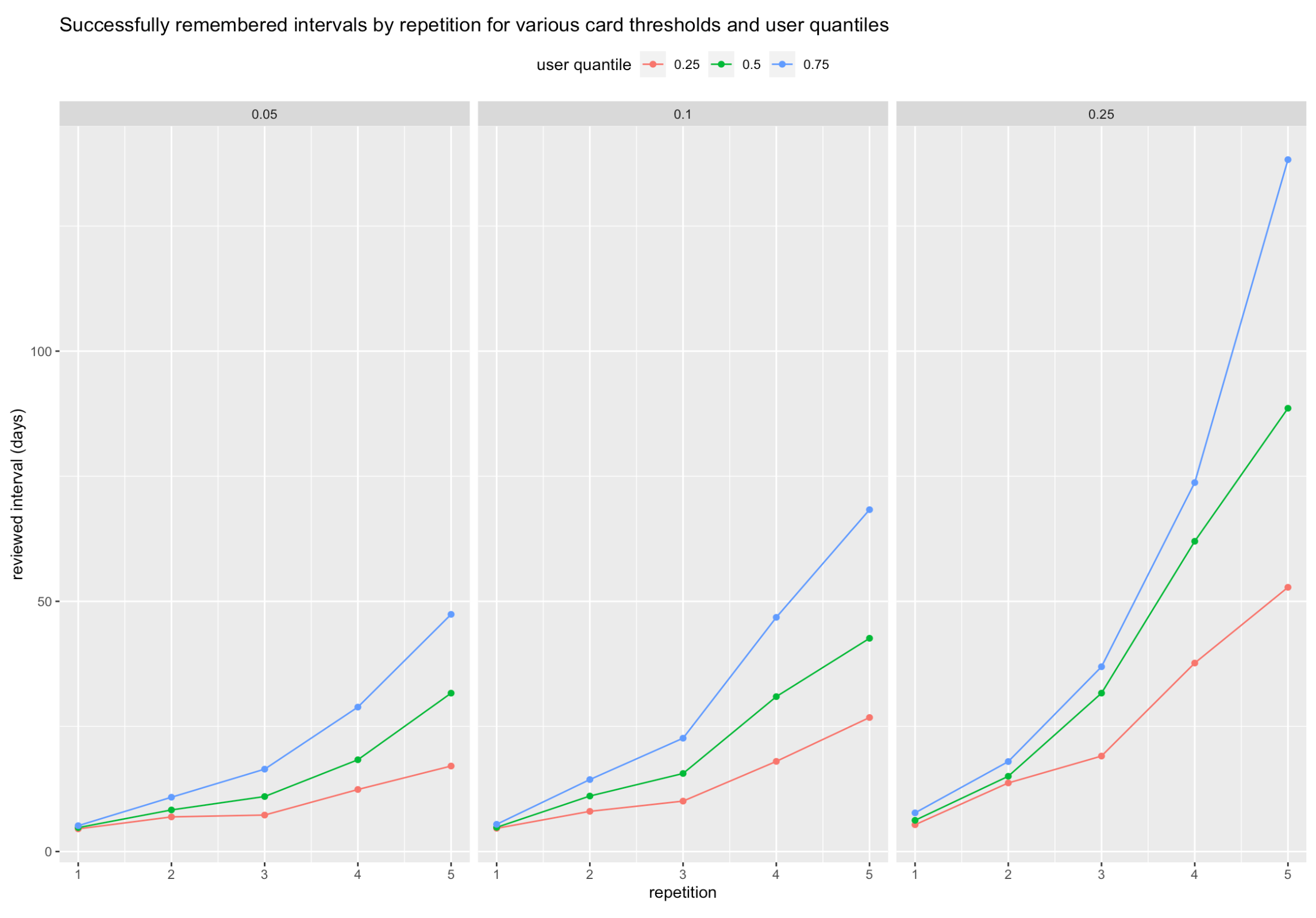

So, yes, to get the recall for 95% of the median user’s cards above 1 month, it takes about 80 minutes of review.

2022-02-16

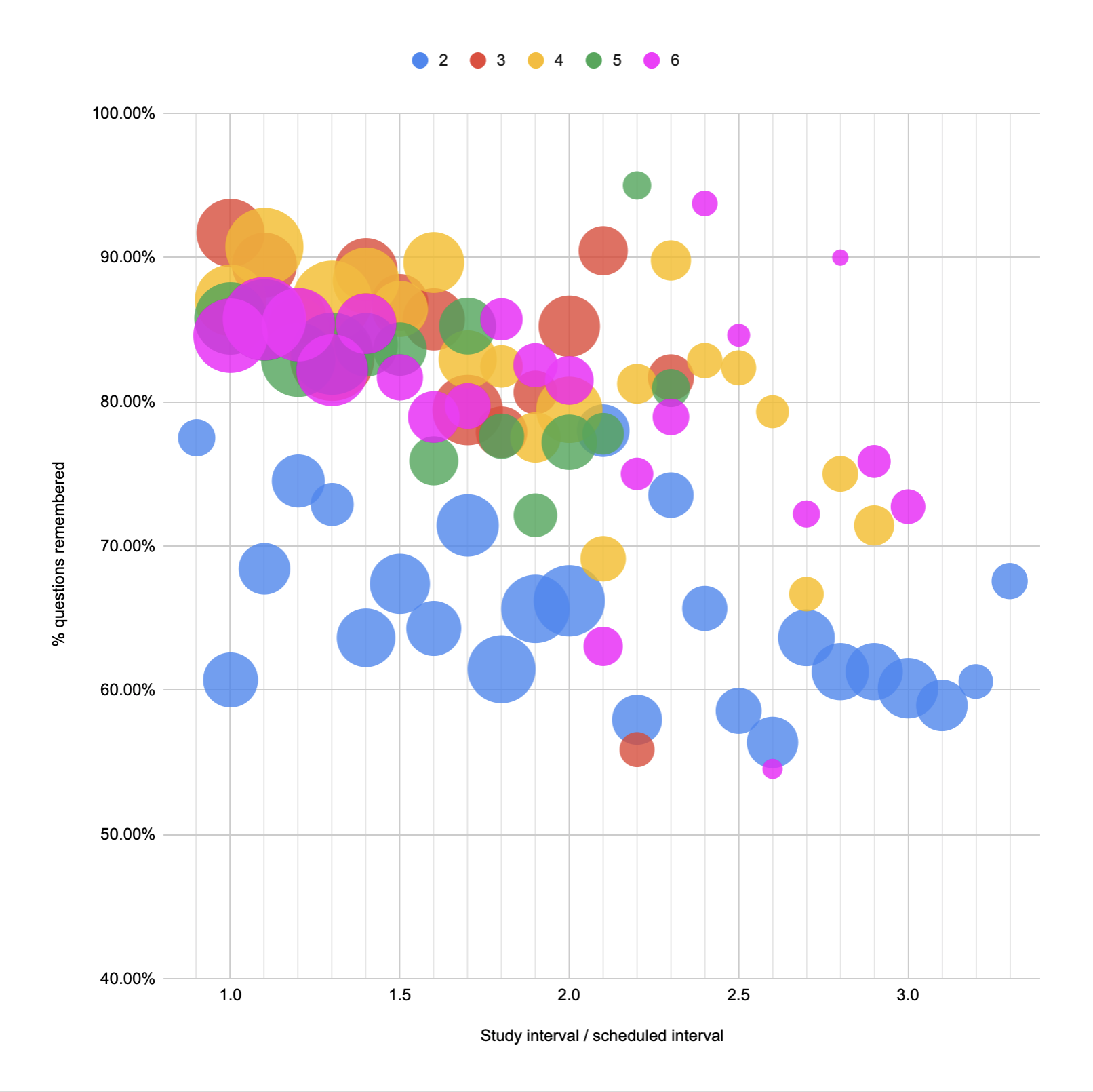

Starting more closely at charts of demonstrated retention, one thing I’m surprised to notice is how many of the points are much higher than they “should” be. In early repetitions, this can be chalked up to tardiness, but that interval expands to a month or more in later repetitions. And it shouldn’t be cumulative—that is, early tardiness should get reset on each session.

Looking at QC’s sources, I realized with a start that I’m still running the 2020/10 per-answer variation in scheduling (for aggressiveStart and original users). I’ve been running it all this time! How much impact has that had? (Several hours go by in R…) Looks like not much, practically speaking. Around 5-10% of readers are getting “under scheduled”, which means e.g. we’re probably understating demonstrated retention at 1+ month.

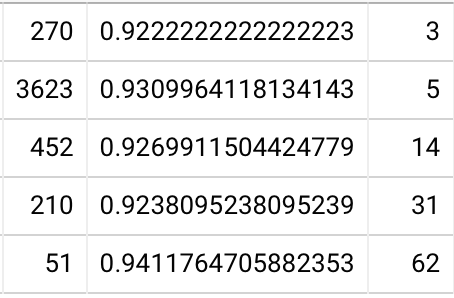

Alright. I think I’ve got a decent summary presentation for “does it work”, and this sort of also works for showing the exponential (maybe with some clean-up).

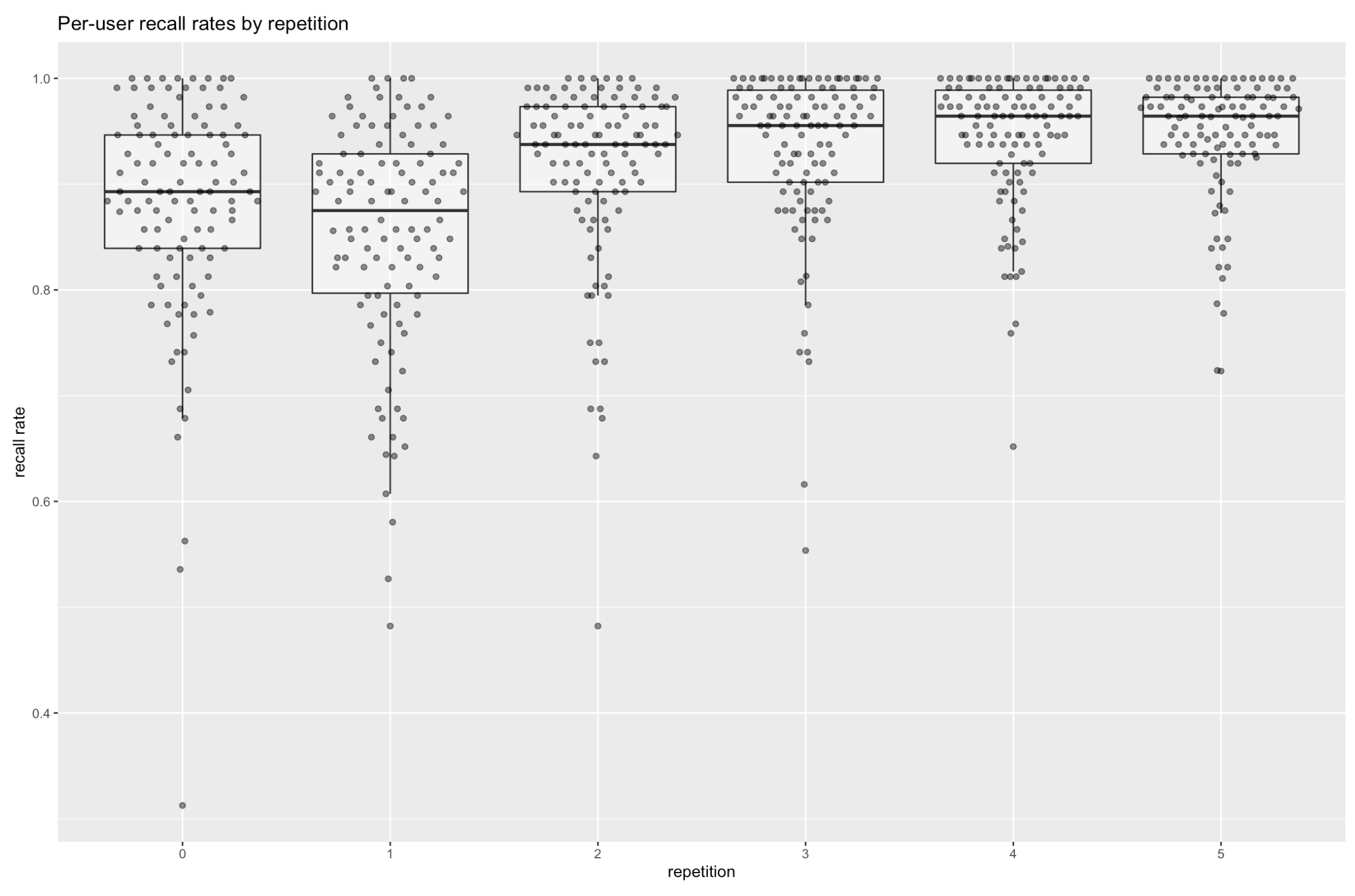

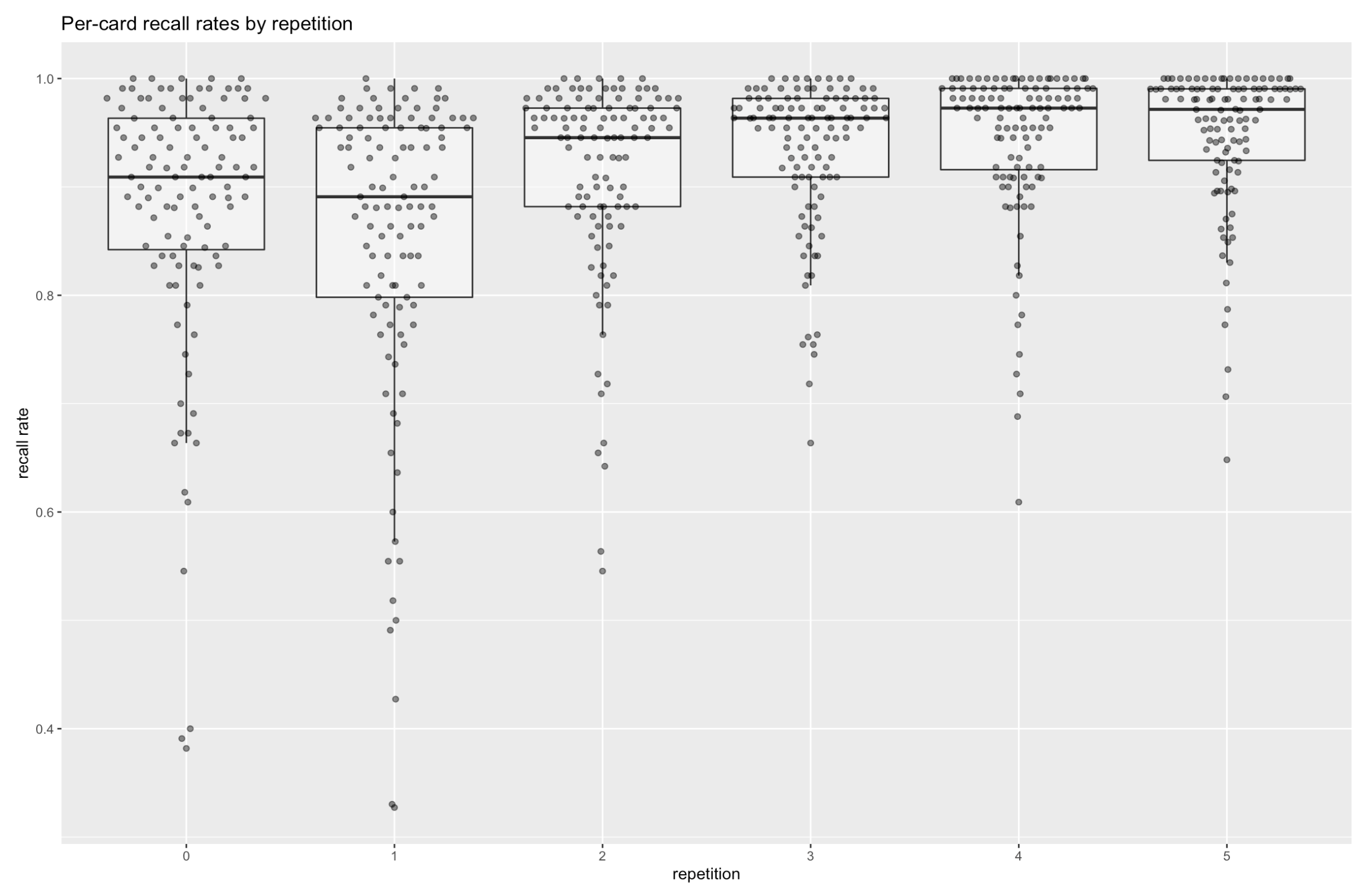

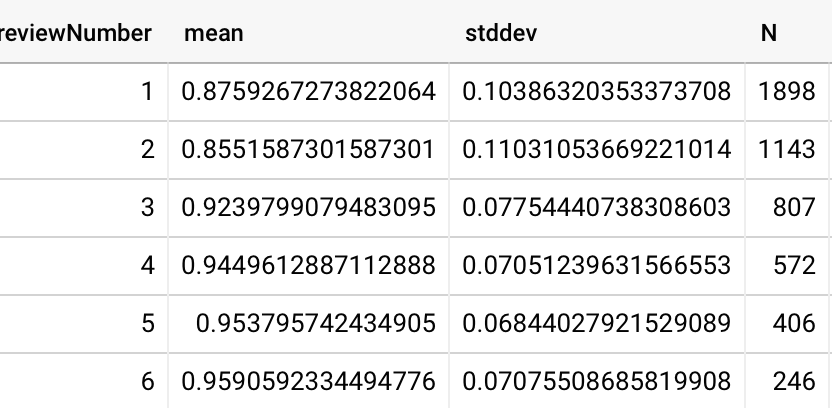

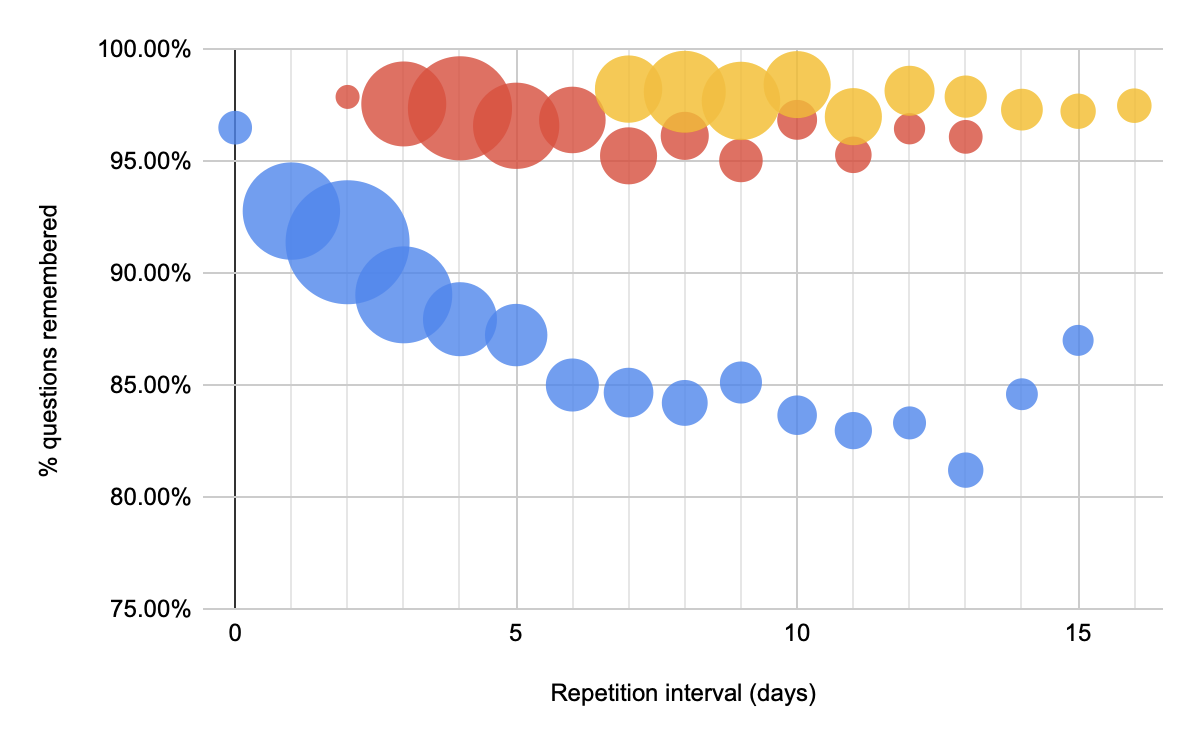

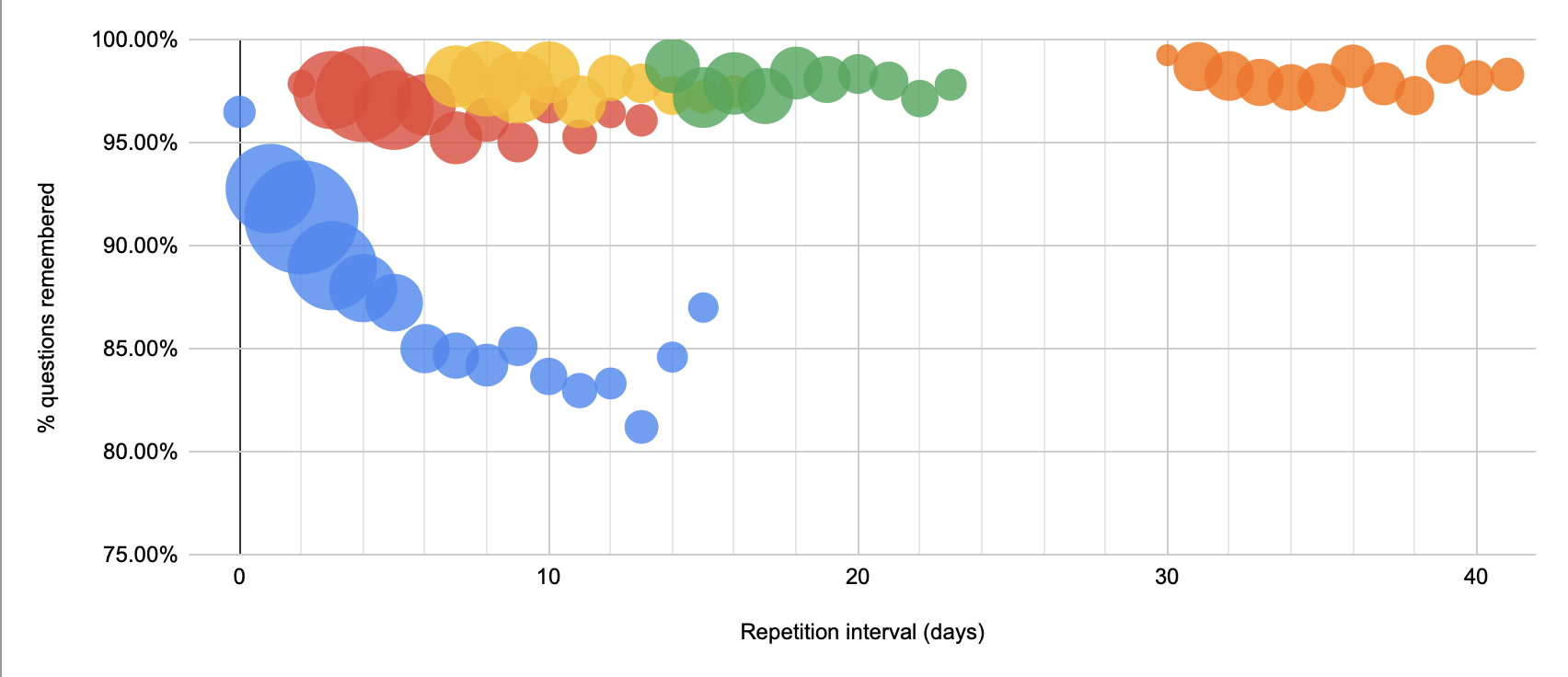

Recall rates rise with each repetition, even while the intervals between repetitions get longer and longer.

See 20220216132601 and 20220216132606 in qc-analysis.rmd.

The exponential is still there, buried in the noise of the latter plot.

20220216133411

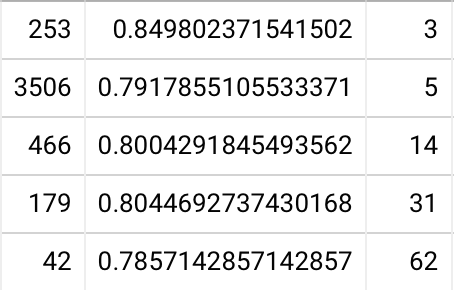

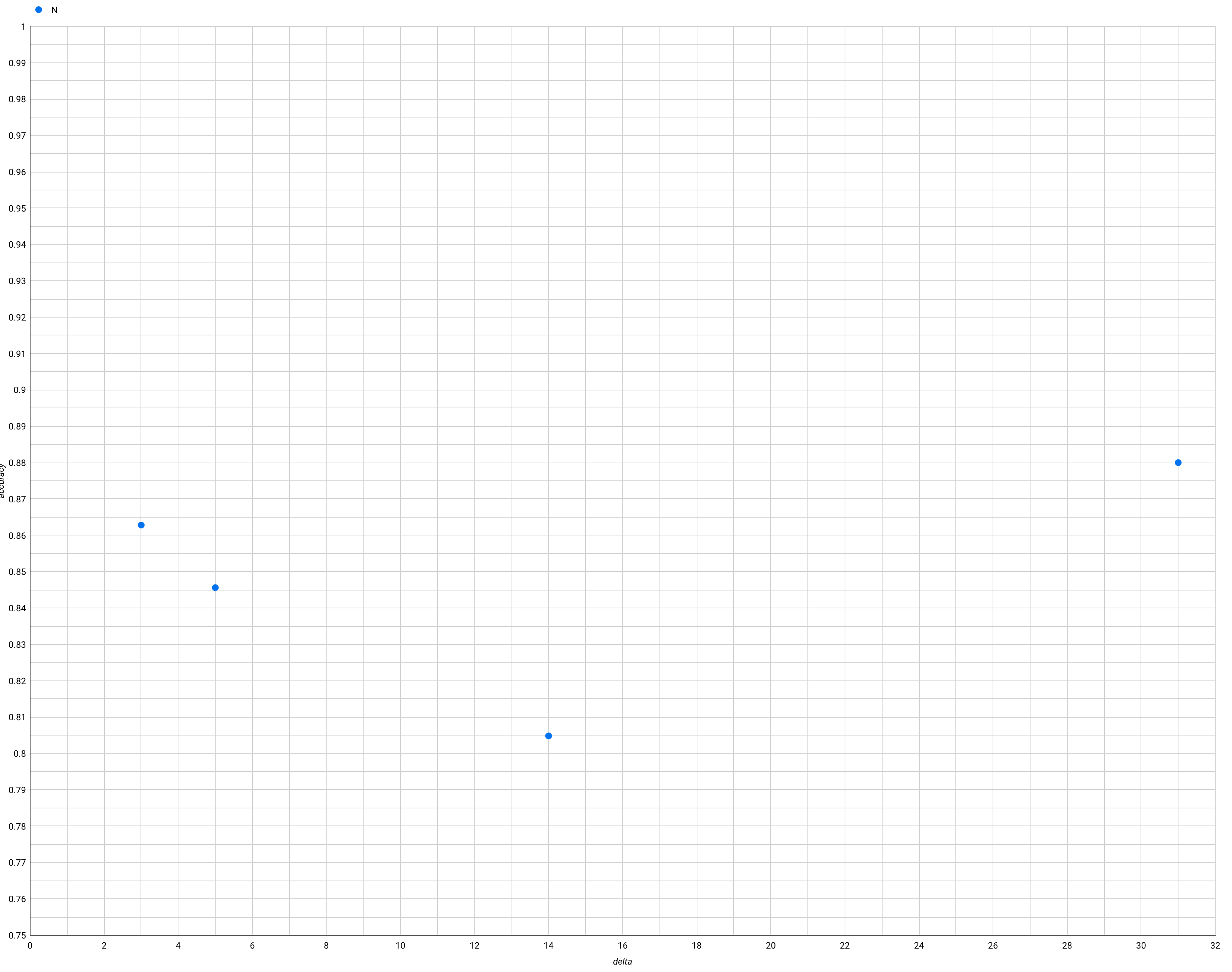

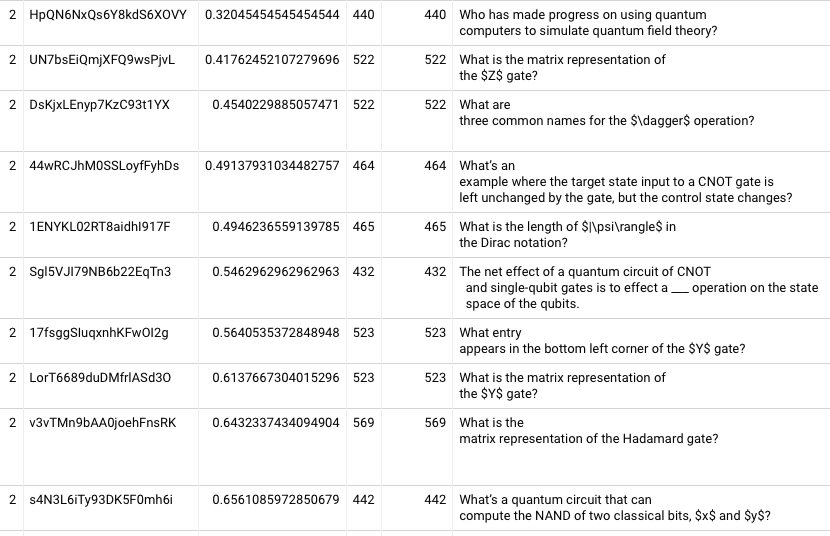

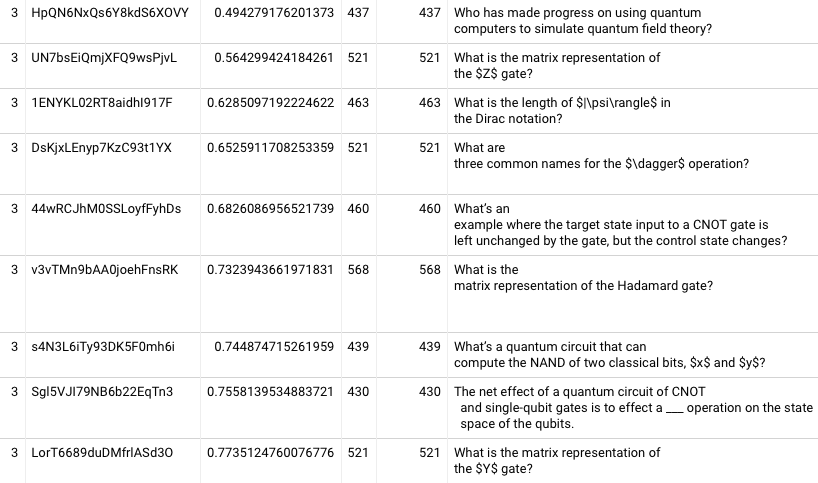

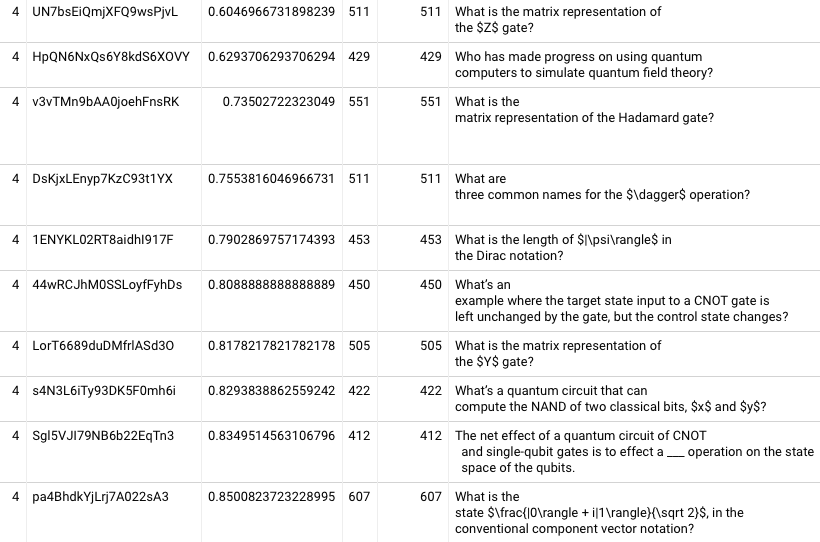

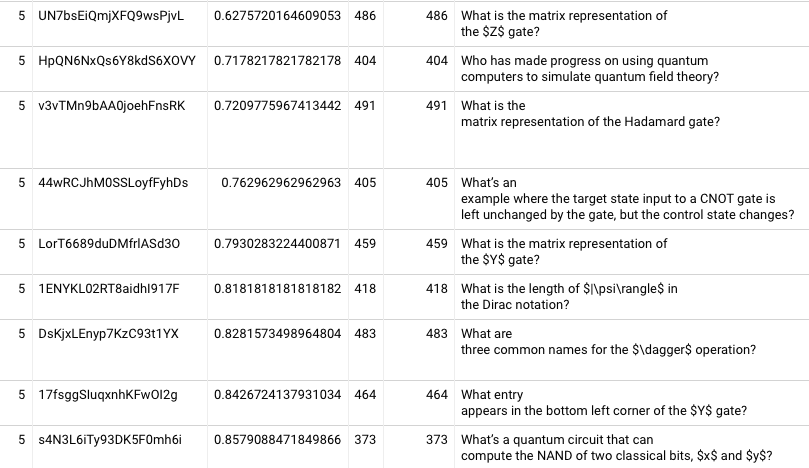

And the per-card recall rates look good, too. By repetition 3, only 5-8 have recall rates below 80%.

20220216133522

2022-02-14 / 2022-02-15

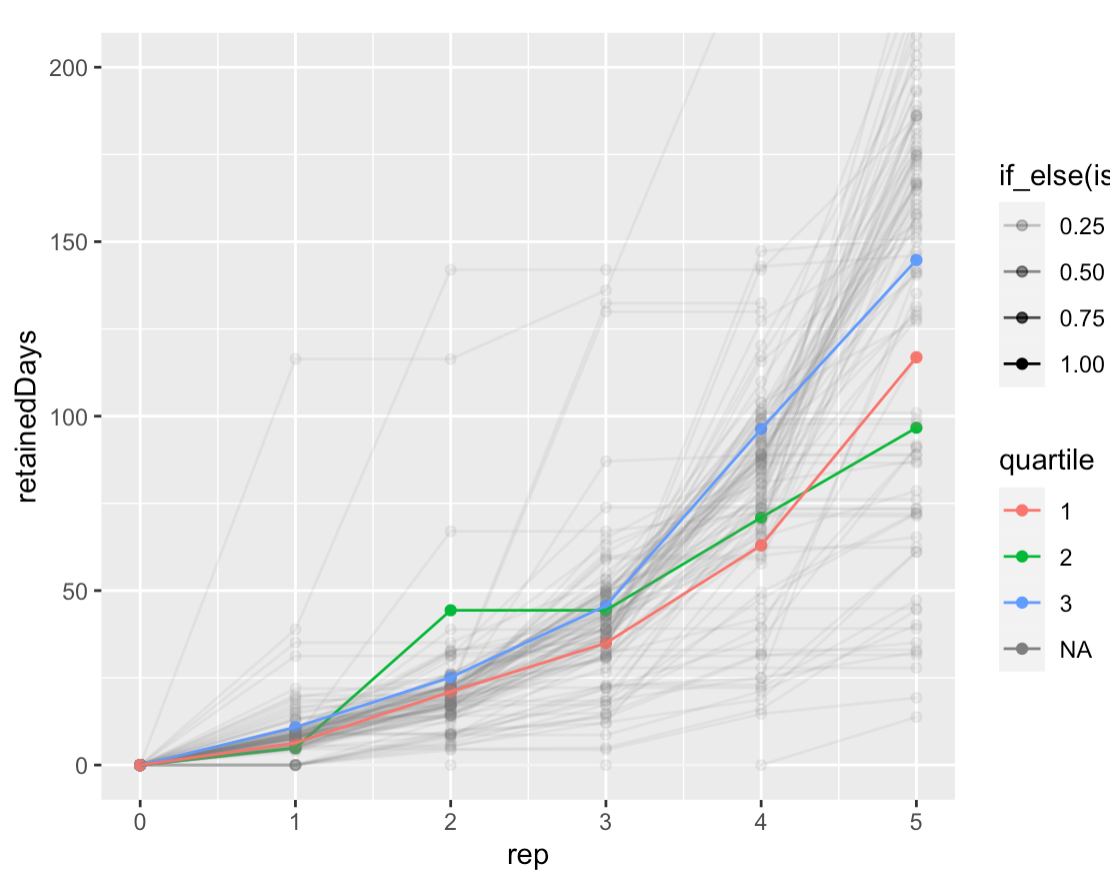

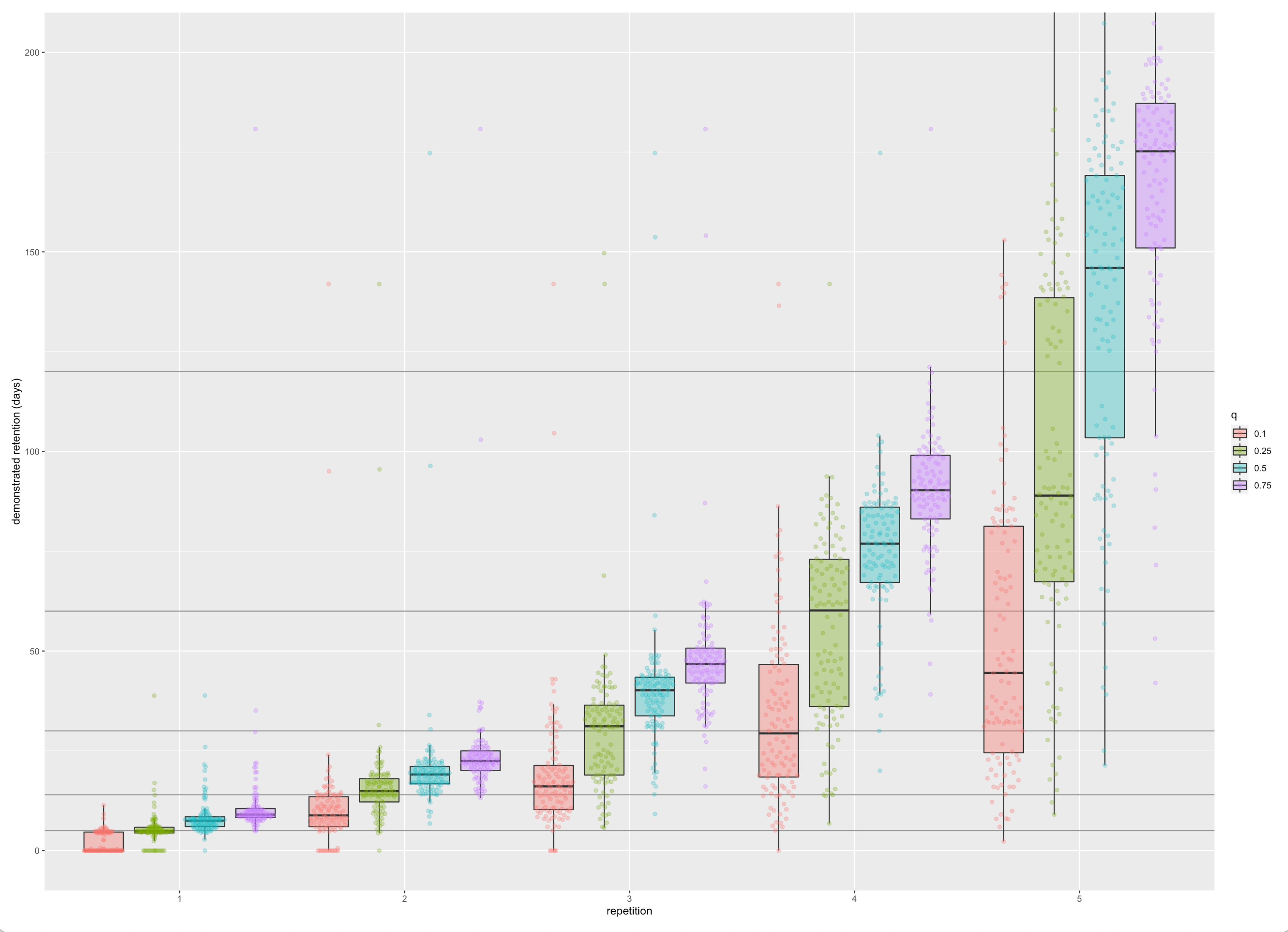

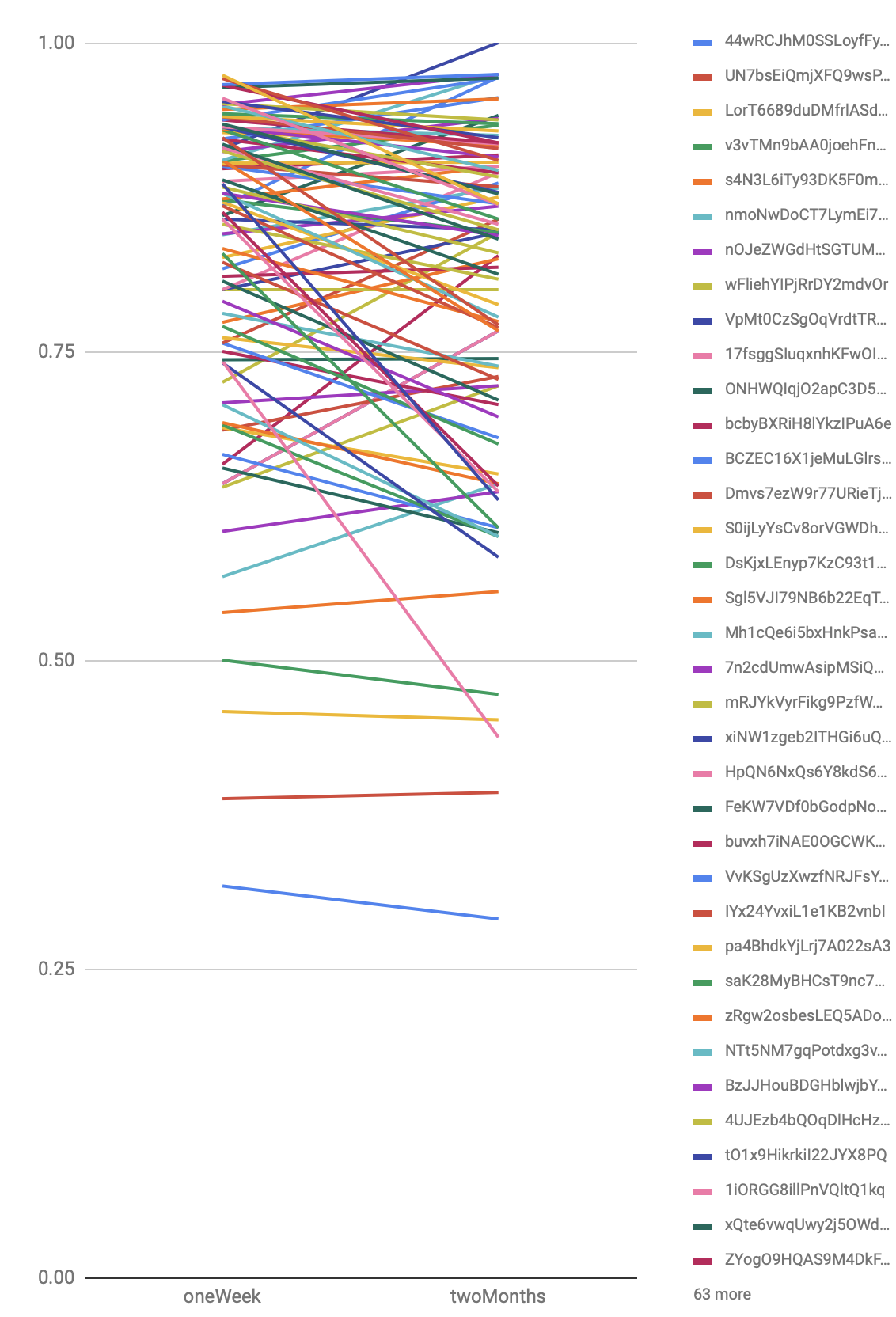

I’ve spent a couple mornings re-implementing demonstrated retention analysis in R. So I can now, e.g. plot demonstrated retention for all (qualifying) users of a single card (1iORGG8illPnVQltQ1kq); colors represent Q1-3 users by in-essay score:

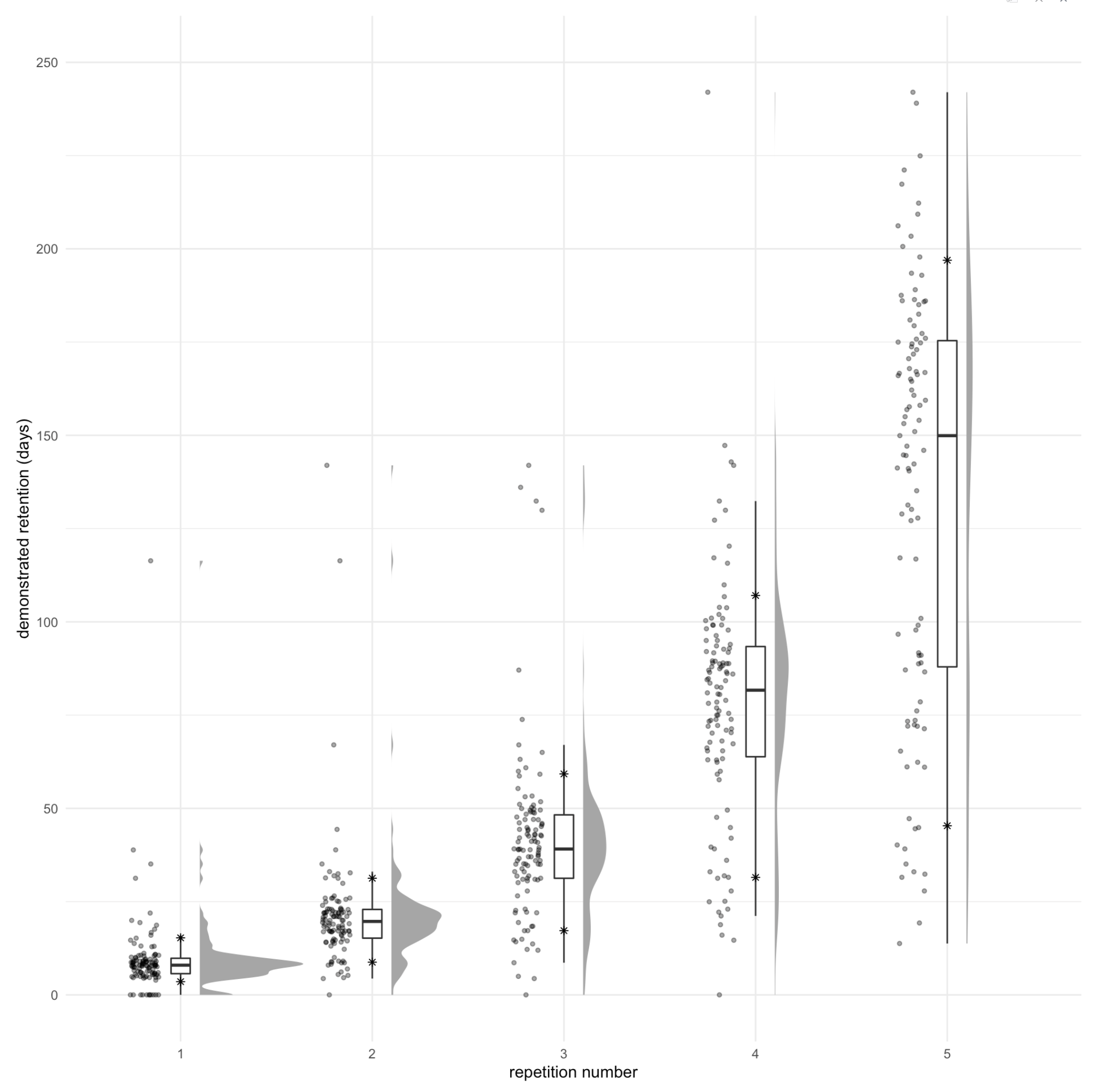

Here’s a Raincloud plot, which is much more instructive, though it loses the connections.

See chunk 20220215082229 in qc-analysis.rmd. Asterisks represents 10th and 90th %iles.

Notes on this figure:

- We see the bimodality we’d expect from the binary way these values are computed: the second “rump” represents people who forgot and were “left behind” the pack.

- The median user makes their way up the exponential as expected, but since we’re choosing the schedule, what this really represents is “the median user remembers the answer each time.”

- I also see exponentials in the 75th, and 90th %iles. And the 25th through the fourth repetition, but flattening at the fifth. But the tenth looks more linear. Linear is fine, potentially—it’s still ending up at a strong value.

- It’s misleading to “connect the dots” of these critical values here; they don’t represent the same user. So this graph is not suggesting that e.g. the 25th %ile reader has sigmoidal demonstrated retention.

- But it’s also not totally off base, given that there’s a lot of “jitter” in the sampling. In effect, these quartile stats are aggregating a bunch of different readers’ results in a way which should reduce the noise.

- These points really approximate a “lower bound” of these readers’ “safe intervals”. The bound is probably particularly loose for people in the “lower hump”, since they’ve been “held back” and could likely remember over longer durations, but they haven’t had a chance to demonstrate that.

- But in fact, these points are only sort of a “lower bound”, if we think about modeling recall as a stochastic phenomenon. Some of these points are “higher than they should be” because of “luck”.

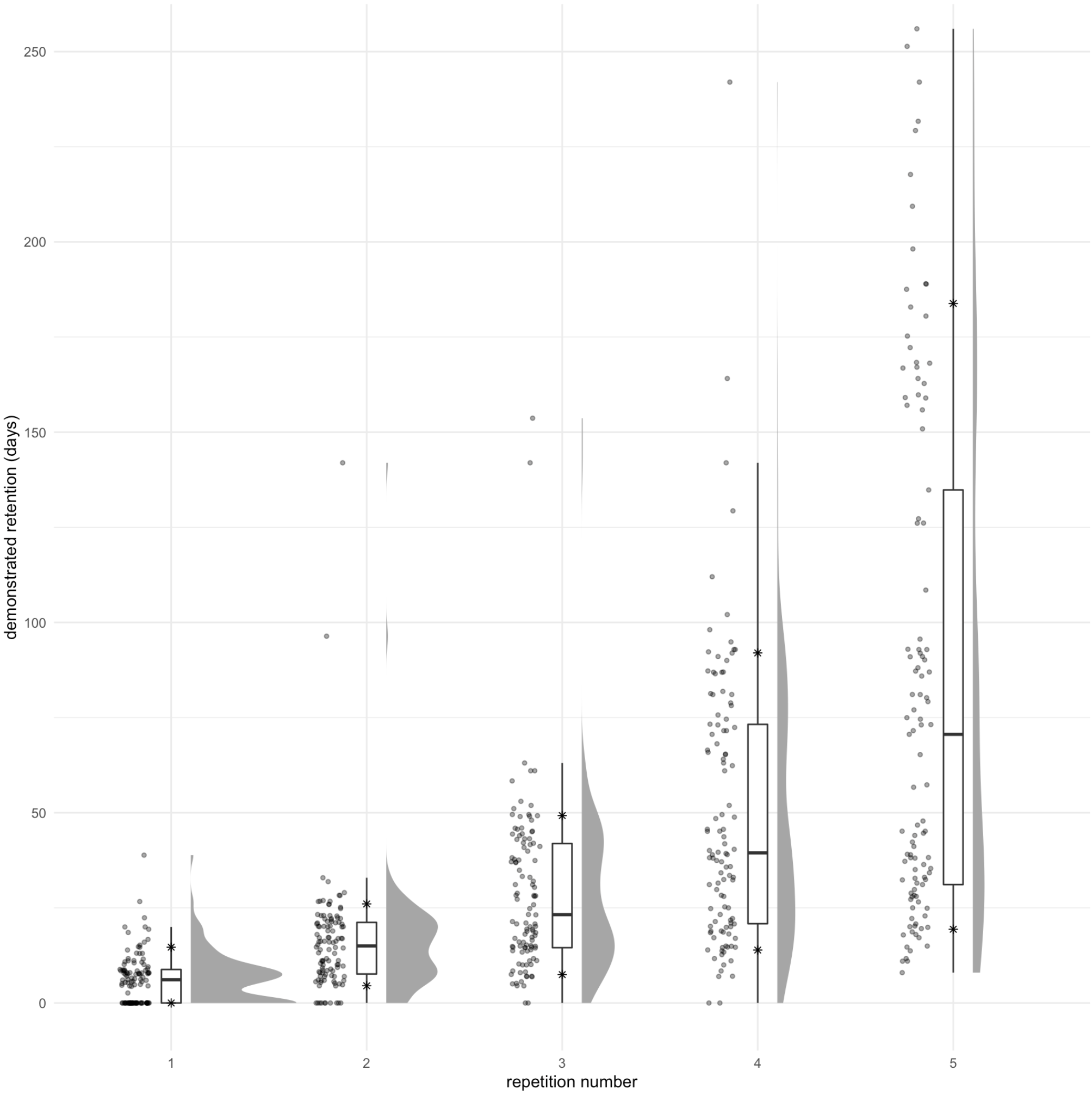

Just for comparison, here’s a “hard” card, 17fsggSIuqxnhKFwOI2g, which asks for one of the values of the Y gate:

Here the exponential has disappeared for the 25th %ile too. And the apparent median value for the 4th repetition and 5th repetitions are roughly half what they were for the other card. You can see multi-modality quite clearly, representing the different number of successful recall attempts.

It makes sense that these values should be lower, but I’m not quite sure how to interpret the differences which this figure presents. The 90th %ile values are pretty close in these two figures. The second is a bit lower. But that’s not meaningful: in both cases, the 90th %ile just represents a reader who remembered the answer on each attempt. And so on their 5th repetition, they’d be asked to remember after 120 days. The true attempt interval depends on compliance, batching, etc. Noise, really.

The apparent exponential is also “forced” by our system. In truth, the “safe interval” for many of these users at the first repetition is probably in the weeks or months. So this graph exaggerates the relative benefit of practice, since the bound is likely looser at earlier repetitions.

And here’s the new schedule on the first card:

See chunk 20220215093014. Note the multi-modality. Not enough samples to examine many repetitions (just 58 readers here).

…

I think I’m conflating two goals: to answer “does it work?”; and to show some visual representation of Spaced repetition yields (what feel like) exponential returns for small increases in effort. The latter is much harder than the former. Maybe I can show the former in some very clear way, then gesture at the latter much more loosely.

How might I show the former? Ideally, in a way which doesn’t require models, which shows improvement over time / with more practice, and which obviously can’t just be chance? No need to also show the counter-factual here.

Ways to evaluate if “it’s working”:

- recall rates after two rounds of practice

- (doesn’t capture interval)

- recall rate at first attempt at interval X

- (doesn’t normalize for number of rounds of practice, encodes schedule)

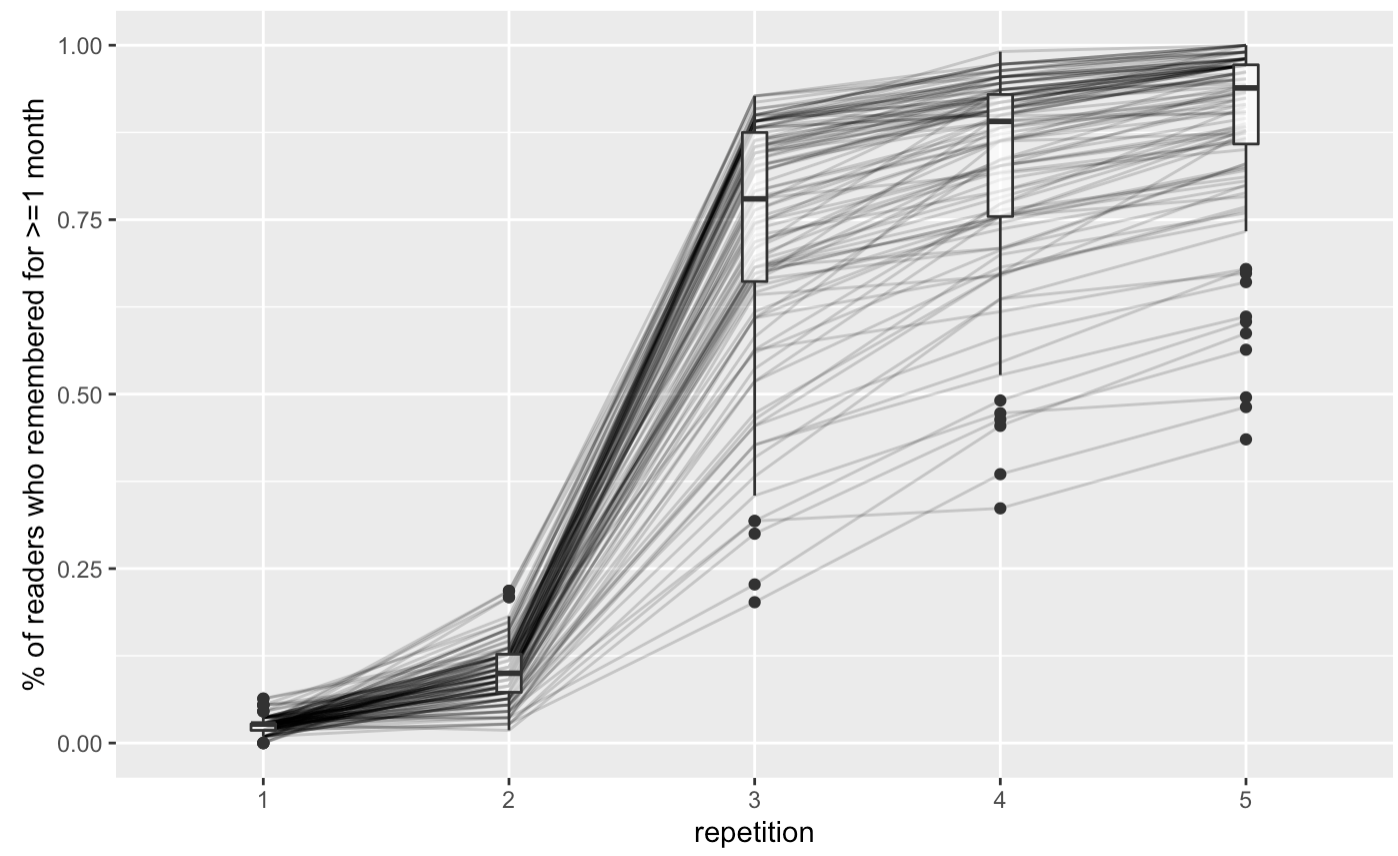

% of readers who have successfully demonstrated one month retention

- seems subject to claims of binomial chance, though maybe I could disprove that with some stats

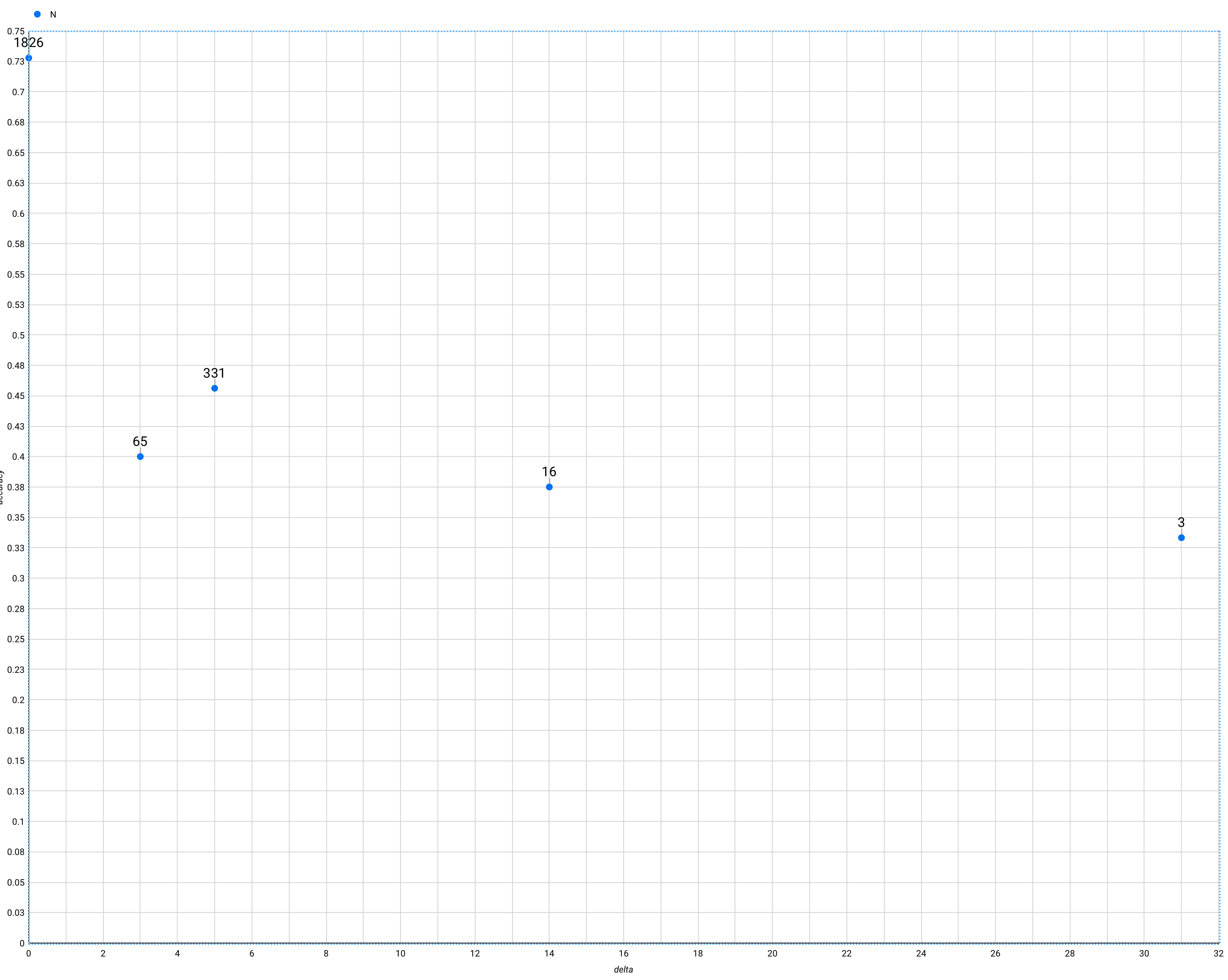

- here’s % of readers with 1+ month recall by card plotted:

20220215111327 in qc-analysis.rmd

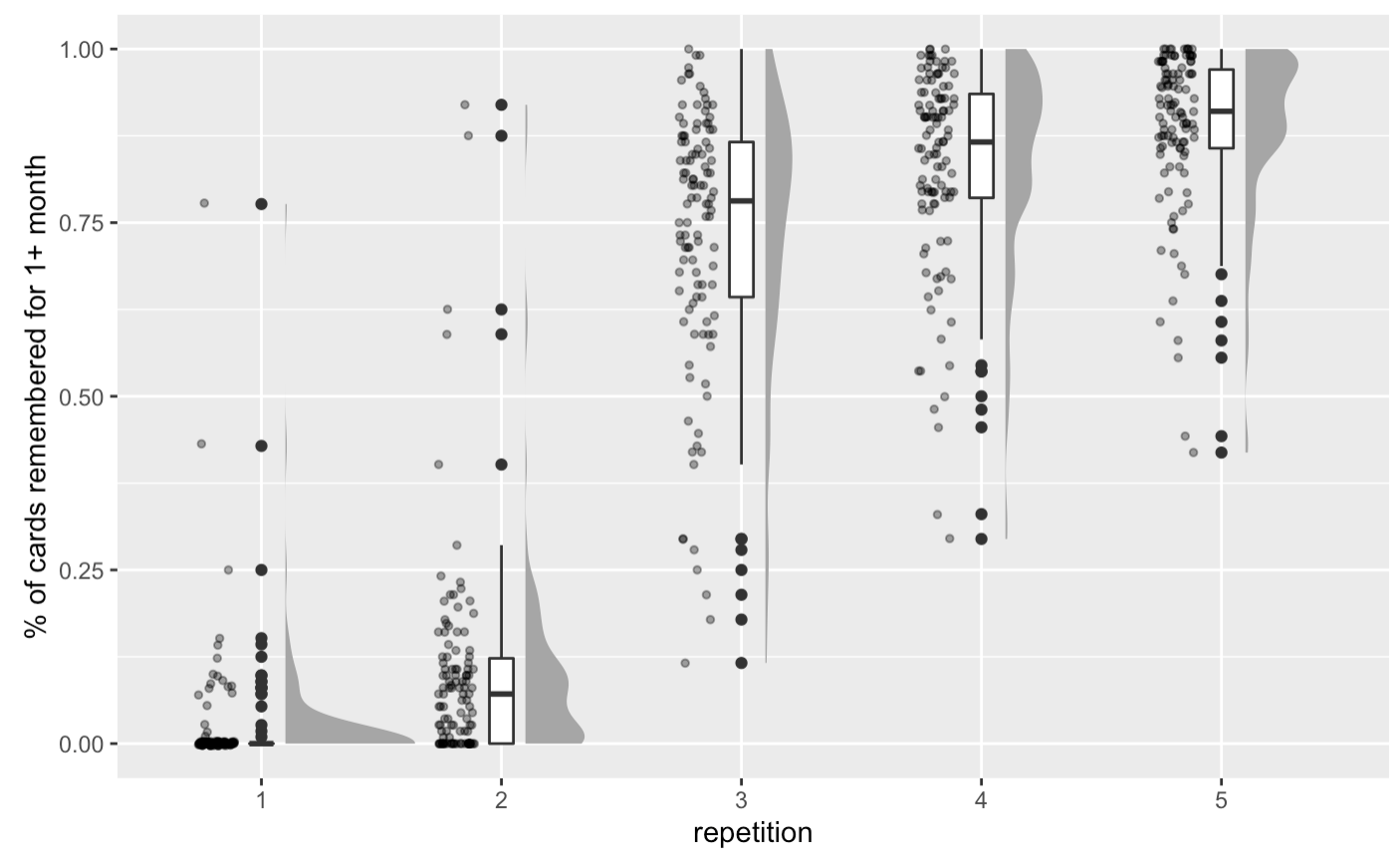

20220215111327 in qc-analysis.rmd - and here’s % of cards with 1+ month recall, by reader:

20220215111707 in qc-analysis.rmd

look at the median / 25th %ile reader… what’s their situation?

- demonstrated retention at various card %iles

20220215124135

20220215124135 20220215124146

20220215124146

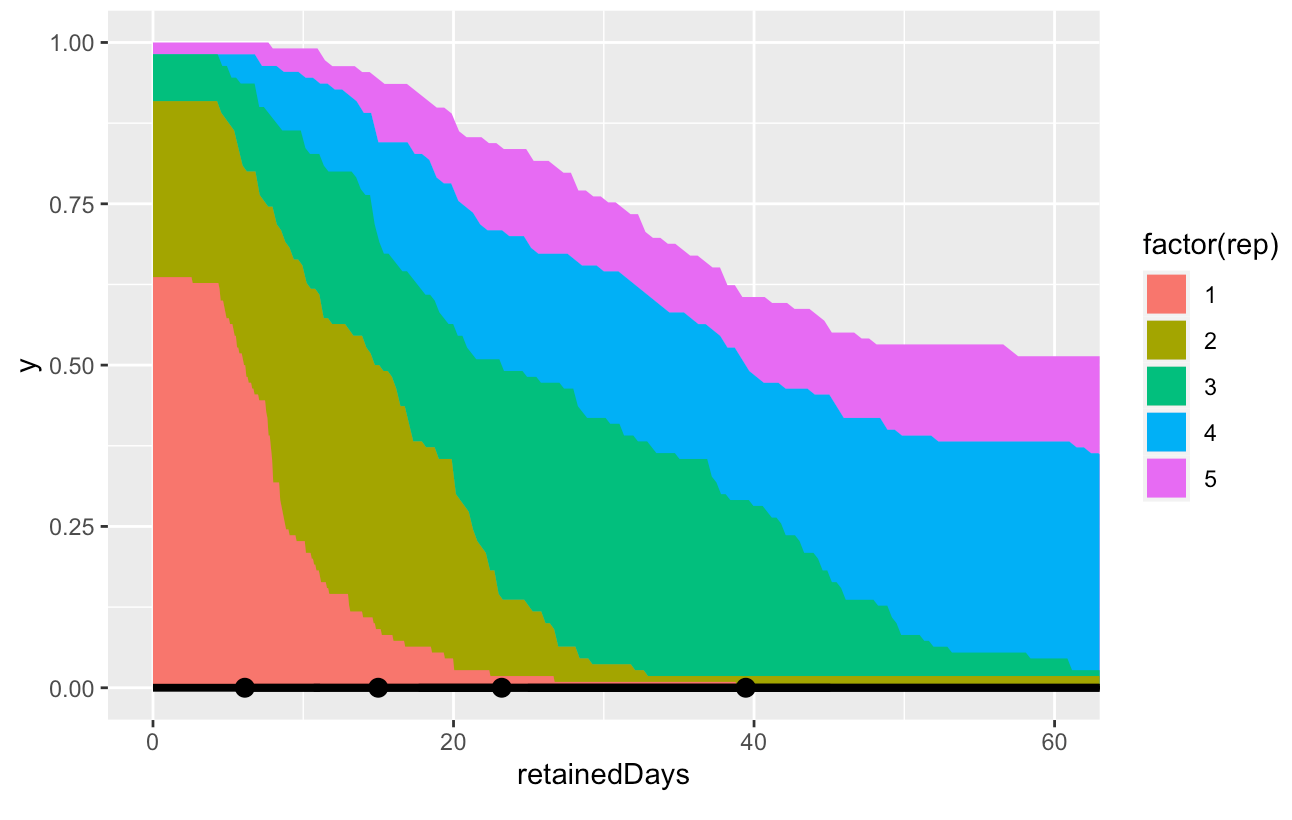

- What % of readers achieve X days of demonstrated retention, by repetition?

- Here’s one plot addressing that for

17fsggSIuqxnhKFwOI2g: 20220215162158

20220215162158

- Here’s one plot addressing that for

2022-02-09

Let’s say that I had Giacomo Randazzo’s memory model implementations, and I ran them on QC data. What kind of narrative could I write? Would I buy it?

One way to evaluate these systems is to ask: how many repetitions are required to reach a high degree of stability? That is, until recall rates would still be quite high even after a month or two away? We can’t directly measure that, but we can predict it with this model, which does successfully predict reader recall elsewhere quite accurately.

Yeah… I guess I just don’t really buy this. I mean, it might be fine as a way of iterating on the system, or of comparing schedule A vs schedule B. Curve fitting doesn’t tell a very strong story. It’s certainly not the primary way I’d like to demonstrate the system’s success.

To broadly demonstrate the success of the system, I think I’d rather say something quite broad and intuitive. Ideally, it should surface an exponential which demonstrates that linear effort produces non-linear returns.

Alright… what is it that increases exponentially with linear practice? In expectation, the interval of time which one could “safely” wait without practice. We could call this your “safe retention interval.” A (lossy) proxy for this is the amount of time you’ve demonstrated your recall successfully. It’s not ideal because you could have demonstrated that recall by luck, and because it may actually understate your potential retention: you may have hit a given safe retention interval several repetitions earlier, but you didn’t get a chance to prove it.

Looking at the 10th %ile demonstrated retention seems to sort of approximate what we’re looking for, if we want a “safe” recall rate of p=0.9. … Is that true? Say that your entire library of cards really did have a safe retention interval of 1 month, with p=0.9. The most likely observation, then, would be 90% of your cards demonstrating 1 month, and 10% demonstrating some previous lower interval. However, it’s also somewhat likely that e.g. 87% of your cards would be demonstrated at 1 month, and 13% demonstrating some previous lower interval, as well as 93% / 7%. Looking at the 10th %ile, maybe these possibilities balance each other out?

The problem with naively looking at card %iles in demonstrated retention is that the cards absolutely aren’t uniform. The true “safe interval” for the 5th %ile card isn’t the same as that of the 50th %ile card.

Can I slice by card? Users are more uniform than the cards are. So looking just at card X, at repetition 5, I can say what % of readers have demonstrated retention at various levels. If 90% of “median-ability” readers have demonstrated retention above some level X, I guess I feel comfortable saying that the “safe interval” for those users is probably bounded below by X.

2022-02-08

New thought this morning regarding How to consider cost benefit tradeoffs of practice vs retention in spaced repetition memory systems?: can I roughly model both retrievability and stability by requiring:

- 90% retrievability at session N

- 90% retrievability at session N+1, one month later

This seems pretty good, except that I do still have the problem of inconsistent schedules. Say that I have 90% retrievability at repetition 2. The 10% who failed won’t get a chance to try again one month later—they’ll be asked to try again sooner than that. So the pool of one-month-later people will be skewed towards those with better memory performance. The same is true, to a lesser extent, recursively across the preceding sessions. The people who even have a shot at one month retention are skewed high-ability.

Can we approximate this by asking “how many sessions does it take for X% of readers to achieve one month retention?” I think there’s a relation between the two. One problem with this framing: say that for a schedule with an initial interval of one month, P_recall is 0.4. So I’d produce a statistic that says “wow! 40% of readers can remember even with this little reinforcement!” But that’s just a matter of chance. Accumulating these chance successes over time will produce a total of 0.9 after a few sessions even if the probability is low each time. With p=0.4, if they have 3 chances, they’ve got a 94% chance of success in at least one of those chances.

All this really makes me question my prior metrics of demonstrated retention. It’s good at least that Demonstrated retention reliably bounds future recall attempts on Quantum Country; that’s some evidence that what I’m seeing isn’t just a matter of chance. But I still feel I’m far from a good “is it working?” metric.

If P_recall is 0.5 at all stages, then after five repetitions, 96% would have demonstrated one week retention; 54% would have demonstrated two weeks; 27% would have demonstrated one month; 6% would have demonstrated two months. At P=0.7, we’d see 100%, 82%, 60%, and 30%, respectively. This feels pretty distorted. 20220208103440

Now, if I saw that among a specific set of users at a particular repetition, 90% of them were able to recall the answer correctly, I’d feel decent about suggesting that approximates P_recall=0.9. The difference, I guess, is the “multiple chances” element. The problem with using the smooth-brain sampling method I describe is that the cohorts get skewed by the dynamic scheduling. If everyone’s schedule were exactly the same, I think I’d believe in dumb sampling. Bluhhh. I guess I need to use a model to really get anywhere here.

Is there a smooth-brain way of assessing the system’s success, one which is resilient to “just try one month immediately lol”? I wonder if I can see the impact of stability on week-over-week retention at a first repetition of one month. For the “median” card, eugu7LKeiO34xF1ParBu, I get 82% / 93% / 91% for 4, 5, and 6 weeks, N=11/28/11. 20220208113105. For a “hard” card, 1ENYKL02RT8aidhI917F, I get 59% / 67% for 4/5 weeks, N=22/18. Eh.

Really, I’d like to find some way of measuring—or at least convincingly estimating—stability directly at repetition N.

2022-02-07

The trouble I’m having as I think about stability is the same as the one I was stuck on a year and a half ago: I’m trying to approximate the probability of recall by using recall rates within cohorts of users. That approximation works to the extent that an individual user in the cohort is a good approximation for the whole cohort. I can control for more degrees of freedom now than I could then (i.e. through different schedules, and because I now see that Quantum Country readers who complete first review don’t have strongly skewed in-essay accuracies). But the fundamental problem remains.

So one thing I’ve been doing is to look at cohorts along particular paths—e.g. those who have successfully remembered three times. Such cohorts will be more internally similar than less-conditioned analogues, but this approach makes it difficult to talk about the schedule as a whole. Because the review schedules are dynamic, cohorts diverge in their timing fairly rapidly—we’re no longer comparing like with like.

One alternative is to ask threshold-based questions. For instance: how many repetitions did it take to demonstrate one month retention, with high stability thereafter? This question must be refined in several ways.

Say that the true value is something like “on their Nth repetition, the median user will attempt recall over 1+month and succeed with p=0.9”. How can we translate this into things we can actually measure? At least without a model, we estimate probability with recall rates, so we can never make exactly this statement.

But we can maybe say something like: “on their Nth repetition, 90% of readers whose in-essay recall was in Q2/Q3 succeed in demonstrating recall of 1+ month.” We can solve for N (if such a solution exists). We can specify for what % of questions this statement holds. This is satisfying enough, for now, I think.

The second piece is stability. Two framings that come to mind:

- after the repetition in which they demonstrate one month retention, recall falls slowly: one month later, they still have 90%+ odds of recall

- at the time of that successful one month repetition, we see very little decline in recall over the subsequent weeks (this is a stricter standard)

The latter roughly corresponds to “sufficient stability that a one month delay produces only a 10% drop in recall,” which sort of matches our intuitions that it’s built durable recall.

One we can compare schedules is to ask: for what % of cards is this true? We can also look at the extent to which it’s almost true.

So the criteria I’m looking for in a repetition N is:

- of readers with in-essay accuracies in Q2 or Q3…

- 90%+ are attempting an interval >= 1 month

- 90%+ of those attempting succeed

- this is true for X% of cards

- … and forgetting is slow in the weeks thereafter

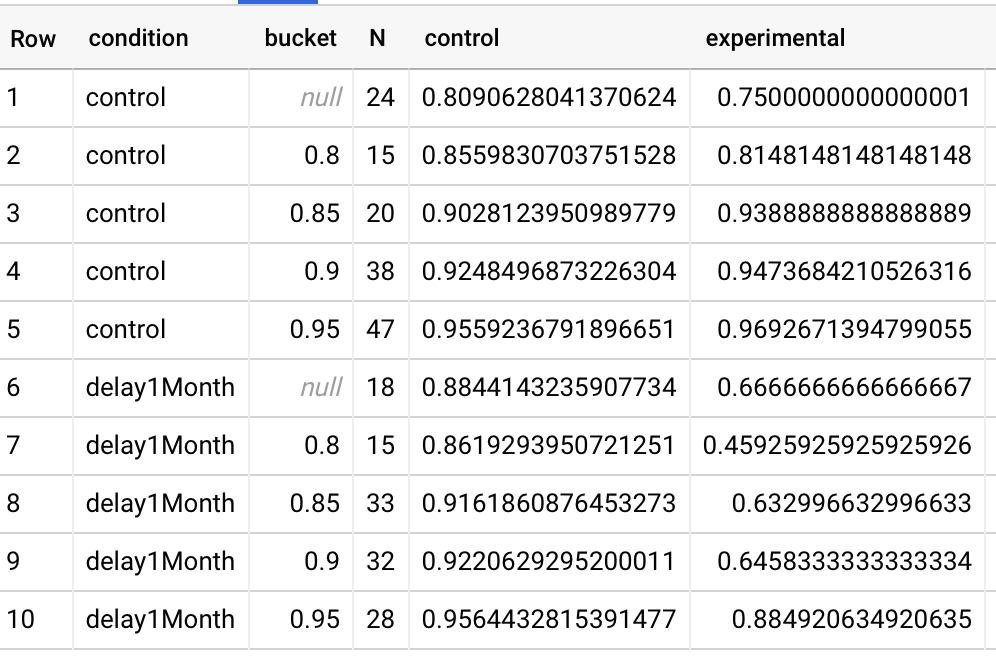

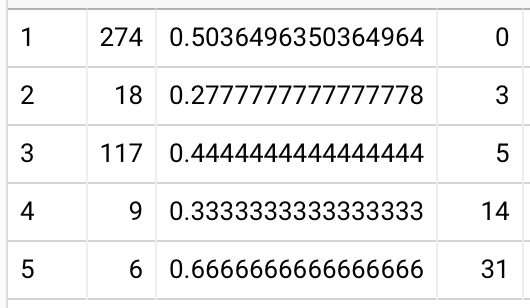

First tried this with 17fsggSIuqxnhKFwOI2g, and it looks like it never converges. 20220207122212. Trying the card with the median first review accuracy, eugu7LKeiO34xF1ParBu (“The simplest quantum circuit is a wire”), I see the repetition 5 almost qualifies (86% of readers trying for 1+ month; 99.7% of those succeeding), and repetition 6 does qualify. Constraining just to aggressiveStart schedule, repetition 3 almost qualifies (88% / 99%) and repetition 4 does qualify (92% / 100%). OK! Now we’re getting somewhere.

Let’s look across all QCVC cards. By repetition 4, the median card has 88% of readers trying for a month; 85% both try for a month and succeed. 60th %ile crosses 90% for the joint probability. The 20th %ile card has 70% of readers trying for a month; 66% both try and succeed. The 10th %ile card has joint probability of 56%. Not controlling reader pool very carefully here; N varies from 132 to 284 depending on card. I’ll tighten this down later. By fifth repetition, the median card has a 92% joint probability, and 40th %ile has 87%. 20220207125100

It’s nice to pin down these figures, but they’re actually not that encouraging. Only roughly half of cards qualify as “learned” by repetition 5? I wonder if we’re seeing a lot of population skew in these samples because readers who forget cards will have extra repetitions. Let me try looking just at people who completed 4 repetitions of everything. 20220207130354 Alright, this looks more promising. In the fourth repetition, 30th %ile is at 85% joint probability; 40th %ile is at 87%; median at 91%. In the fifth repetition, the 30th and 40th %ile joint probabilities are 88% and 92% respectively. Restricting to the subset of users who completed five repetitions, the results look pretty much the same.

But it’s also worth noting that among people who are attempting 1 month intervals at the fourth repetition, 92% of them are successful at the 10th %ile. It’s just that only 76% of people are attempting to recall the 10th %ile card. I’m having trouble figuring out how to think about this. The joint probability is an underestimate because it fails to include some people who would remember at one month if they had a chance to try; the conditional probability is an overestimate because it excludes people who failed to remember and needed a make-up session or two. So, I guess at least they’re upper and lower bounds. But their diversion suggests that my attempt to “control for reader ability” isn’t working very well. On the third repetition, the conditional probability at the 10th %ile is 88% (but only 60% got the chance). Now, the third repetition is the first time you could have a shot at this in that schedule without being late / non-compliant.

I think I’m too high up the ladder of abstraction. “Compared to what?” Is this working well? Do these numbers really indicate “stability”?

2022-02-04

One way to think about How to consider cost benefit tradeoffs of practice vs retention in spaced repetition memory systems? is to try to use stability as a key metric. Intuitively… if we could actually measure this hidden variable, we’d like to minimize the amount of effort expended to achieve a high degree of stability for an item. We don’t necessarily need to see the reader prove that they have high retrievability if we can demonstrate high stability—and if we can prove that stability is sufficiently predictable.

How might I model stability from the data I have, without using some complicated regression model? Mozer, M. C., Pashler, H., Cepeda, N., Lindsey, R., & Vul, E. (2009). Predicting the Optimal Spacing of Study: A Multiscale Context Model of Memory. In Y. Bengio, D. Schuurmans, J. Lafferty, C. K. I. Williams, & A. Culotta (Eds.), Advances in Neural Information Processing Systems 22 (pp. 1321–1329). uses different constants in its leaky integrators’ activation strength according to recall success. Mozer, M. C., & Lindsey, R. V. (2016). Predicting and Improving Memory Retention: Psychological Theory Matters in the Big Data Era. In M. N. Jones (Ed.), Big data in cognitive science (pp. 34–64). incorporates successes/failures into the review history via separate learned weights. I don’t love either of these approaches.

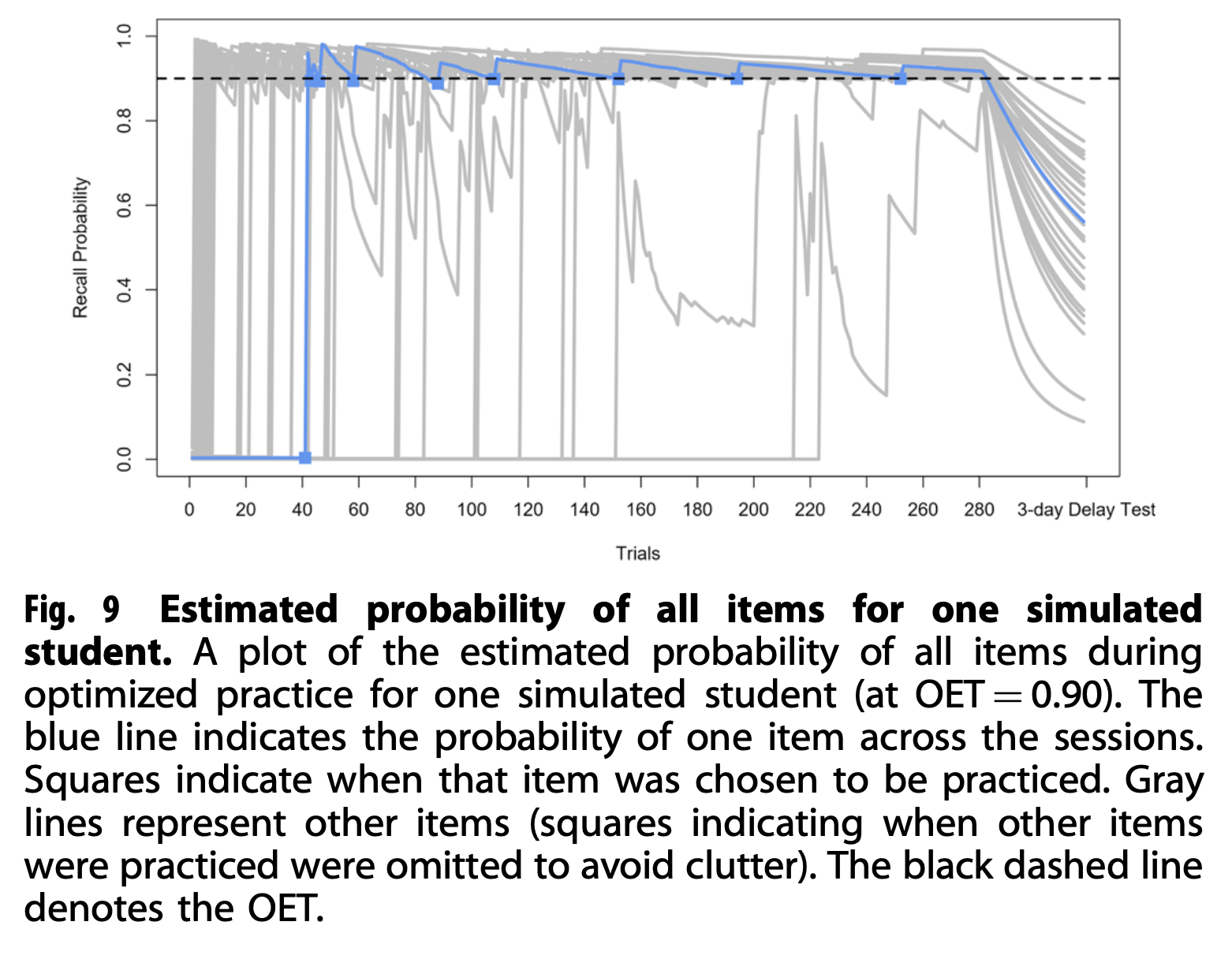

This graph from Eglington, L. G., & Pavlik Jr, P. I. (2020). Optimizing practice scheduling requires quantitative tracking of individual item performance. Npj Science of Learning, 5(1), 15 is tantalizing… can I produce something similar?

Well… one thing I notice about this image is that once stabilities get pretty long, they look surprisingly linear. And they’re probably mostly linear in the >0.9 regime? Maybe I can just approximate stability linearly to start.

If we model retrieval with a power law, i.e. m(1 + ht)^-f, then stability must be encoded in f. But it’s interesting that in Two-component model of memory, by contrast, it’s modeled with exp(-t/S), i.e. with time in the exponent.

Alright… enough theoretical mindset. What can I see? Honestly, not much. Looking at xiNW1zgeb2ITHGi6uQtg (a “hard” card), for readers who remembered in-essay and in their first session, and bucketing by clock-time review interval, I see recall rates of 93%, 87%, 95%, and 80% for 2, 3, 4, and 5 weeks respectively. So… well, OK, these people could double their interval without suffering much. That’s not so outlandish. 20220204123058 Using 1ENYKL02RT8aidhI917F, which has a 42% in-essay recall rate, I get similar figures.

OK, but those two cards are a bit wonky because I used them for the 2020-01 trial. Trying 17fsggSIuqxnhKFwOI2g, about the value of the Y gate. For third review (first two successful), I get 84%, 82%, 88%, 94% for 2, 3, 4, 5 weeks. (Why increasing?? Interference?) For fourth review (first three successful), I get 98%, 89%, 90%, 78% for 5, 6, 7, 8 weeks.

For people who forgot in-essay and then remembered in their first review, I see a clear example of poor stability: 81%, 79%, and 68% at 2, 3, and 4 weeks, respectively. For xiNW1zgeb2ITHGi6uQtg, I see 81%, 76%, and 67%. For 1ENYKL02RT8aidhI917F, 67%, 70%, 68%. 20220204125007

Maybe I can compare schedules by looking for “the most efficient schedule necessary to reach 90%+ at 1+ month, with slow declines week-over-week thereafter”.

1ENYKL02RT8aidhI917F doesn’t seem to quite be passing that test on Quantum Country right now: 88%, 85%, 88%, 87% for 5, 6, 7, 8 weeks. But it’s close. So maybe a good place to start? 20220204154812

2022-02-03

Looking at the data from 2020-01 Quantum Country efficacy experiment, I see that people in the bottom quartile of in-essay accuracy have a recall rate of 23% and 25% respectively for the two hardest questions with no intervention at all (N=53/60). 20220203085026 Nice to see that pattern continue.

OK, so if you’re in the bottom quartile, you really are gonna struggle without aids. Can we say the opposite—does the 1+3 pattern work for the bottom quartile? I see 75% / 60% (N=4/5, alas; 20220203085524)

What if we just look at repetition 3, 4, 5, etc? Does it converge? No! For the bottom quartile users, it doesn’t! 20220203091948 Repetitions 3, 4, and 5 are 66%, 75%, 73% / 70%, 76, 78% for the two hard cards at the bottom reader quartile. That’s pretty interesting.

(BTW, in-essay accuracy for these cards is 64% / 60% with no constraints on user pool)

The story here, as it’s shaping up:

- Hard cards need support to be recalled at one month:

- Hard cards have low recall with no support (42% / 42%)

- With in-essay practice and make-up sessions, recall rates rise to 71% / 68% at one month

- With an extra round of practice at one week, recall rates rise to 90% / 90%.

- Easy cards don’t need much support:

- Easiest card in my sample has 89% recall rate with no support

- With in-essay practice and make-up sessions: 91%

- With an extra round of practice at one week: 100%

- But the situation also varies by user. For people whose in-essay accuracies were in the bottom quartile of in-essay recall rates…

- The hard card figures are: 23% / 25%; 62% / 58%; 75% / 60% (tiny N)

- The easy card figures are: 79%; 93%; 100% (tiny N)

- Whereas for people in the top quartile of in-essay recall rates (>= 95.7%)…

- The hard card figures are: 56% / 65%; 67% / 86%; 100% / 100% (tiny N)

- The easy card figures are: 97%; 87%; 100% (tiny N)

There’s an obvious inconsistency in that very last sequence. Could be due to the folks in the 2020-01 trial getting reinforcement from other cards… not sure.

But the overall counterfactual story is shaping up here with this data. Everyone needs support for hard cards; low-ability readers need support for easy cards. So… what about for “middling” cards? We don’t know yet! I don’t think I want to dig into this at the moment.

This data isn’t very clean. If I want to tell this particular story, I can gather new data making a more head-to-head comparison. But I think it’s enough for my understanding now. I’ve pushed through my confusion around Quantum Country users seem to forget most prompts quite slowly: I do indeed see a forgetting curve—it’s just steeper for some readers and prompts than for others. Some readers/prompts experience relatively little forgetting, and that’s fine; we can make the experience more efficient for them. I can make a real case for the counter-factual.

But my picture of the positive case is hazier now! I don’t know how to think about the relative value of in-essay review given the substantial extra time cost. How should I compare these new schedules? How can I evaluate a potential change?

Eglington, L. G., & Pavlik Jr, P. I. (2020). Optimizing practice scheduling requires quantitative tracking of individual item performance. Npj Science of Learning, 5(1), 15 uses a fixed amount of study time to evaluate these trade-offs. Nioche, A., Murena, P.-A., de la Torre-Ortiz, C., & Oulasvirta, A. (2021). Improving Artificial Teachers by Considering How People Learn and Forget. 26th International Conference on Intelligent User Interfaces, 445–453 does something similar—assumes constant study time, searches for the most effective strategy.

The Pavlik paper makes fine distinctions in study time required when the interval is longer, or when the previous attempt failed. I don’t think this is the high-order bit. What really seems to matter is repetition count. How many times do I have to review this thing in a given period to reach a given stability?

2022-02-01 / 2022-02-02

Trying again to summarize what I know about the counter-factual situation. I can actually produce something like a density plot of estimated counter-factual forgetting up to 1 month. It doesn’t look that different from the plot without the practice at 1 day.

Comparisons (with practice for initially-forgotten vs. without):

- median: 82% vs 80%

- 25th %ile: 73% vs 70%

- 10th %ile: 66% vs 64% (10th %ile accuracy for initially-remembered is 69%)

Roughly consistent across readers. I’m finding myself skeptical that the effects of make-up practice are this small, given what we’ve seen elsewhere about the impact of practice.

One reason this data makes sense is that rates of initial forgetting are really pretty low. So having vs. not-having practice can only affect a small fraction of the resulting figure. And: as we look at lower-ability deciles, the recall rates for initially-remembered questions approach those of initially-forgotten questions (in part because we haven’t broken the latter down by reader decile).

So one theory here could be: maybe the recall rate for initially-forgotten questions would actually be much lower than 50% at one month, especially for lower percentile readers. Pushing the data from 20220119094651 for late people (probably unreasonably far), I see declines to 45 and 37% at 42 and 49 days respectively.

Are these late-practicers representative? I can try to assess that by comparing the in-essay accuracies of people reviewing forgotten questions with various delays to the prevailing in-essay accuracies. Here are median (IQR) in-essay accuracies among users reviewing forgotten questions at various delays (20220201092144):

- original schedule

- 0-3.5 days: 88% (79-93%)

- 3.5-10.5 days: 85% (77-91%)

- 10.5-17.5 days: 85% (76-91%)

- 5-day schedule

- 0-3.5 days: 87% (76-91%)

- 3.5-10.5 days: 86% (78-92%)

- 10.5-17.5 days: 85% (76-90%)

- 17.5-24.5 days: 83% (75-90%)

- 24.5-31.5 days: 84% (74-88%)

- 31.5-38.5 days: 82% (74-89%)

Maybe there’s a small effect here, but it’s not clearly all that pronounced. This pool of readers doing these reviews is skewed a few points from the prevailing pool—I’m not filtering by people collecting and completing reviews of 50+ cards, for instance—but it’s close enough that I’m not really going to fuss about the difference.

A thought: if I want more 2 month data, I could swap the %s around for new cohorts to emphasize that condition. Even tripling its prevalence, it’d probably take another year before I got solid data!

How much do people forget next-day? Quantum Country users seem to forget most prompts quite slowly suggests an accuracy of 89% at one day. I should compute scores from an equivalent pool of users to make a real comparison, but that gives me a taste.

So my counterfactual is that after a month, the median recall % is about 80%. 25th %ile is about 70%. This is probably an over-estimate, since we’re looking at relatively conscientious people. But the impact is not uniform: some questions suffer much more forgetting—e.g. 10% of cards have 60% or lower recall rates after 1 month.

Probably a better way to look at this is marginal forgetting. Compare one day to a month or two months.

…

Looking into most-forgotten question from 2020-01 Quantum Country efficacy experiment, curious how it compares vs. this one month forgetting data. The card is xiNW1zgeb2ITHGi6uQtg: “How can you write the $jk$th component of the matrix $M$, in terms of the Dirac notation and the unit vectors $|e_j\rangle$?”. s8duZcGBbu0dxb4xEAGg (“How can we express the squared length $|M |\psi \rangle |^2$ in terms of $M^\dagger M$?”) has almost the same forgetting rate. Both are 42% recall at one month without any support at all (i.e. without reviewing in-essay). They measure 76% and 79% respectively for the control group, which had a couple practice rounds.

So… how did those questions fare after 1 month with the new cohort? It’s a little hard to compare, since some readers remembered in-essay and some didn’t, so they’ll have different amounts of practice. For those who remembered in-essay, the 1-month-hence scores are 69% and 55% respectively. For those who forgot in-essay and got some extra practice, the scores are 84% and 63%; those are 50% and 44% of readers respectively. It’s interesting that extra practice seems to dominate the initial condition. That’s a good insight to follow up on… it’s probably true in general.

One question we can ask is: if those folks forgot in-essay and didn’t get extra practice, what might have happened? Looking at samples from people who simply reviewed late, we don’t really have enough data to say, but at two weeks, with a couple dozen users each, we see 32% and 49% respectively.

The control group numbers in the 2020-01 experiment seem surprisingly low to me: 76% and 79%? Really? Hm. In the 2021 1+3 week scenario, which is comparable, I see 59% and 71% respectively 20220201124331. OK, that squares. These cards clearly struggle with stability. It makes sense that their numbers in this new schedule would be lower than in the 2020 schedule, which would have an extra practice session.

Let me lift my head up here and summarize. For a couple “difficult” cards, recall rates after one month:

- with no support at all: 42% / 42%

- in-essay review (make-up sessions if necessary): 78% / 59%

- remembered in-essay: 75% / 52%

- forgot in-essay, got make-up sessions: 81% / 68% 50% / 43% of readers

- in-essay review (make-up sessions if necessary), practice at 1 week, sample at 3 weeks: 90% / 90%

- 2020 schedule (in-essay, 5 days, 2 weeks, sample at first review after 30 days of ingesting): 76% / 79%

And a few more, for good measure 20220202114355:

- in-essay review (with make-up sessions), sample at 1 week: 85% / 87%

- remembered in-essay: 81% / 83%

- forgot in-essay, got make-up sessions: 90% / 91% 44% / 50% of readers

- in-essay review (with make-up sessions), sample at 2 weeks: 76% / 86%

- remembered in-essay: 65% / 83%

- forgot in-essay, got make-up sessions: 94% / 88% 39% / 49% of readers

- unfortunately, only about 40 samples for each point here, so error bars are enormous

- Do these groups have substantially different “ability” levels? Can I use that to “explain” or “normalize” what I’m seeing? 20220202122723

- Eh… not really. The 2 week group does have a wider spread, but it’s hard to make a strong case here.

- eliminating the requirement for 50+ cards and a complete review, I get:

- 1 week: 82% / 87%; N = 72 / 70

- remembered in-essay: 76% / 84%

- forgot in-essay: 90% / 93% 52% / 49%

- 2 weeks: 75% / 82%; N = 68 / 61

- remembered in-essay: 73% / 85%

- forgot in-essay: 79% / 76% 35% / 34%

- 1 month: 71% / 68%; N = 58 / 71

- remembered in-essay: 65% / 65%

- forgot in-essay: 78% / 71% 47% / 44%

- 2 months: 67% / 65%; N = 51 / 54

- remembered in-essay: 63% / 72%

- forgot in-essay: 74% / 50% 37% / 33%

What about the “easiest” card from the 2021-01 experiment, h1AXHXVtsGKxkamS8Hb2, ($\langle \psi|$ is an example of a …)? It was remembered by 89% in that experiment without in-essay prompts or reviews (96% in the 2020 study with in-essay prompts and reviews). Current data 20220202131353:

- 1 week: 91% N=76

- 2 weeks: 84% N=64

- 1 month: 91% N=56

- 2 months: 100% (?!) N=43

- 1+3 weeks: 100% N=30 20220202131444

What about “lower ability” people? Say we look at the people who scored in the bottom quartile of in-essay accuracies (taking that threshold to be 83%, based on the 01-31 heuristic/data). In that case, I see what’s almost surely noise and survivorship bias: 78% / 64% / 93% / 100% for the four schedules; N = 9 / 11 / 14 / 5 respectively. Looking at the two harder cards, I get 75%/75%; 73%/75%; 62%/58%; 43%/44% for the four schedules. Only about ten samples for each, so not hugely persuasive. But maybe roughly indicative? 20220202153724

I’m a bit worried about the apparent discrepancies with the 2020 control group. Is this about the value of the 1-day make-up sessions? The 2020 control group had more practice in most(?) cases, but they scored worse! Hm… could those be because they were being tested one month after their 2 week session, i.e. at 7 weeks after reading? Looks like the median (IQR) delay is 22 (12-31) days and 21 (12-31) days. So I wonder why the performance is much worse than the 2021 schedule’s 1/3 weeks. Missing the 1-day make-ups? Looking at individual logs, I think that explains some of it, yes. These logs are, in general, pretty sloppy. One key generator of sloppiness here was the way that I decided to sample at one month—i.e. irrespective of when they’d last reviewed. So GyYpSKQ6pYfIHPf6xdK806nD6eR2, for instance, reviews on two adjacent days, because they’d happened to complete their most recent review on day 29 after enrolling.

2022-01-31

I’ve become increasingly interested since my call with Giacomo Randazzo in establishing some “truer” estimate of the QC counterfactual. The day-by-day data I’ve got in Quantum Country users who forget in-essay exhibit sharp forgetting curves gives a bleaker picture of recall for the subset of questions which are initially forgotten in the essay. And I think this data is also more representative because it’s less skewed by survivorship bias. Forgotten questions are reviewed first, so there’s less selection pressure on this data.

So, two related questions: leaving aside survivorship bias, how many questions do “real” readers forget in the essay? and: just how skewed is my practice data by survivorship bias?

I wonder if I can get some sense of the latter by trying to estimate the “ability” (in an IRT sense) of readers in these cohorts, and comparing those estimates to the initial performance while reading. One problem here will be that In-essay Quantum Country reader performance partially predicts first review performance—the correlation’s only about 0.5.

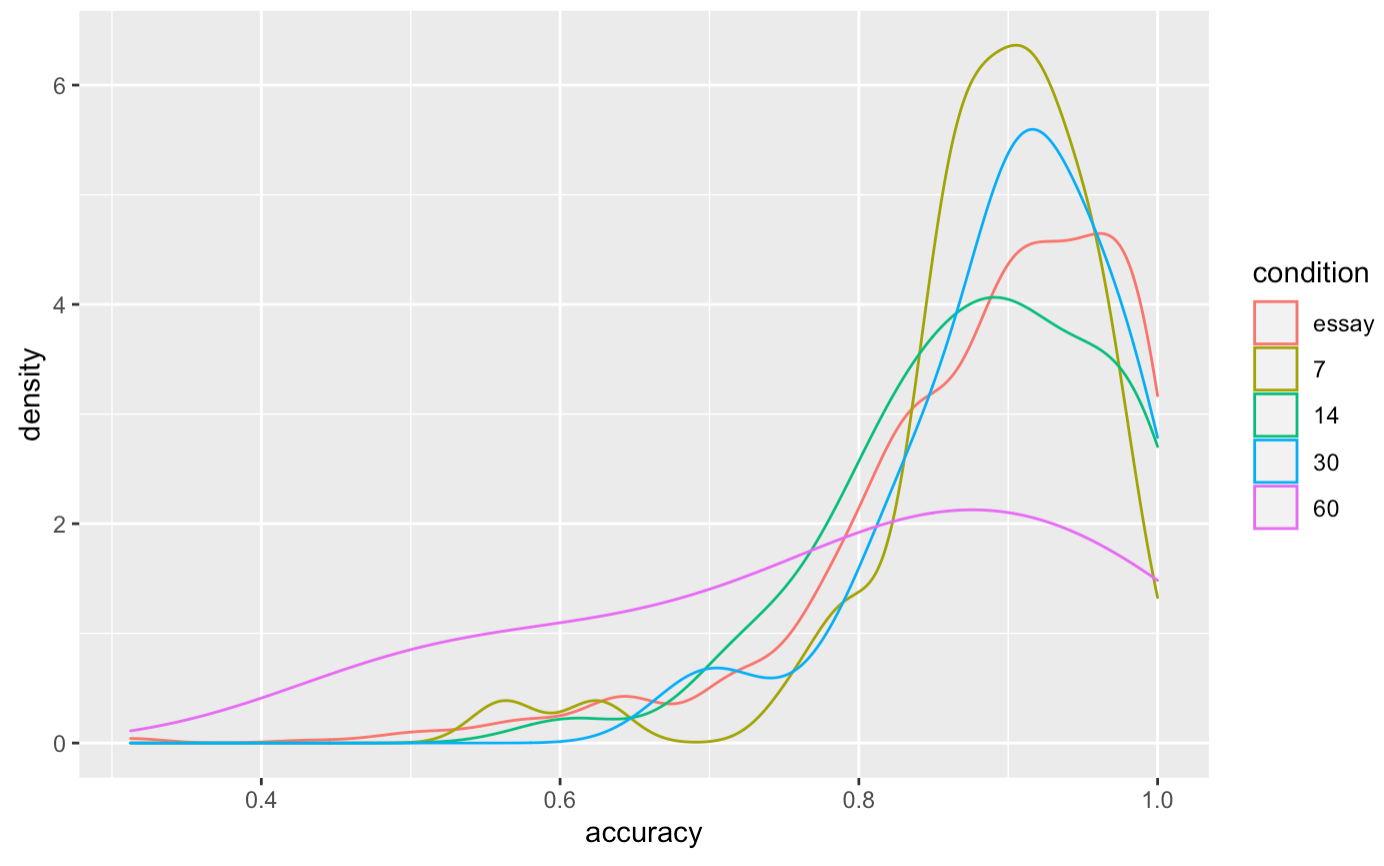

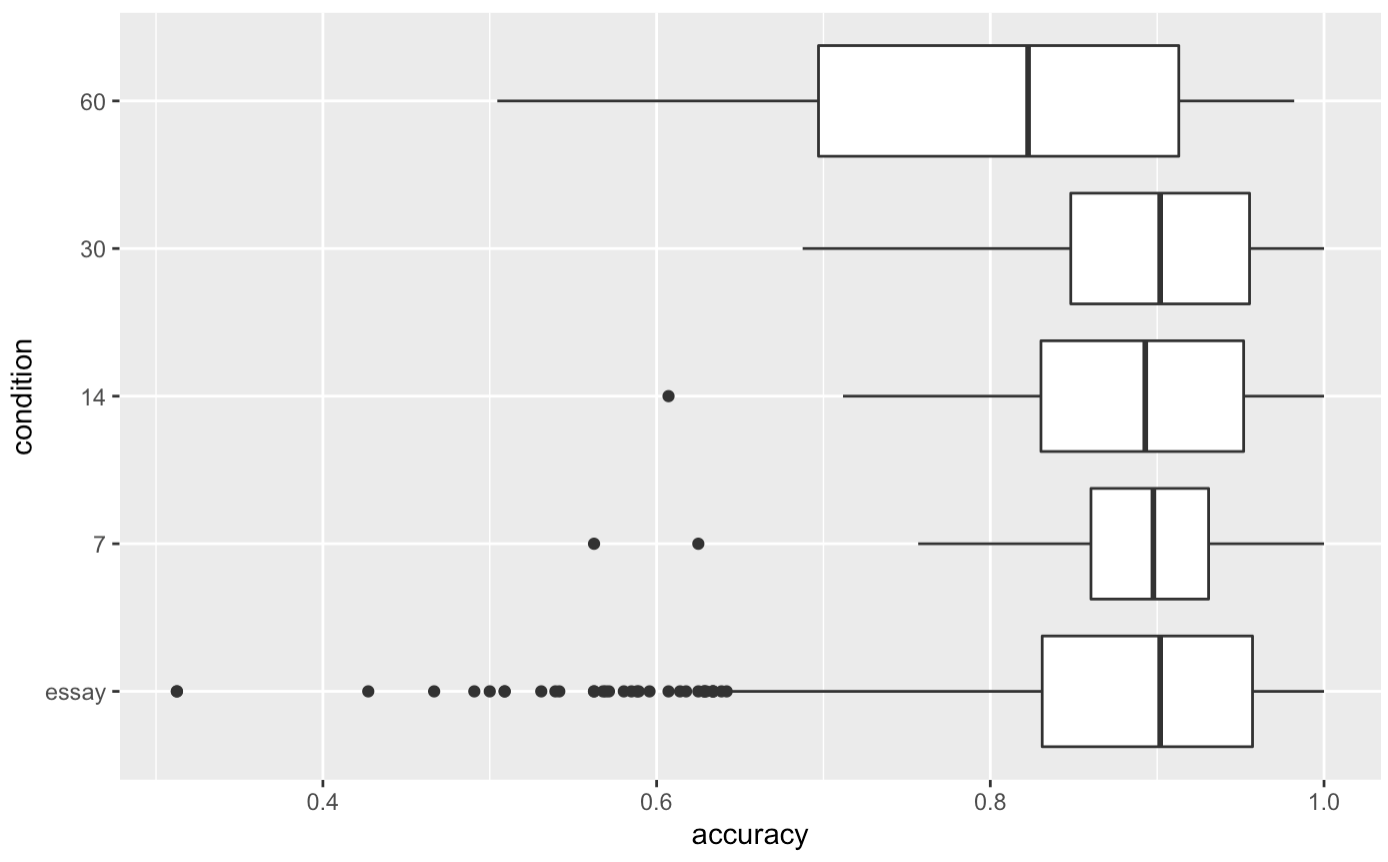

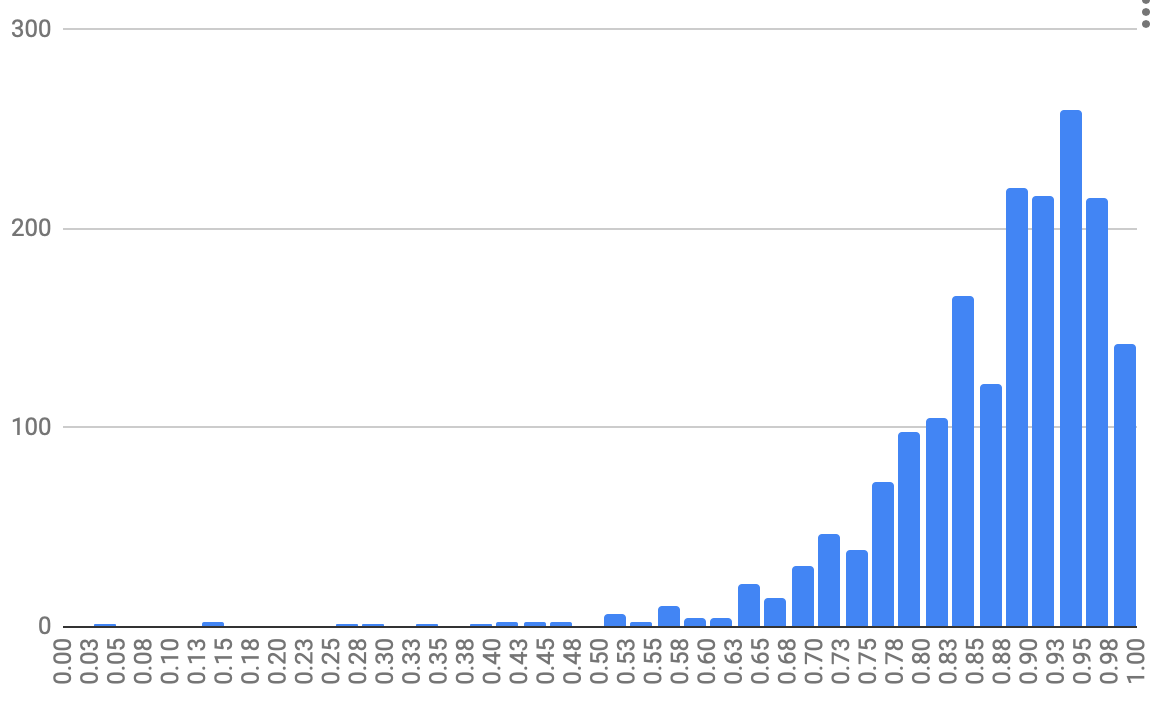

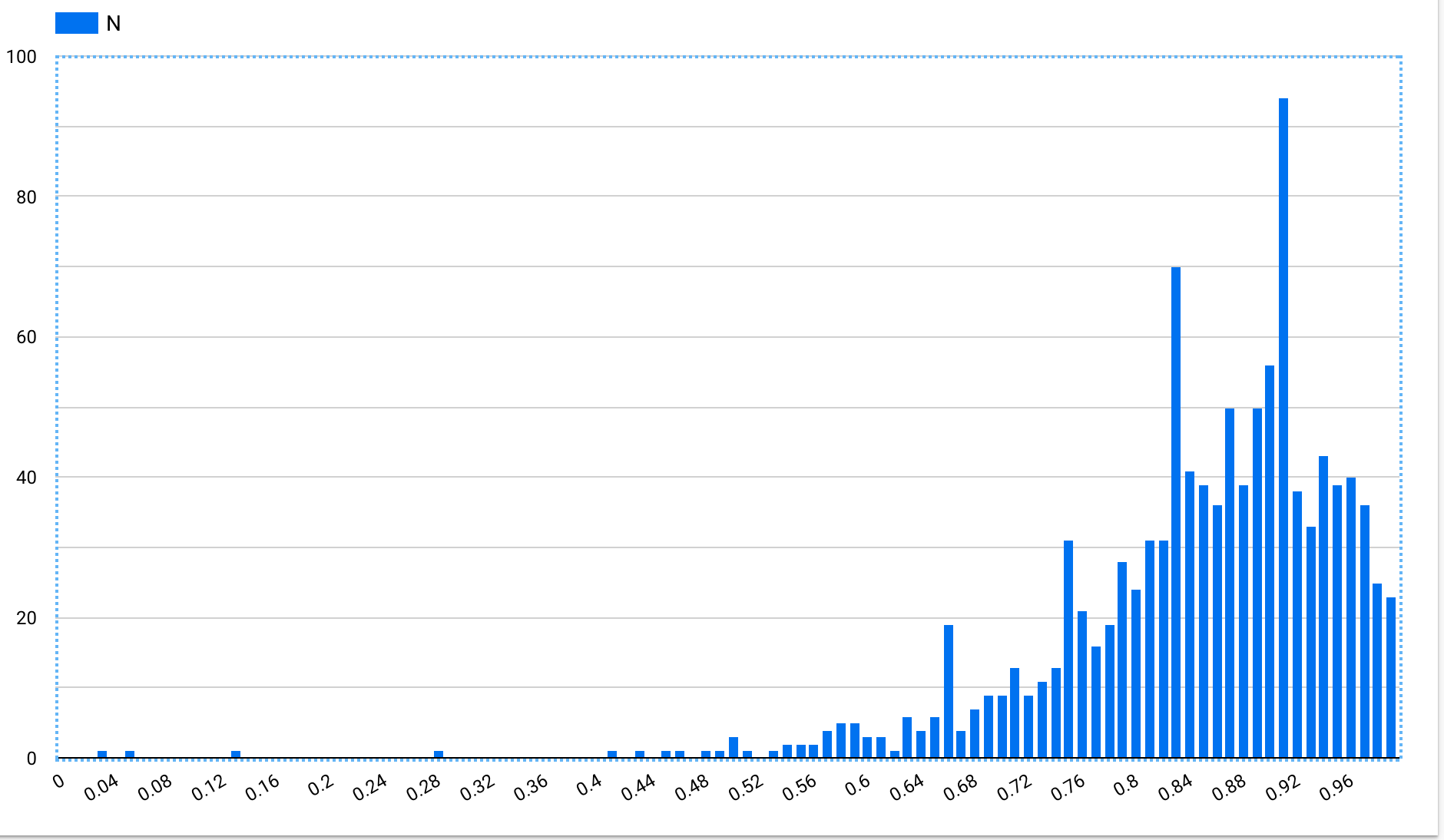

Had to use RStudio to plot this, since Google can’t do density plots. Felt so quick!

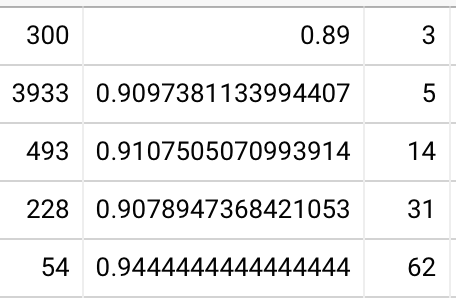

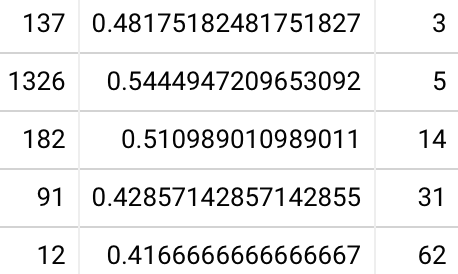

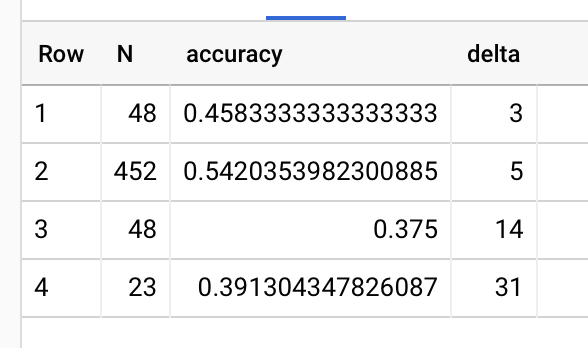

This is over people who collected at least 50 QCVC cards, and reviewed at least 95% of them. 20220131115743 What to make of this? n.b. this is 890 samples for essay, 50 for 7 and 14 days, 33 for 30 days, 6 for 60 days

I don’t make much of this, honestly. The distributions of in-essay performance of the subset of folks who actually complete a first review don’t seem all that skewed relative to the full set. At least for 7, 14, and 30 days, it looks like these are plausibly drawn from the same distribution as the in-essay set. Maybe not 14… but if anything, it’s actually left skewed relative to the essay. This suggests that in terms of “ability”, the numbers I’m looking at for folks who finished their first review are actually pretty representative of the whole. If I sample the median, or the 25th %ile reader in the 14 day condition who finished their first review, their in-essay accuracy will be pretty much the same as the median or 25th %ile reader without those constraints.

What I really want to know is: for various “typical” readers, how much should they expect to forget without review after, say, a month? Or, ideally, two months? The latter I don’t think I can answer—my sample is too small. But maybe I can approximate the former. The reader quantile plot from 2022-01-26 sort of shows this. But it’s the first delayed review, not the first actual review. So it understates the forgetting because the questions forgotten in-essay have received an extra round of practice.

Refining my reasoning from 01-26, let’s use queries for initially-remembered prompts for people who meet the same review criteria above (i.e. 50+ cards collected, 95%+ reviewed, 20220201083843). Now we find, at 1 month, 83% (74-91%; N=31). Doesn’t really change the estimate of 80% recall. Odd that this is only ~2pp below the figure below, which includes practice of the initially-forgotten prompts. 25th %ile is rougher: the estimate is ~70% recall without practice of initially-forgotten prompts. That’s about 3pp lower than the sample with practice of initially-forgotten prompts from 01-26.

I guess I still don’t really believe that I’m not seeing a skewed sample here. Hm… how could I convince myself?

2022-01-27

I realize now that my method for computing reader quantiles in QCVC questions are initially forgotten at very different rates was very wrong. Re-running, I see… well… confusion? 20220127162236 These don’t seem to line up with the graph from yesterday. Also, they don’t show any forgetting?

2022-01-26

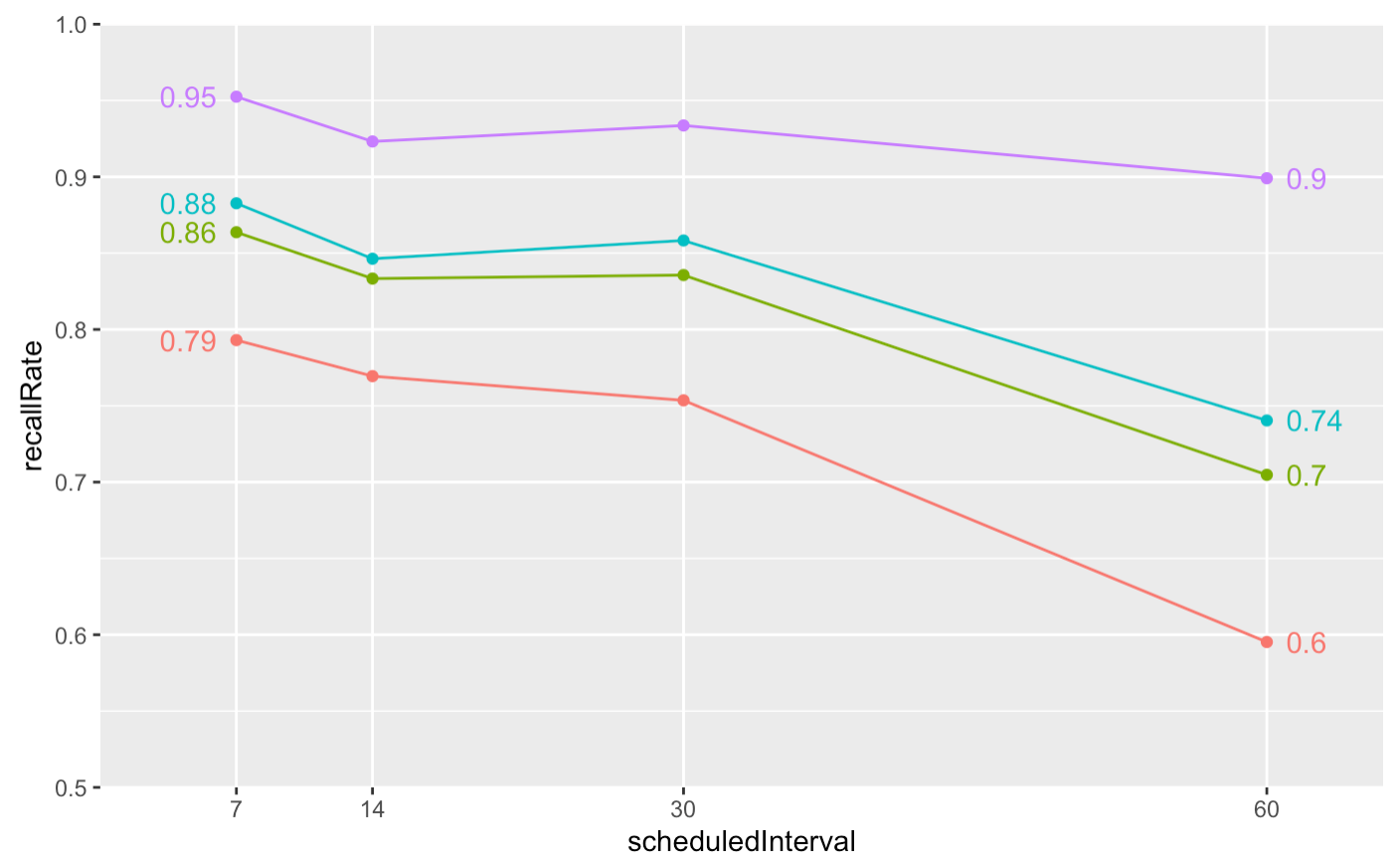

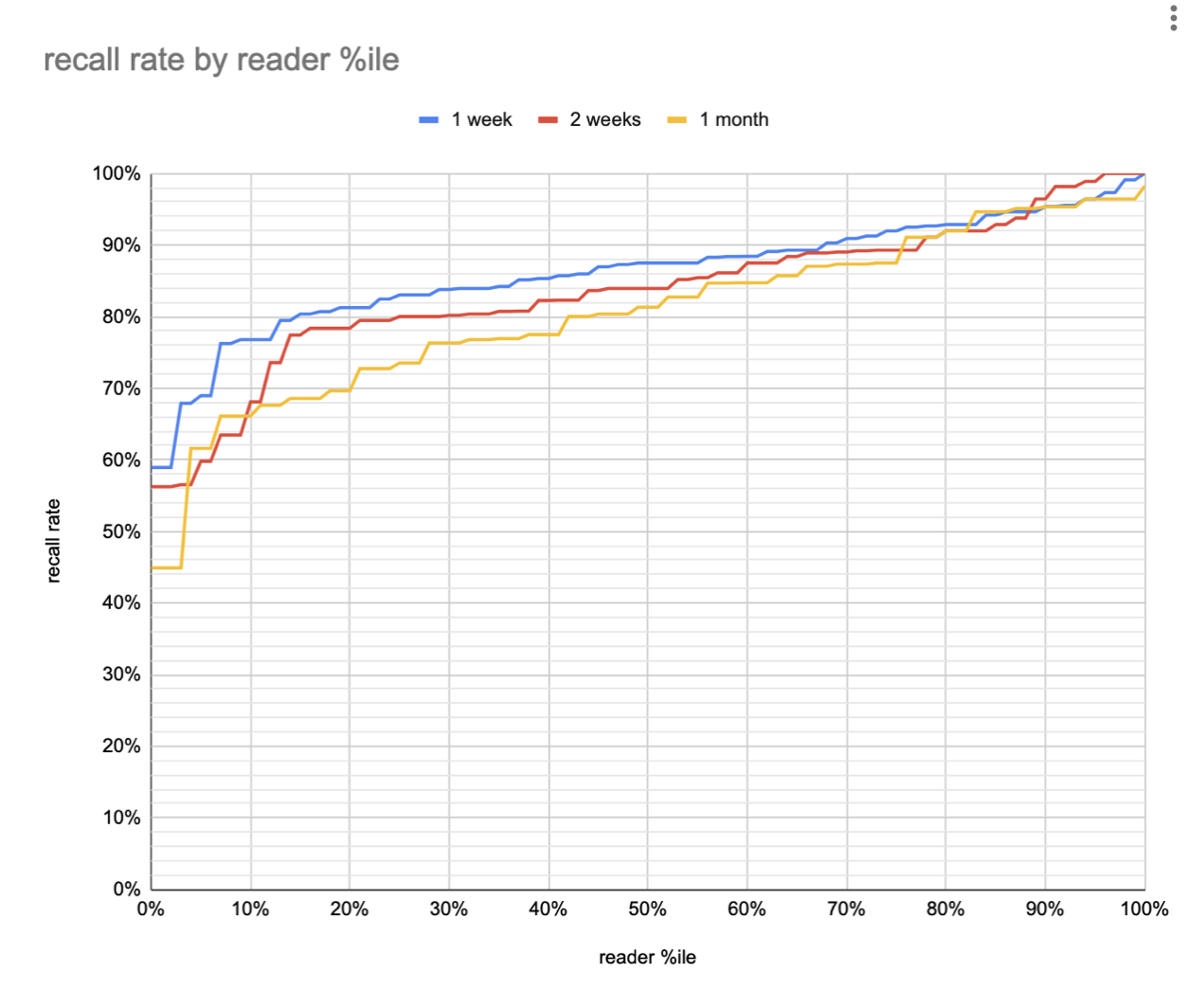

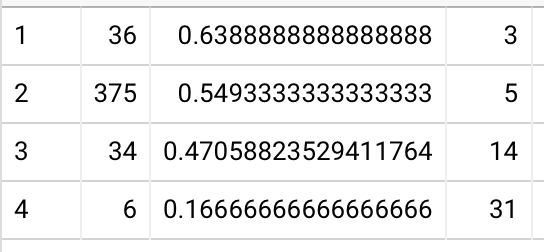

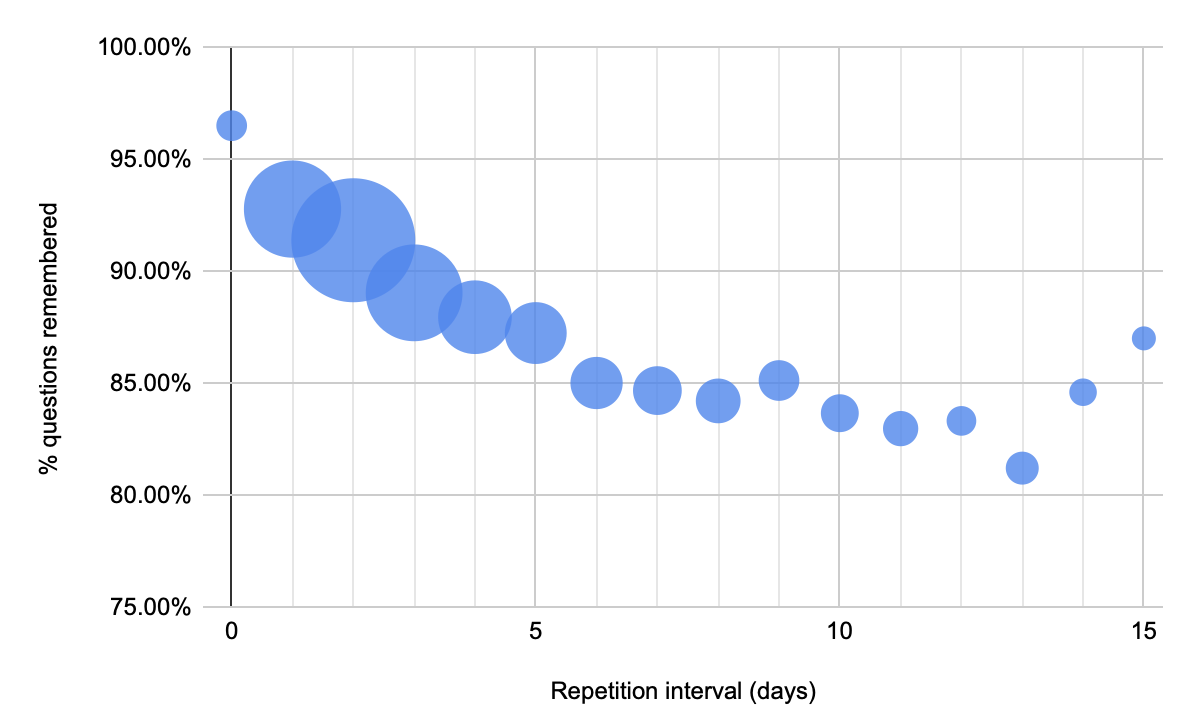

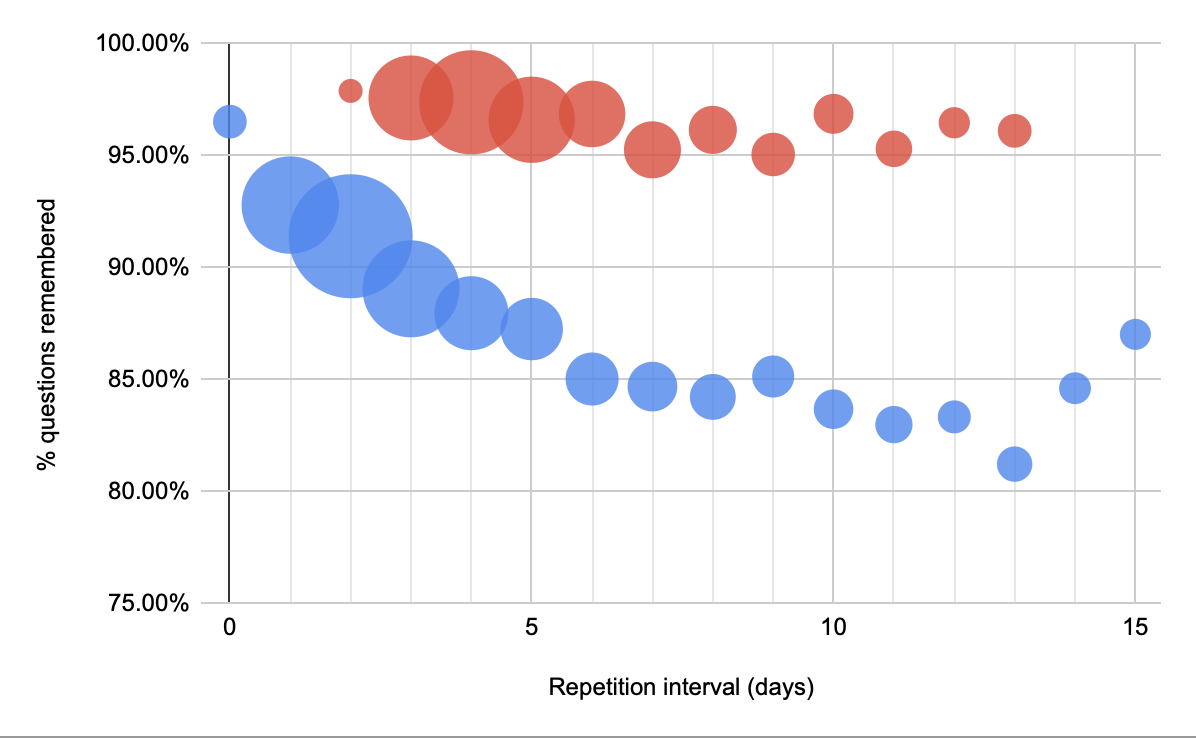

First delayed review, sliced by reader rather than by card (among readers who collected >= 50 QCVC cards and completed first review of >= 95%):

20220126164531 plot

So the median reader forgets a marginal ~8pp over the course of a month; the 25th forgets more like 10pp; 75th more like 5pp. But that’s with the make-up sessions’ reinforcement. This figure doesn’t really tell us about the true counterfactual—what would have happened without practice for a month. Can we see that somehow?

Looking at Quantum Country users who forget in-essay exhibit sharp forgetting curves, it seems that at one month, we should expect initially forgotten questions to have a roughly 50% recall rate. And per Quantum Country users seem to forget most prompts quite slowly, initially remembered prompts are at 84%. Median in-essay accuracy rates are around 90%, so we should expect median one-month recall rates (without make-up reviews) at around 80%. I find this pretty plausible.

25th %ile recall rates at 1 month are around 75% for initially-remembered (per 2021-10-11). Assuming (probably inappropriately) the same 50% recall rate for forgotten questions, and a 90% in-essay recall rate, we find a counterfactual recall rate of 53% at one month for the 25th %ile reader. I find this plausible too.

At the 75th %ile, they didn’t forget any questions in-text… that’s honestly just suspicious. Smells like To what extent do people “lie” when self-grading spaced repetition prompts? If we include only people who ~completed their first repetition, in-essay accuracy rates don’t change much. Median (IQR):

- 1 week: 90% (86-94%, N=34)

- 2 weeks: 87% (81-94%, N=34)

- 1 month: 90% (85-96%, N=23)

- 2 months: 84% (66-94%, N=5)

So anyway, very informally… 75th %ile recall rates at 1 month are ~95% (per 2021-10-11), so we should expect roughly a 93% counterfactual recall rate at 1 month for the 75th %ile reader. This is somewhat less plausible.

2022-01-20

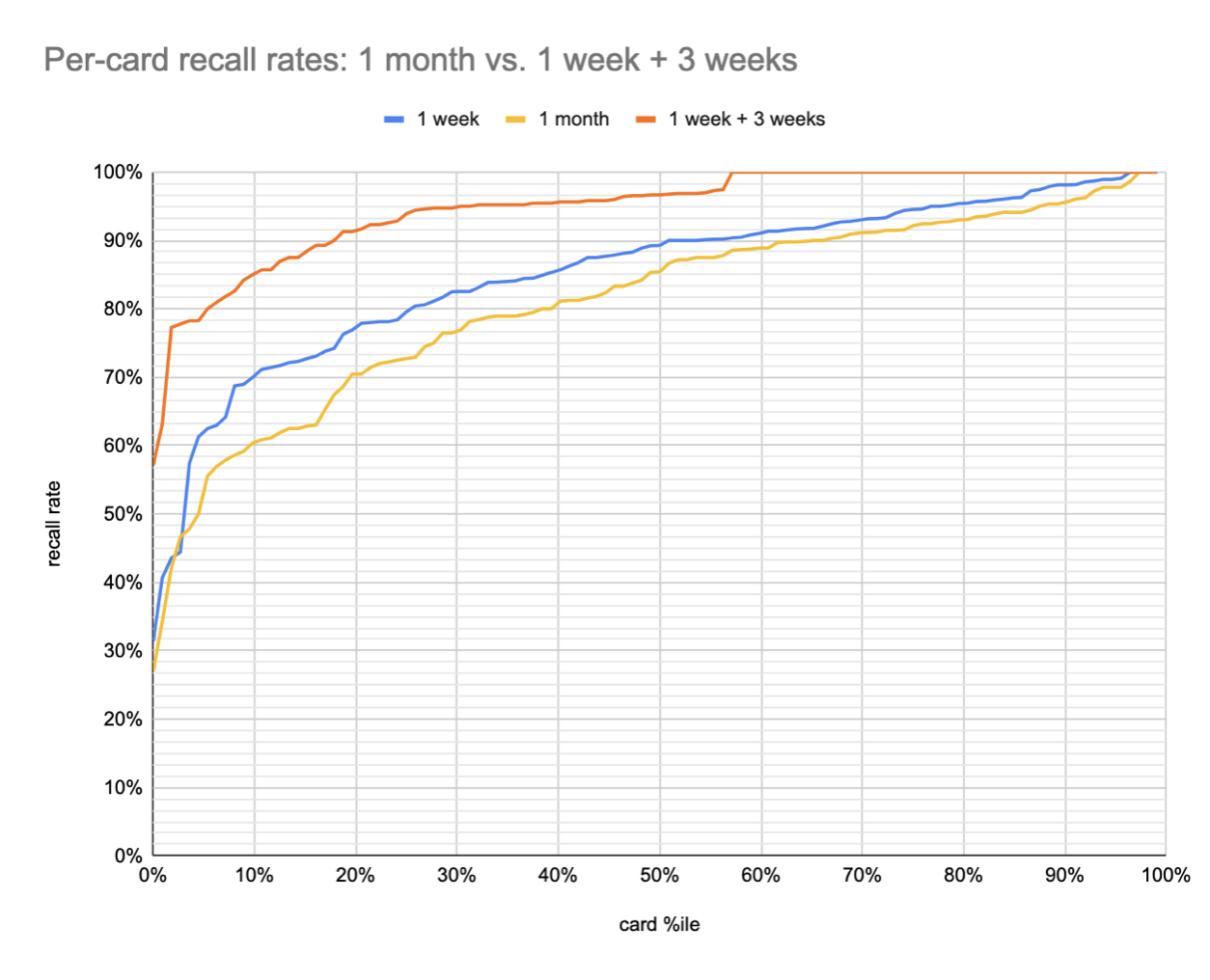

Extending the (hand-wave-y, not really accurate) quantile analysis for 1/2+6 vs 8 weeks:

- % of readers above recall thresholds (1+3 vs 4; 1/2+6 vs 8 weeks):

- 50%+: 97% vs 85%; 95% vs 83%

- 75%+: 94% vs 73%; 86% vs 66%

- 90%+: 86% vs 60%; 78% vs 56%

- % of questions recalled, by reader quantile (1+3 vs 4; 1/2+6 vs 8 weeks):

- 10th %ile: 82% vs 37%; 66% vs 28%

- 25th %ile: 98% vs 72%; 93% vs 65%

- 50th %ile: 100% vs 95%; 100% vs 92%

Not sure I can make much out of this. It’s hard to distinguish the effect of the first session being 1 vs 2 weeks (i.e. lower starting encoding strength) and the effect of the second session being 6 vs 3 weeks out.

The big picture effect of practice is the same: across roughly the same period of eight weeks, a single session of practice makes an absolutely enormous difference in retention rates, particularly for lower-scoring readers and higher-difficulty questions.

30% more readers would get a C or higher on a test at this time; 39% more readers would get an A. Without practice, about half of students would get an A; with practice, about three quarters would. This discussion is all quite speculative, given the incomplete rosters of the second session samples and the strong selection / survivorship effects. But it’s directional, at least.

…

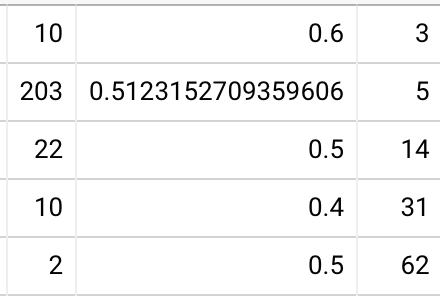

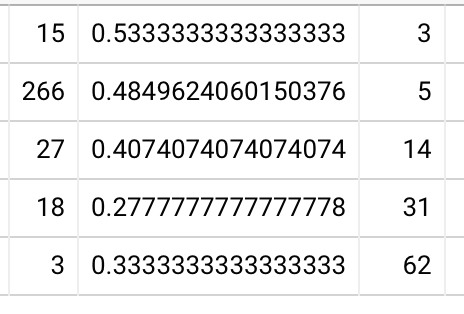

Looking now at the consequences of “make-up” session timing. Across different cohorts, we have:

- make up at 5 days (58%), then 2 weeks: 82%

- make up at 1 day (71%), then 2 weeks: 77%

Looks like this might represent a mild spacing effect?

Using only people who completed a full first delayed session:

- 5 days -> 2 weeks: 82% (6178 reviews, 484 users) 20220120104919

- 1 day -> 2 weeks: 78% (337 reviews, 28 users) 20220120104903

OK, so basically nothing happens to the effect.

Maybe this is because the 5 day users end up doing more practice before getting to 2 weeks, which would make the comparison unfair. Repetition count deciles:

- 5 days -> 2 weeks: 2 / 2 / 2 / 2 / 2 / 4 / 4/ 5 / 6 / 30 20220120105436

- 1 day -> 2 weeks: 2 / 2 / 2 / 2 / 2 / 2 / 2 / 3 / 3 / 6 20220120105634

Yes, this is a somewhat plausible explanation. Half of the users in the 5 -> 14 condition have 2 more repetitions under their belts. So let’s compare accuracies for only those users who remembered on their first make-up attempt.

- 5 days -> 2 weeks: 87% (3236 reviews, 461 users) 20220120110038

- 1 day -> 2 weeks: 78% (401 reviews, 78 users) 20220120105936

This makes sense, in hindsight. Because the 5 day recall rate is lower, this constraint selects for “easier” questions.

OK, well, then maybe the relevant statistic to look at is the expected number of repetitions to get to a given level of recall. This is multi-dimensional! Some users will get the best “scores” in this respect by using very long intervals. Others will need shorter intervals.

Alright, I think I need to look at the per-card plots for people who actually completed a first session, to really understand how things vary per-user.

…

Tried that 20220120165741

But we really don’t have enough data. The broad strokes here don’t look much different from what’s in QCVC questions are initially forgotten at very different rates, but it’s difficult to read much into this.

…

Looked into “why don’t the 2 month folks stick around”? Is there something wrong with the notifications? I don’t really see anything. 20220120170540

2022-01-19

Lots of analysis today in Quantum Country users who forget in-essay exhibit sharp forgetting curves.

How many initial 2-month reviews do we have? 2193 across 63 users (20220119122607). Hard to produce summary stats from that: an average of 35 reviews per user.

2022-01-18

On a whim I plotted 1/2+6 weeks on top of the 1+3 week per-card plot.

That’s pretty interesting. It suggests that forgetting is still a relevant factor in the second session. n.b. I only have 20-30 samples per card for that line.

Let me try to articulate my “so what” w.r.t. what I’ve learned from QCVC questions are initially forgotten at very different rates and these practice plots:

- We’ve roughly established the counterfactual. At least for roughly half of our users, if you don’t review, you’re likely to forget one-two thirds of the material by two months later.

- I need to get the “higher quality” version of this data, controlling the samples more accurately.

- I now actually have some metrics I can monitor and use to improve the efficacy of the system.

- For a large fraction of users and a large fraction of questions, frequent review really is unnecessary. The initial intervals can probably be quite high.

- Practice is the high-order bit, not getting the schedule exactly right.

Wrote:

- In-essay Quantum Country reader performance partially predicts first review performance

- In-essay Quantum Country question difficulties partially predict first review difficulties

- Longer initial intervals produce much lower completion rates (among 2021 QCVC readers)

2022-01-12

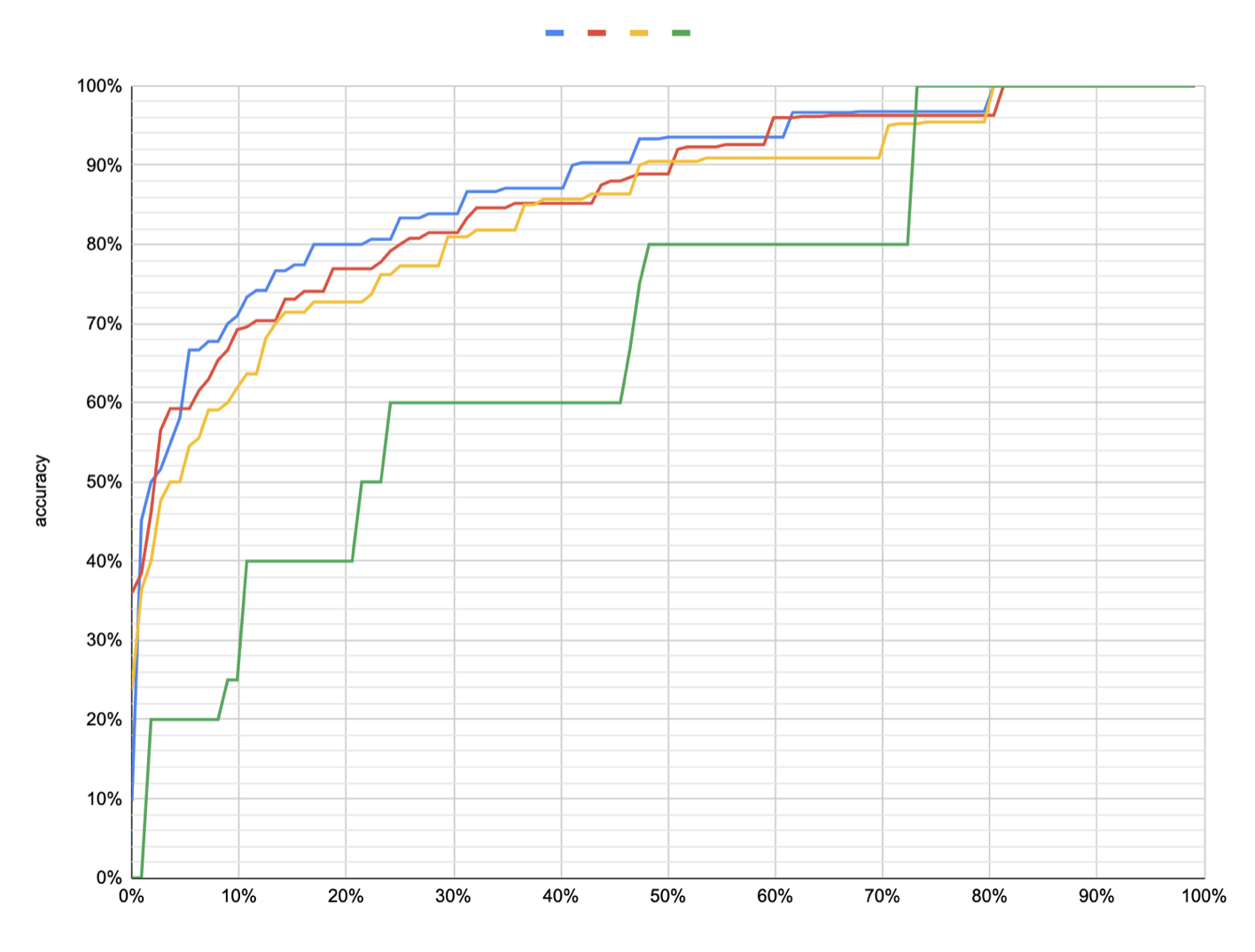

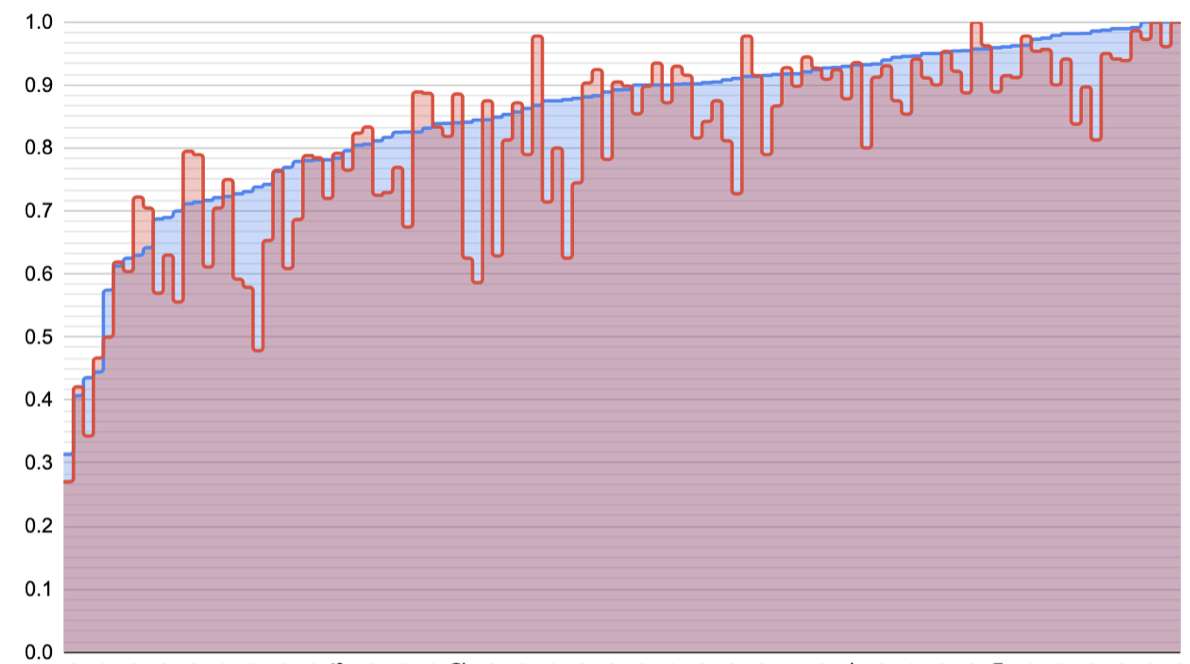

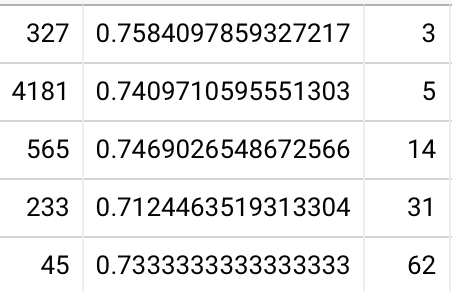

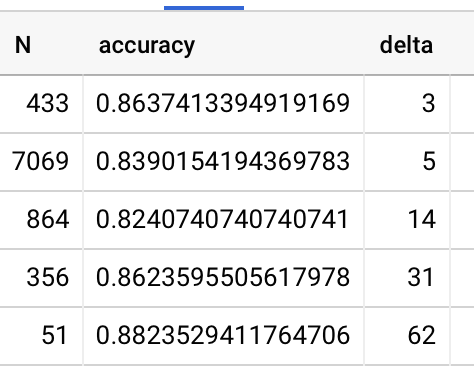

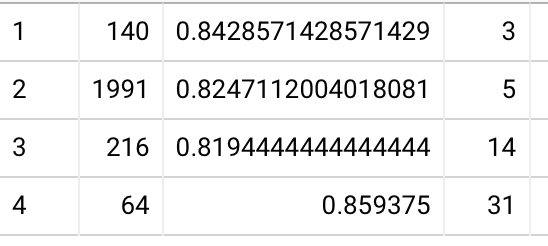

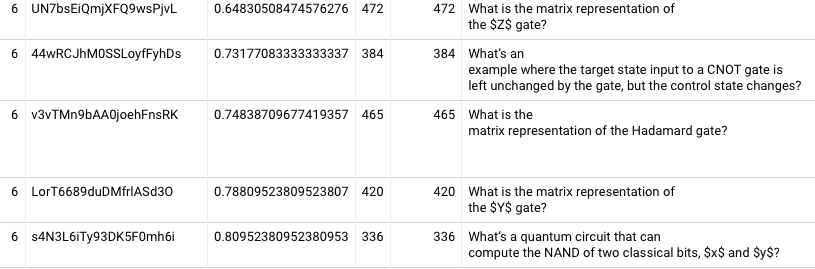

Continuing to look at per-question forgetting. I don’t know why I didn’t think to do this sooner, but here’s all four conditions:

This is really quite interesting. The average forgetting delta between 1 week and 2 months is only 7%. But some questions experience much more forgetting. I’d say about a third of the questions exhibit substantial forgetting (15%); a third exhibit very little (2-3%); and a third exhibit moderate forgetting (8%). The positions of these segments are roughly consistent with an IRT model with the low-forgetting questions at the tails and the high-forgetting at the middle.

Another interesting way to look at this: at 1 week, roughly half the questions are recalled by 90% of readers; three quarters are recalled by 80% of readers. At 2 months, a quarter of questions are recalled by 90% of readers; half are recalled by 80% of readers.

Two take-aways here for me:

- As often happens, many “easy” questions end up flattening differences in more “critical” questions. When they’re removed from consideration, differences appear more clearly.

- I complained that I couldn’t see the counterfactual and that I don’t have a clear metric to guide future work. This seems to solve both. For many questions (though not many others!), 15%+ marginal forgetting is accrued between 1 week and 2 months; 10%+ is accrued between 1 and 4 weeks. Those numbers seem pretty plausible. I bet that if I break things down by quartile things get even clearer. Some reasonable metrics which emerge here are: for how many questions do 90% of people remember? What’s P_recall of the 10th %ile question? (here, roughly 56% vs 72%).

I summarized all this in QCVC questions are initially forgotten at very different rates

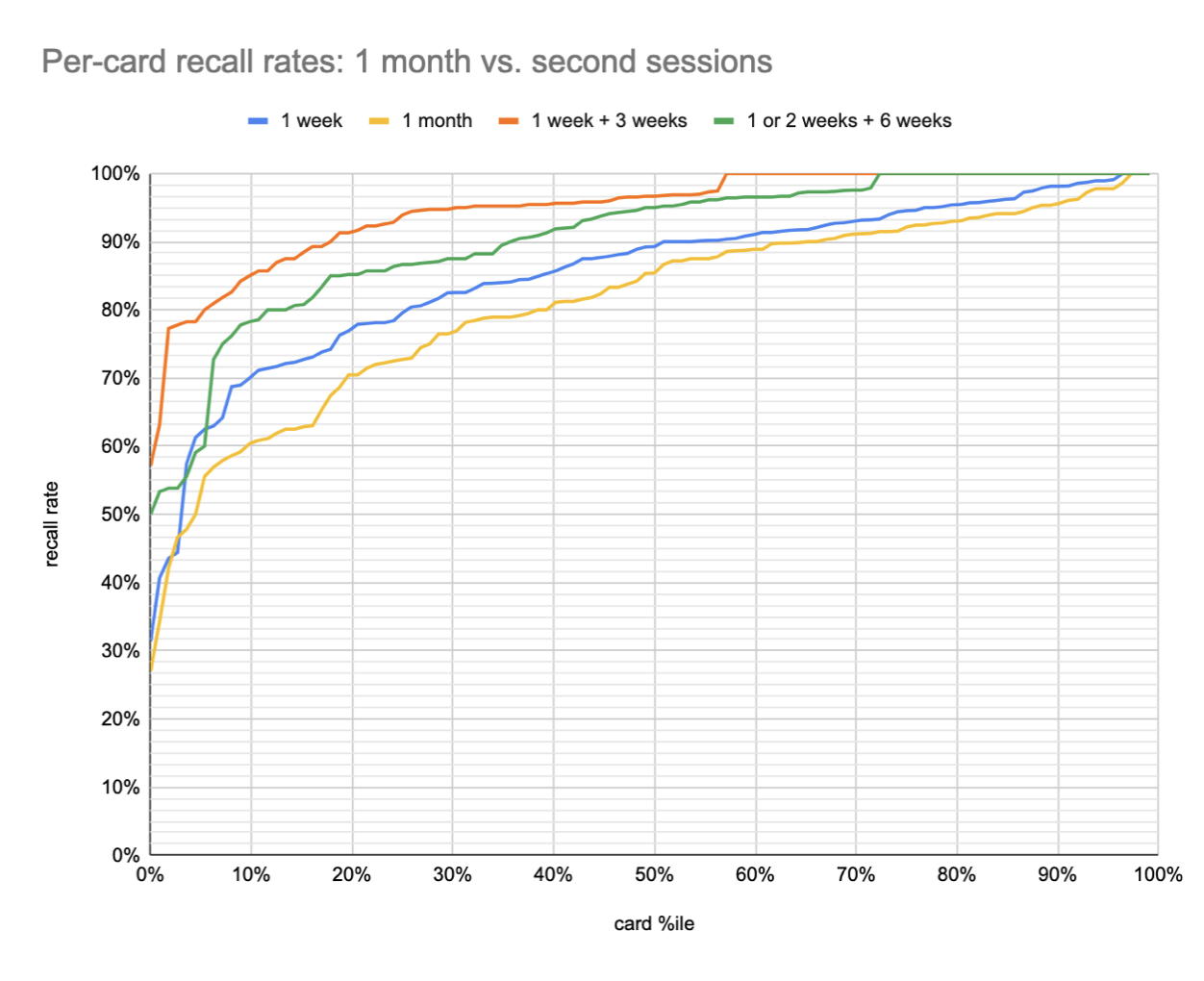

I think it’d be even more instructive to look at second session stats on this same plot. I can directly compare 1+3 vs. 4 weeks. 20220112091414

This tells a much better story. Suppose you want to remember the contents of this book one month later. If you review them at one week, you have a 90% chance of remembering ~80% of them; the 10th %ile question is recalled by 85% of readers. By contrast, if you simply wait one month, you have a 90% chance of remembering only about a third of them, the 10th %ile question is recalled by 61% of readers.

Some caveats here:

The 1+3 samples overstate the relevant recall rates in cases where the reader forgot at the 1 week point. In these cases, before attempting the 3 week interval, they try again after a 1 week interval, and proceed to the 3 week interval only once successful. This both falsely inflates the data (more practice) and also probably skews the samples up, since I expect some users bailed before getting to the 3 week point.

The 1+3 readers are doing more review sessions, which skews the sample more conscientious.

% of readers above recall thresholds (1+3 vs 4 weeks):

- 50%+: 96% vs 85%

- 75%+: 94% vs 73%

- 90%+: 86% vs 60%

% of questions recalled, by reader quantile (1+3 vs 4 weeks):

- 10th %ile: 82% vs 37%

- 25th %ile: 95% vs 72%

- 50th %ile: 100% vs 95%

2022-01-11

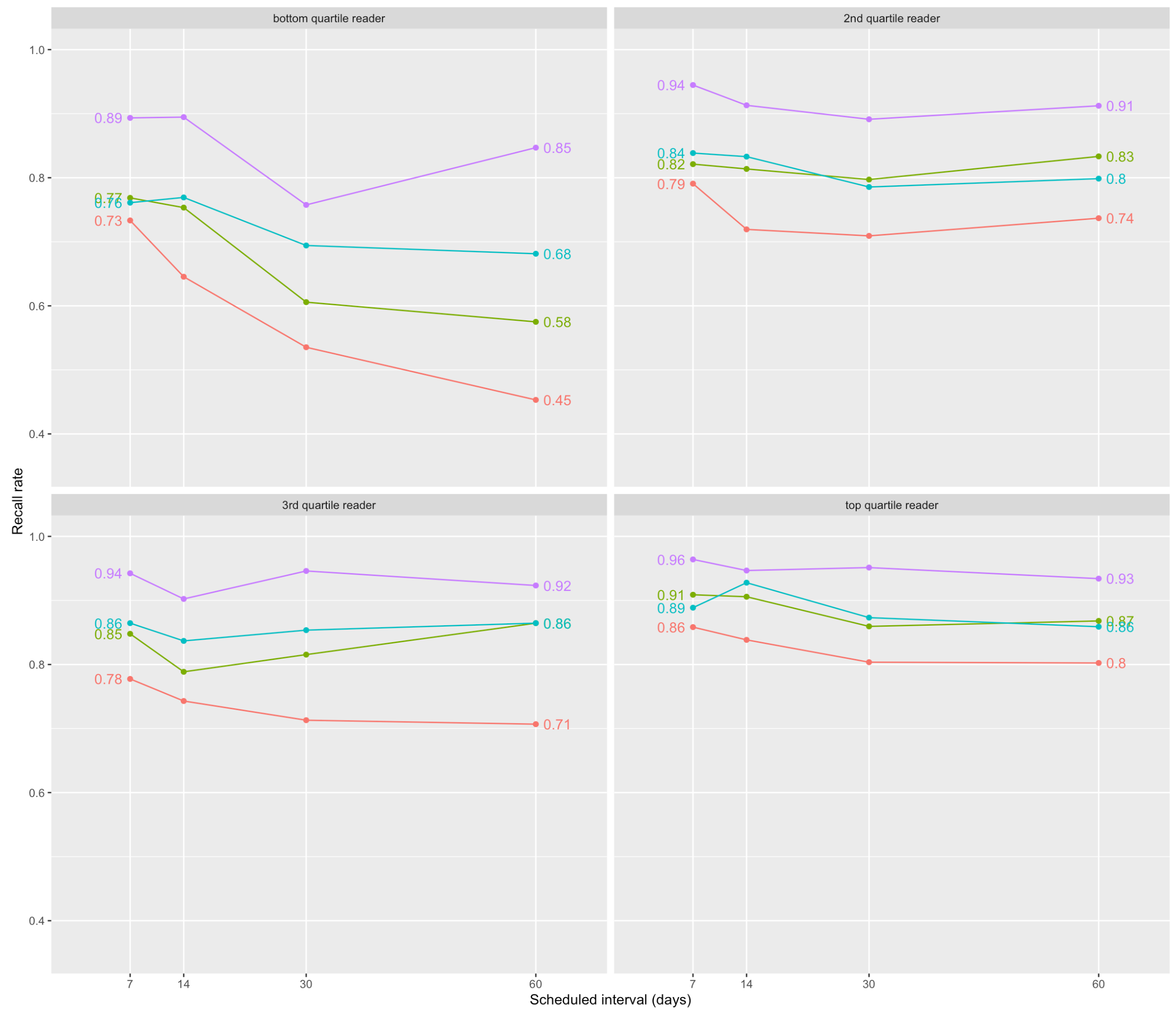

Looking into whether forgetting curves are consistently much steeper for bottom-quartile users.

First off, here are in-essay accuracies by user, among 2021-04 Quantum Country schedule experiment readers who collected at least 80 QCVC cards and completed a delayed review of them all, in-essay accuracies 20220111061948 are 90% (85-96%, N=87).

Note that when I remove these conditions—looking at all users, irrespective of how many prompts they collected or whether they reviewed any of them later—I see 89% (81-95%, N=890). So there’s not apparently that much selection pressure in my much smaller sample.

Bottom quartile (in-essay) accuracies at first delayed repetition: 83% / 78% / 77% at 1 / 2 / 4 weeks, with a user count of 8, 10, and 4 respectively 20220111063752. If I remove the constraint that the user has completed their first review (still requiring 80+ cards collected), I see 79% / 79% / 75% / 72% with 20 / 25 / 17 / 8 users. If I remove the 80+ card requirement, I see 80% / 79% / 74% / 71% with 40 / 45 / 29 / 12 users. Note that this downward skew supports the hypothesis of survivorship bias I’ve articulated a number of times when looking at long-lasting QC accounts.

So the bottom quartile forgets roughly a quarter of the material a month after doing the in-essay reviews (but forgets about half that much as soon as 1 week later). This still seems like a pretty small amount of forgetting to me, considering that we’re talking about the bottom quartile, but it’s maybe in the realm of plausibility, given the survivorship bias involved in this sample.

Combining the second and third quartiles, I see 88% / 84% / 86% / 83% with 50 / 38 / 26 / 22 users. So it really does seem to be true that the bottom quartile has a steeper forgetting curve. The fourth quartile is 91% / 91% / 87% / 83% with 17 / 21 / 14 / 11 users.

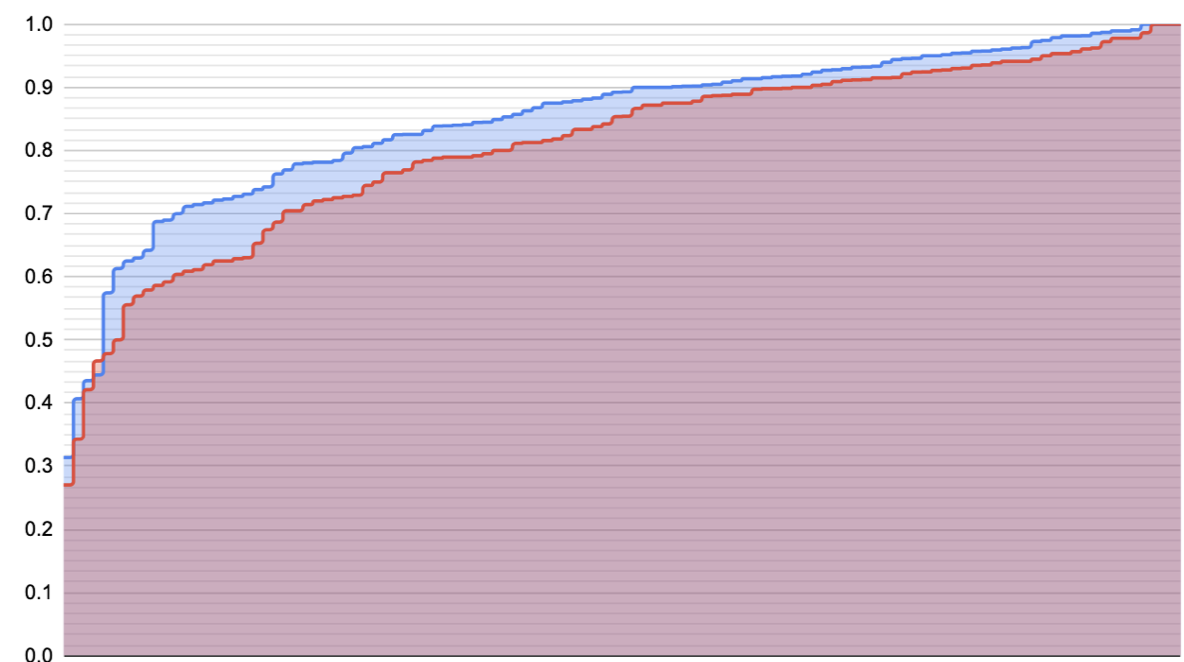

Here’s 1 week (blue) vs. 1 month (red) per-card QCVC accuracies. Top image sorts each by accuracy within the cohort (and so each position on the X axis isn’t necessarily the same card); bottom image aligns 1 month data to 1 week x axis. 20220112085959

What do I see here?

- I certainly don’t see a sigmoid, like I’d drawn. Maybe there’s a left tail five prompts long or something, but that’d be the extent of it. More just a cratering on the left edge.

- Ah: but I shouldn’t see a sigmoid. The sigmoid describes the probability, not the expected sample. If the true probability of the “easy questions” is 0.8, and I draw 100 samples, the 95% range extends 8% to either side. We see that noise echoed in the bottom plot.

- This also (maybe) explains the more or less linear growth in accuracy for much of the entire set of cards.

- Roughly half of the questions are in a part of the curve where the delta is only about 2%. In IRT terms, they’d be on the saturated right edge of the sigmoid. That sounds pretty plausible to me.

- Roughly speaking, as difficulty increases, so does the delta between the conditions. This is also consistent with an IRT-like model.

- I’m not sure what’s going on with the left edge. One story is that this point we’re in the saturated left leg of the IRT plot for most ability levels. Another story is that sampling variance will increase for these values close to 50%.

Indeed: the slopes we see here can be explained by quite a simple model. Assume IRT: accuracy varies with the logistic of a question’s difficulty. Then here’s a plot which draws a similar sample to the live ones above, assuming a stupid simple two-level difficulty model (i.e. “there are a few quite hard questions; almost all the rest are pretty easy”).

Struggling with the “so what”… this doesn’t quite seem to support my notion that there are questions which are so easy that a decline doesn’t shift the distribution much, and some which are likewise so hard.

2022-01-10

A very quick look at response times by delay interval suggests no relationship (median seconds (IQR)) 20220110105021:

- 5 days: 9 (6-16)

- 1 week: 9 (6-16)

- 2 weeks: 9 (5-16)

- 1 month: 9 (6-17)

- 2 months: 9 (6-15)

Each with thousands or tens of thousands of data points. Boy, this really doesn’t make sense!

But maybe if I break it down by card or ability or prior recall or whatever…

2022-01-07

Updated first delayed repetition in QCVC, for readers who have collected at least 50 questions and answered at least 90% of those they collected 20211130174228:

- 1 week: 87% (82-92%, N=46)

- 2 weeks: 85% (81-90%, N=41)

- 1 month: 85% (74-92%, N=27)

- 2 months: 70% (61-79%, ==N=5==)

2021-12-16

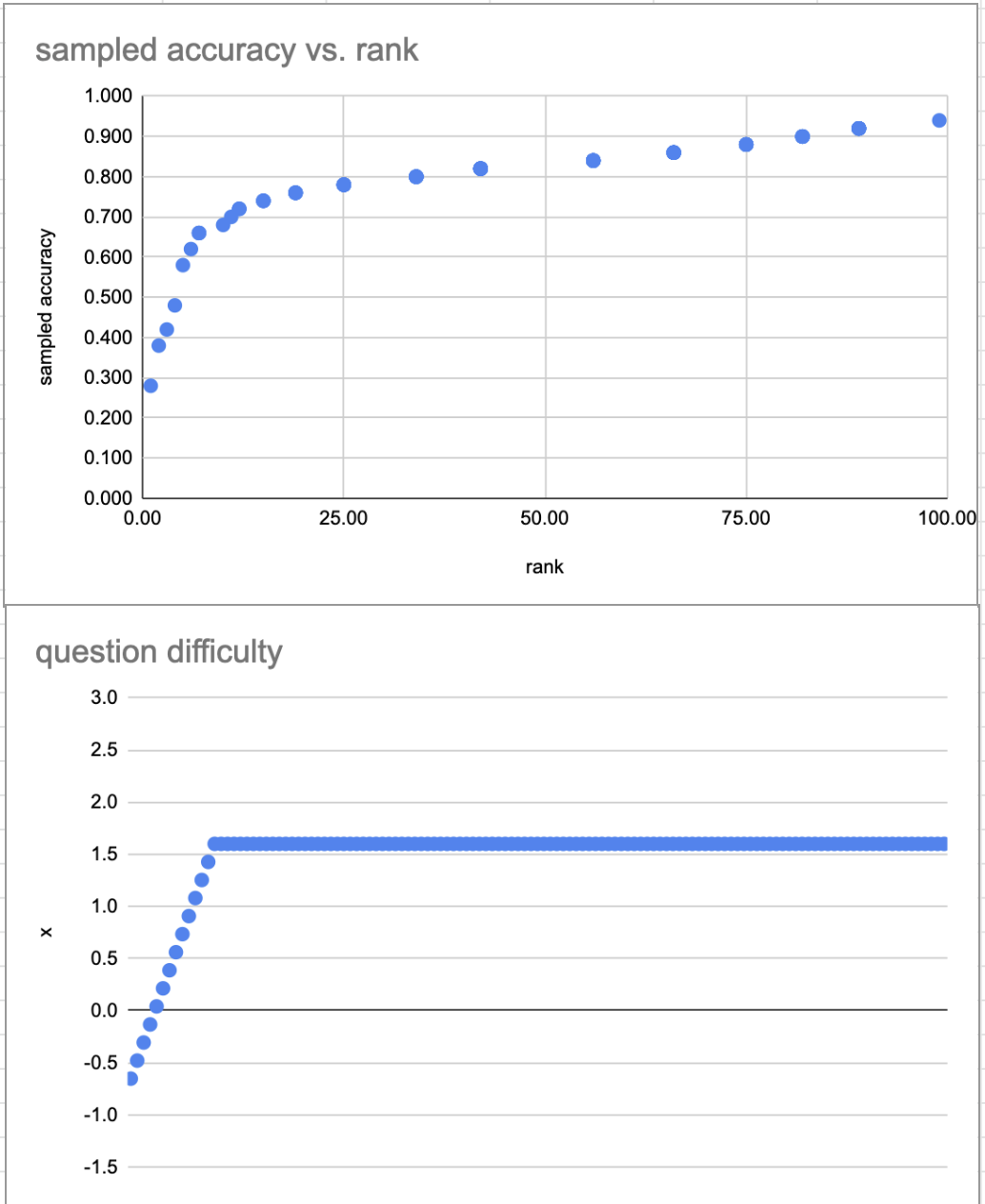

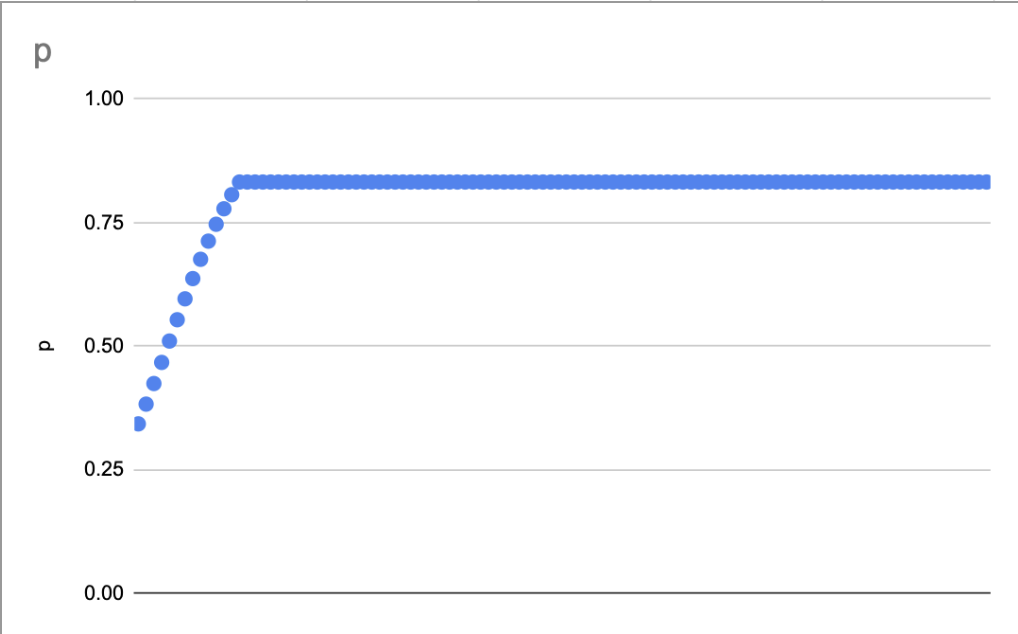

Got interested in how much variability seems to be explained just by question difficulty and reader proficiency.

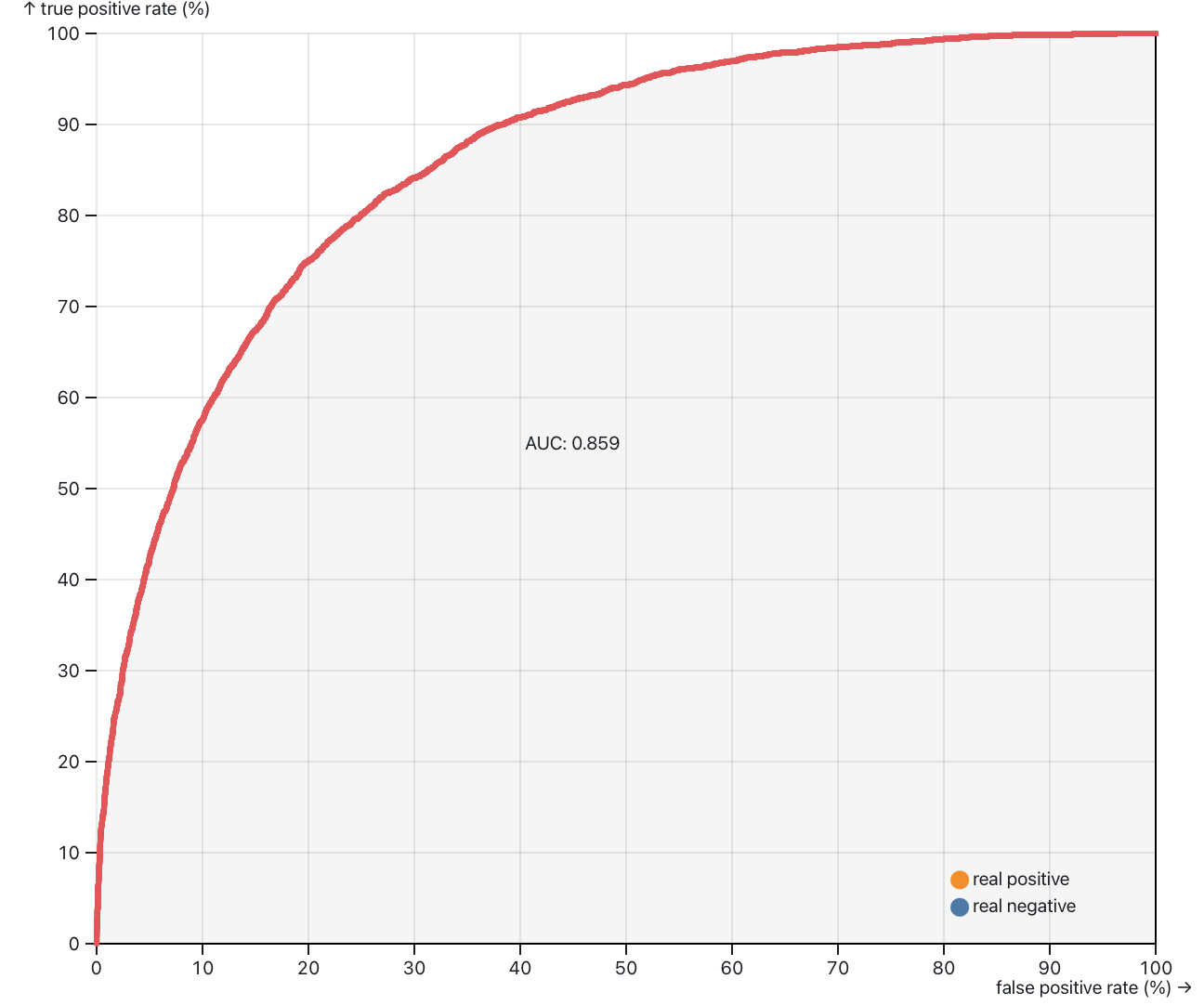

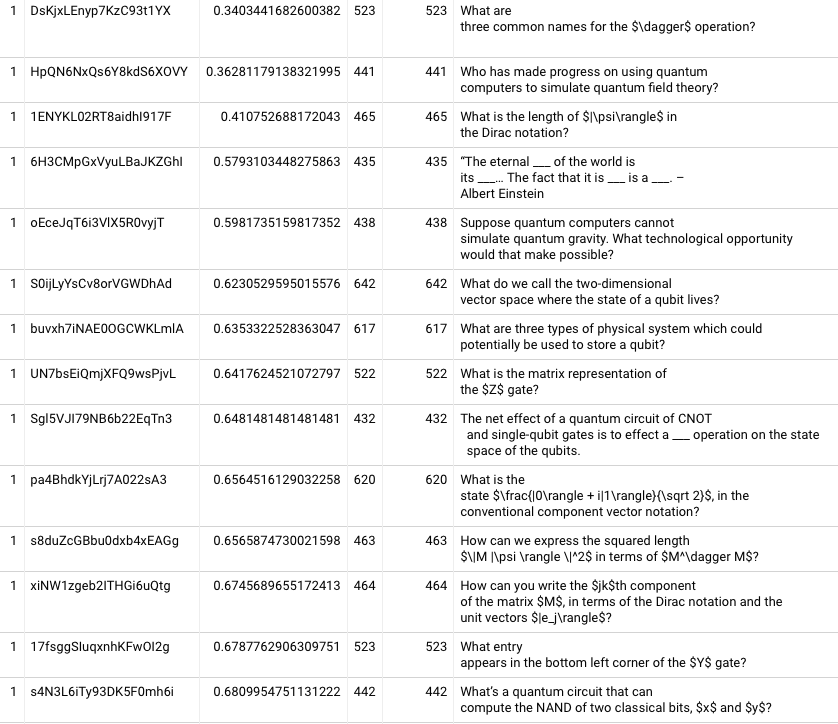

Ran a simple IRT model on in-essay data (20211216124546), put it through py-irt; got an 86% AUC:

Interpreting this… say that we want to catch 90% of the instances in which a student would have forgotten (i.e. a 10% false positive rate). The corresponding point on the vertical axis is roughly a 60% true positive rate, i.e. in 40% of the instances in which a student did remember the answer, we’d assign the question anyway.

The ratio of remembering to forgetting is roughly 7:1. So this means for 112 questions in QC, we’d make you review 39 you could answer correctly, make you review 12 you would have forgotten, and miss ~1 you’d forget. i.e. this isn’t very predictive!

Tried to use this IRT model to make predictions about the first review session (20211216160434), but running into issues with py-irt. Don’t feel like debugging it now; I don’t have a clear enough grasp on why I’m doing what Im’ doing.

2021-11-30

Updated first delayed repetition in QCVC, for readers who have collected at least 50 questions and answered at least 90% of those they collected 20211130174228:

- 1 week: 87% (81-92%, N=35)

- 2 weeks: 87% (81-91%, N=35)

- 1 month: 85% (77-92%, N=25)

Lumping all reviews in each condition into one big bucket and looking at that accuracy rate 20211130174838:

- 1 week: 86% (N=138 readers, 6381 reviews)

- 2 weeks: 84% (N=142 readers, 6319 reviews)

- 1 month: 83% (N=90 readers, 4477 reviews)

- 2 months: 81% (N=50 readers, 1744 reviews)

Looking at original-schedule users for 1 day:

- 1 day: 87% (N=258 readers, 6433 reviews)

In-essay accuracy for people who complete a first review: 93-95% across the board (20211130195455). 90% at 25th %ile, 100% at 75th %ile. 20220127110154

2021-11-26

Some more happy-path second session analysis, through an unprincipled across all schedules 20211126111544:

- 1 day -> 1 day (97 readers, 196 reviews): 90%

- 1 day -> 3 days (1441 readers, 73515 reviews): 97%

- 1 day -> 5 days (39 readers, 634 reviews): 97%

- 1 day -> 7 days (80 readers, 939 reviews): 96%

- 3 days -> 7 days (216 readers, 2260 reviews): 96%

- 3 days -> 14 days (8 readers, 252 reviews): 97%

- 7 days -> 14 days (20 readers, 222 reviews): 96%

- 7 days -> 21 days (37 readers, 1420 reviews): 96%

- 7 days -> 42 days (10 readers, 447 reviews): 93%

- 5 days -> 14 days (2090 readers, 89140 reviews): 95%

- 5 days -> 31 days (112 readers, 803 reviews): 96%

- 5 days -> 62 days (11 readers, 110 reviews): 97%

- 14 days -> 31 days (208 readers, 2023 reviews): 97%

- 30 days -> 90 days (16 readers, 326 reviews): 96%

- 31 days -> 62 days (18 readers, 204 reviews): 97%

Pretty much totally flat, I’d say. No real Spacing effect here, either, as far as I can see, though most of these pairs aren’t directly comparable. They’re also not generally comparable because most of these pairs (the ones not logged in the Quantum Country users seem to forget most prompts quite slowly) involve users whose schedules varied per-question rather than per-user, so there’s lots of likely inter-card interference.

And now looking at third sessions, remembered in-essay and in the first two sessions 20211126112016:

- 1 -> 3 -> 7 (967 / 51975): 98%

- 1 -> 3 -> 14 (39 / 1107): 97%

- 1 -> 5 -> 14 (19 / 322): 98%

- 1 -> 7 -> 14 (54 / 590): 97%

- 3 -> 7 -> 14 (157 / 1617): 98%

- 5 -> 14 -> 31 (1062 / 42850): 97%

- 5 -> 14 -> 62 (30 / 336): 98%

- 5 -> 31 -> 62 (60 / 414): 97%

- 14 -> 31 -> 62 (100 / 1097): 97%

Welp, alright! Nothing to see here, really. This is a natural consequence of Quantum Country users rarely forget after demonstrating five-day retention.

Another interesting question to ask, which might more clearly reveal the trouble for longer delays: how many repetitions necessary before the first successful repetition after a delay?

Because there’s so little forgetting going on, it doesn’t really seem to matter. 20211126122806

Let me try asking this aggregating by user. For users who eventually recall >= 100 cards after a delay, how many total reviews does it take? Null result here… though I can’t shake the feeling that I’m not asking this correctly. 20211126124426

2021-11-22 / 2021-11-23

Continuing my analysis of the compounding effects of the forgetting curve.

I find myself wanting to produce something like the “forking paths” diagram I’d made earlier this year. I’ll combine the people in the A and AX etc cohorts.

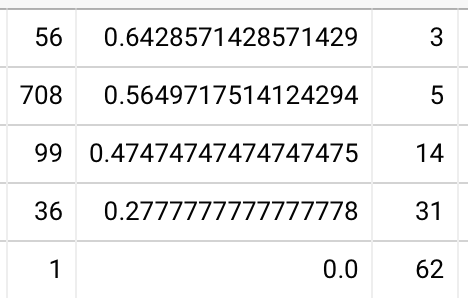

First session accuracies (1 day later) 20211122100841, across the four conditions which will follow:

- 1 week (162 readers, 1287 reviews): 72%

- 2 weeks (165 readers, 1174 reviews): 73%

- 1 month (154 readers, 1159 reviews): 67%

- 2 months (172 readers, 1349 reviews): 68%

This variation should be entirely noise. It’s discouraging that I see a slight downward trend here because I know it’s noise, and yet I’m still seeing a downward slope with a lot more samples than my other results. As far as I can tell, looking at the raw samples, it really is noise. This really calls into question the rest of my analysis. But I suppose at least we can look at what happens next. Update: the noise collapsed after I resolved the bug described a few paragraphs down. A 6% spread is not that unreasonable: the 95% confidence interval for any of these samples is roughly ±3% assuming these reviews are binomial iid.

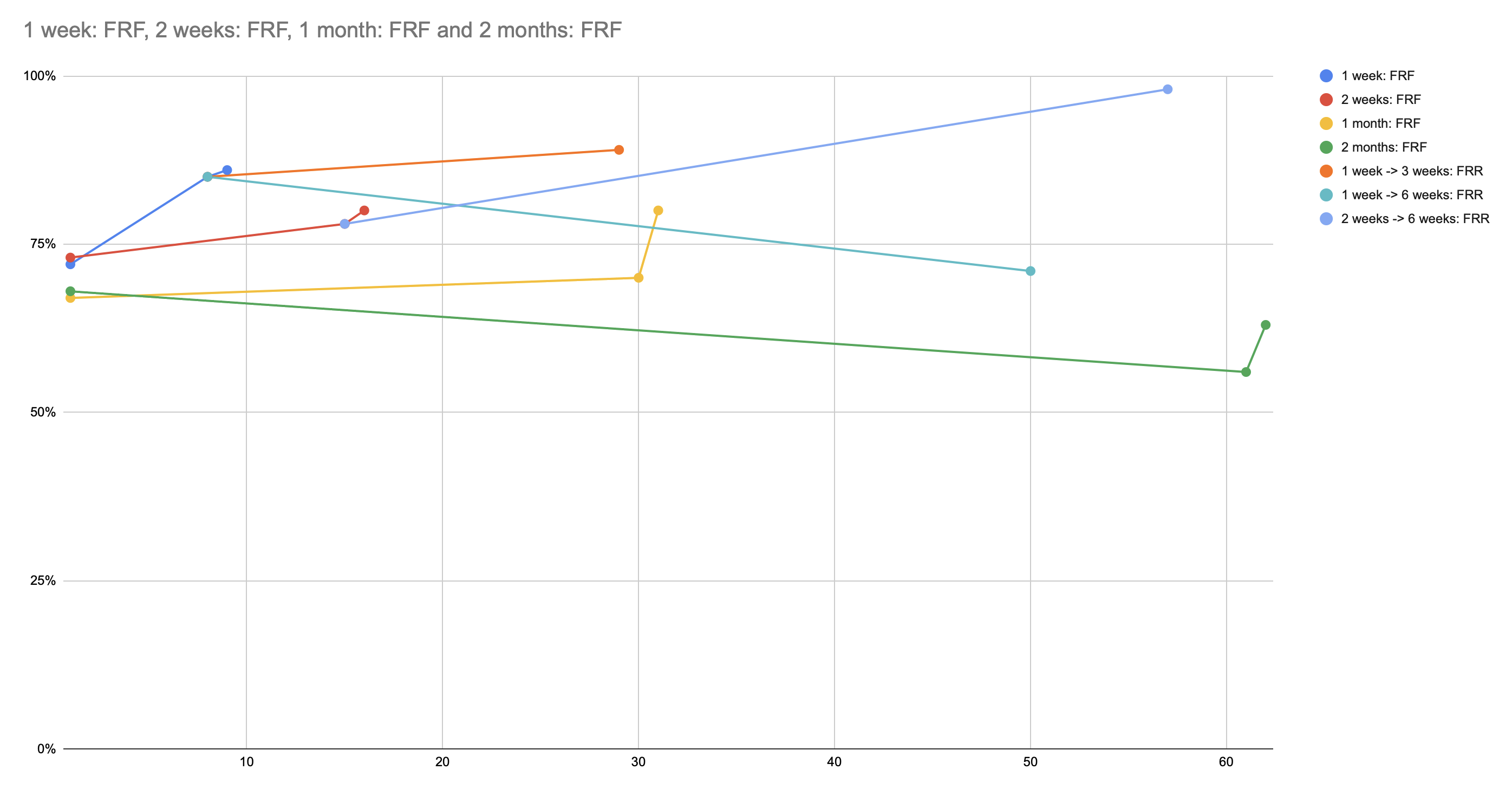

Second session accuracies (assuming remembered in first session) 20211123090637:

- 1 week (75 readers, 451 reviews): 85%

- 2 weeks (65 readers, 300 reviews): 78%

- 1 month (55 readers, 254 reviews): 70%

- 2 months (26 readers, 95 reviews): 56%

Trying to explain the noise I see in the first-session results, I dug into the actual experimental groups behind each of these… and now I’m very confused. The 2-week group contains a handful of A, C, and D folks too. What the heck??

Alright. There were bugs. Particularly at the beginning, it seems. And… older users are being opted into the new schedules. Blugh. I’m going to have to constrain these results much more carefully. Constraining to the actual expected beforeIntervals seems to be sufficient.

Third session accuracies, by second session latency, assuming forgotten in second session (one day later) 20211123103658:

- 1 week (31 readers, 79 reviews): 90%

- 2 weeks (33 readers, 86 reviews): 78%

- 1 month (24 readers, 70 reviews): 83%

- 2 months (9 readers, 44 reviews): 57%

Third session accuracies, by second session latency, assuming remembered in second session 20211123104202:

- 1 week -> 3 weeks (22 readers, 127 reviews): 89%

- 1 week -> 6 weeks (7 readers, 56 reviews): 71%

- 2 weeks -> 6 weeks (18 readers, 82 reviews): 98%

These seem to demonstrate the Spacing effect—first time I’ve seen that in Quantum Country data. Not many samples, though.

Another way to put this, which emphasizes the compounding nature of late scheduling: how often do people forget both in the delayed recall test and in the following recovery session?

- 1 week: 1%

- 2 weeks: 5%

- 1 month: 5%

- 2 months: 19%

Very casual attempt to plot this data here.

Repeating my analysis of remembered -> forgotten prompt recovery rates (20211123163958):

- 1 week (68 readers, 600 reviews): 84%

- 2 weeks (66 readers, 529 reviews): 81%

- 1 month (44 readers, 384 reviews): 85%

- 2 months (16 readers, 147 reviews): 74%

Pretty much the same as I got a few days ago… but I find it more interesting today. The endpoints are far enough apart to suggest a real effect.

And now, what about a fourth session, assuming forgotten in second and recalled in third? Eh… not really enough data for me to believe it. 20211123165933

Now looking at “happy path” traces (remembered in essay and in first review) 20211123171811:

- 1 week -> 3 weeks (37 readers, 1420 reviews): 96%

- 1 week -> 6 weeks (10 readers, 447 reviews: 93%

- 2 weeks -> 6 weeks (38 readers, 1205 reviews): 94%

- 1 month -> 3 months (16 readers, 326 reviews): 96%

Pretty amazing… no real diffs here! And not much demonstration of the Spacing effect.

Extending to aggressiveStart users 20211124102352:

- 5 days -> 14 days: 95%

- 5 days -> 1 month: 97%

2021-11-18

I realized late last night that if I want to see the impact of scheduling “too late”, I really should be looking at comparisons where the accuracies at the point of forgetting are quite disparate. So modifying yesterday’s query to look at questions which are:

- forgotten in-essay

- remembered in some “recovery” review session afterwards

- forgotten in the first “real” review session, after that

- … then reviewed one day later.

Inconclusive, but perhaps suggests a downwards trend.

- 1 week (31 readers, 75 reviews): 89%

- 2 weeks (33 readers, 83 reviews): 67%

- 1 month (20 readers, 62 reviews): 81%

- 2 months (8 readers, 43 reviews): 56%

A 5 days data point shows… 53%? I don’t understand what’s up with these users and why they’re so different. Can it be the stupid 1729 thing? Let me try excluding that data. Nope. Not sure what’s going on here.

OK… it looks like the behavior actually used to be:

- forget a prompt in-essay, then retry: it’s at 5 days

- remember at 5 days: now it’s 2 weeks

And so in the results I’m seeing for aggressiveStart which list both 5 and 14 days, the 5-day people forgot again in their first review session. (I wonder if this explains the weirdly-low 5-day numbers I was seeing the past couple days?)

Refining, for aggressiveStart:

- forgotten in-essay

- remembered in first session (5 days later)

- forgotten in second session (2 weeks later)

- … third session? (5 days later)

20211118111432: 74% (510 readers, 949 reviews)

For original (but n.b. they had no retry!… so not really comparable):

- forgotten in-essay

- remembered in first session (1 day later)

- forgotten in second session (1 day later)

- … third session? (1 day later)

20211118111922: 71% (420 readers, 671 reviews)

This suggests that the impact of retry is greater than the impact of scheduling.

Now looking again at the recent experimental groups, which will have the form:

- forgotten in-essay

- remembered in first session (1 day later)

- forgotten in second session (X days later)

- … third session? (1 day later)

- 1 week (30 readers, 59 reviews): 86%

- 2 weeks (30 readers, 53 reviews): 70%

- 1 month (18 readers, 47 reviews): 81%

- 2 months (8 readers, 30 reviews): 63%

Certainly suggests a causal downwards trend. What I take from this: Retrieval practice, even when repeating the question until it’s remembered, does not fully compensate for forgetting. If you’re going to forget, it’s best to do the retrieval practice as soon as possible.

Amusing: there’s some very weak evidence here for the Spacing effect, comparing to the aggressiveStart data, in which the first session is 5 days (74%), vs. 1 day (70%) in this instance.

I should expect to see this effect anywhere I can measure a real forgetting curve. So I should see it also for “hard” cards forgotten in the first session. But I don’t, really! I see 85 / 85 / 85 / 67%. 20211118121112. < 50 data points per category; 21 for 2 months. Hm.

I tried just repeating the query from 11/16 with this analysis… and I’m getting fewer samples and add flatter curve: 74 / 69 / 65 / 62. Gotta understand why. Oh! I’m pretty sure it’s because I’m only including instances where it was remembered initially. Yep!

2021-11-17

OK. Let’s look at accuracy among users of 2021-04 Quantum Country schedule experiment for questions which are:

- remembered in-essay

- forgotten in first session (X days later)

- … then reviewed one day later.

Looks like a null result. 20211117184120

- 1 week (68 readers; 600 reviews): 84%

- 2 weeks (63 readers; 496 reviews): 82%

- 1 month (43 readers; 379 reviews): 85%

- 2 months (16 readers; 147 reviews): 74%

…but you know, it makes sense that this would be a null result. We measured very little forgetting curve in this case anyway: questions remembered in essay are forgotten surprisingly slowly.

2021-11-16

Thinking again about 2021-04 Quantum Country schedule experiment. Following up on the stats from last time…

First, first repetition accuracies across users who collected all prompts:

- 2021-04-A (1 week, N=25): 79% / 86% / 92%

- 2021-04-B (2 weeks, N=22): 77% / 87% / 92%

- 2021-04-C (1 month, N=13): 70% / 85% / 95%

- (not enough for D)

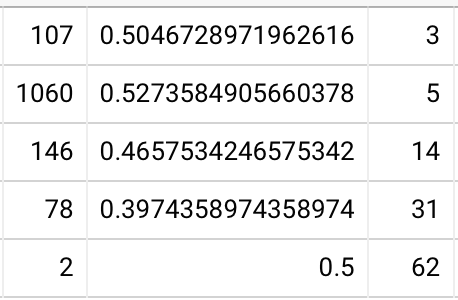

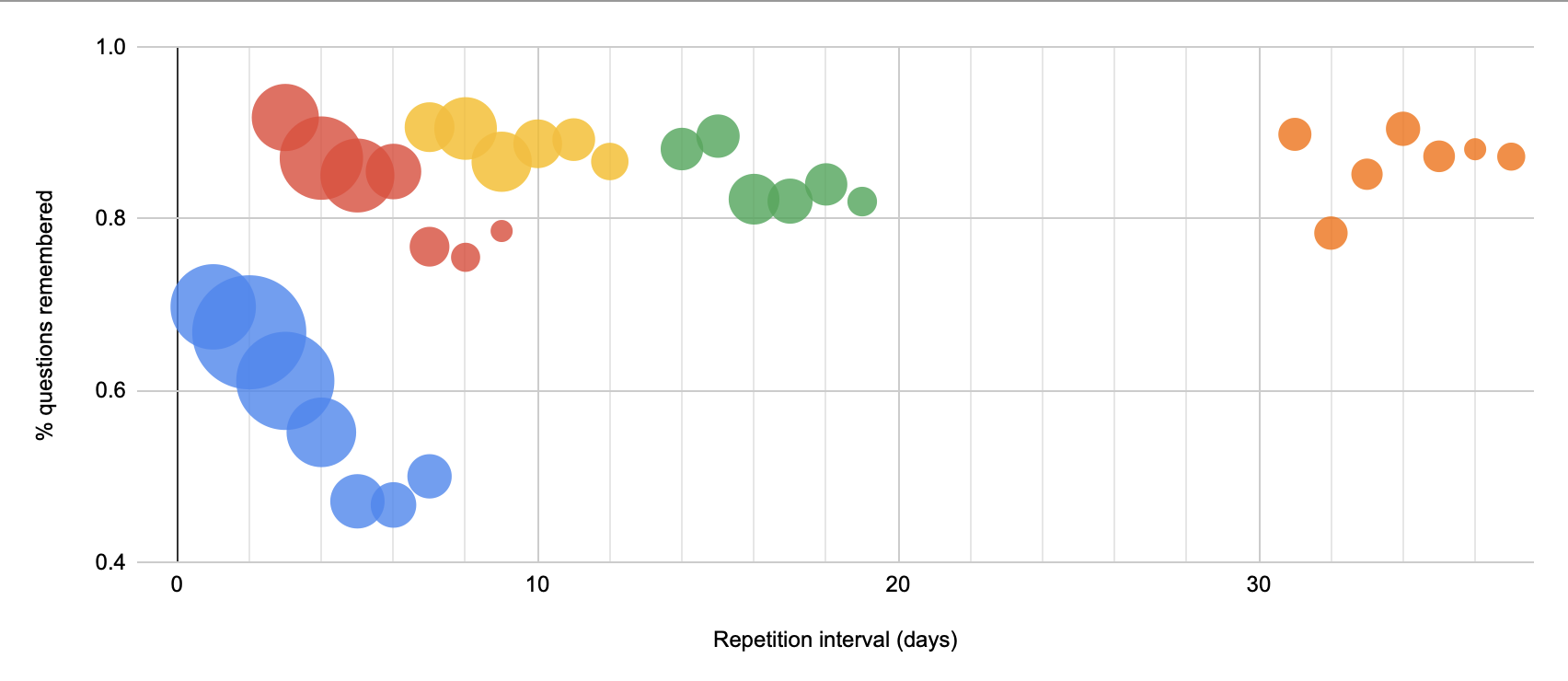

Averaging across all responses, for prompts forgotten during initial read (20211011120323):

- 1 week (79 readers, 626 reviews): 84%

- 2 weeks (73 readers, 447 reviews): 77%

- 1 month (57 readers, 341 reviews): 69%

- 2 months (27 readers, 138 reviews): 56%

Basically the same as a month ago, with a chunk more data.

The trouble with those first numbers is that they’re mixing in the next-day reviews for forgotten prompts, which will damp out variation. So, adapting that last query to include questions which were remembered initially:

- 1 week (131 readers, 6476 reviews): 85%

- 2 weeks (133 readers, 5642 reviews): 84%

- 1 month (87 readers, 4302 reviews): 82%

- 2 months (46 readers, 1609 reviews): 80%

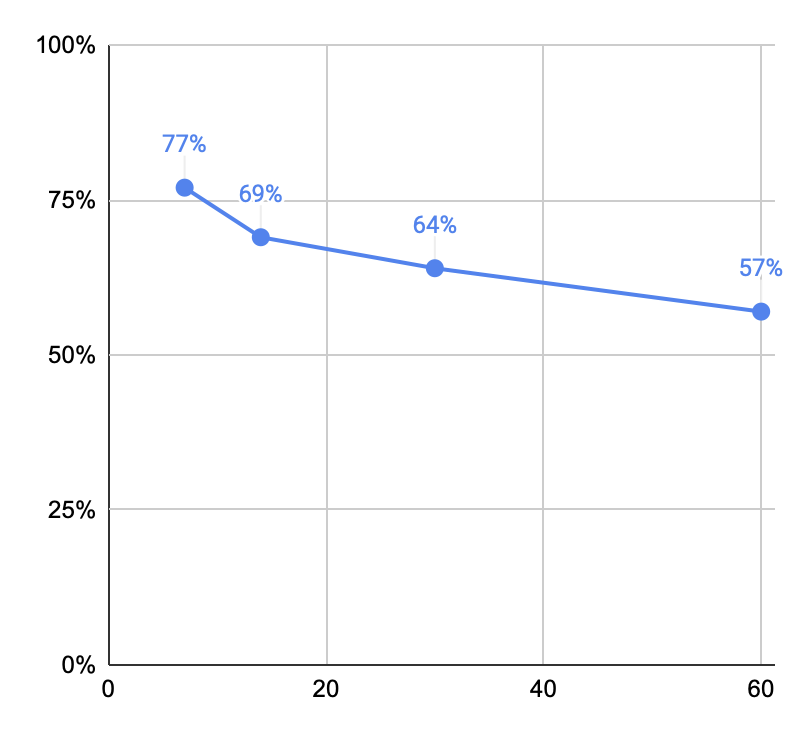

For the hardest ten questions, in terms of in-essay accuracy (20211116152859):

- 1 week (76 readers, 420 reviews): 77%

- 2 weeks (61 readers, 338 reviews): 69%

- 1 month (51 readers, 291 reviews): 64%

- 2 months (28 readers, 118 reviews): 57%

Aha! A real curve. Worth noting that many of these respondents got an extra repetition in (because of the post-forgetting review).

And for the easiest ten (20211116163825):

- 1 week (115 readers, 681 reviews): 95%

- 2 weeks (120 readers, 649 reviews): 95%

- 1 month (77 readers, 443 reviews): 95%

- 2 months (42 readers, 181 reviews): 93%

Basically flat forgetting. Right. So for these questions, we might as well push the first reviews out for months.

Well, maybe. Interestingly, the “easiest” question is

‘After we measure a state $\alpha|0\rangle+\beta|1\rangle$ in the computational basis, is it still in the state $\alpha|0\rangle+\beta|1\rangle$?

It’s worth asking: is retrieval practice really the purpose of this question? Or is it more that these are Salience prompts? Is it a “reminder”—i.e. hey dummy, measurement is destructive! Here’s another theory: the phrasing of this question may cue successful retrieval… but if we’d asked some other question which only incidentally required this knowledge, we might see quite a lot of variation in memory. I suppose this comes back to Retrieval practice and transfer learning.

We can measure, per-question, the size of the delta between the first and last. 20211116172650 Hm… only 40 samples or so per question. Pretty noisy, though 3/4 of questions decline over time.

I wonder if the bottom questions here are so flat because those questions are so extreme that they’re mostly measuring reader properties (prior knowledge, reading diligence), rather than incremental forgetting-over-time.

Does this get clearer if I filter on initial success? Not really.

I thought: maybe I can use the original schedule data to get an extra data point at 1 day for these hard prompts. Interestingly, that also shows 78%. And… 5 days shows 56%?! I think something odd is going on there—I don’t believe that number.

Likewise looking at the original schedule across all prompts at first repetition, we see an accuracy of 89% at one day and 82% at five (again, an odd dip here) 20211116182507. So, roughly, from 90% to 80% across two months. Wild. And for the easiest ten prompts, we see 96% at one day and 95% at five days 20211116182559. Almost no drop across the two month period.

How rapidly does this effect disappear? Taking cards in difficulty 10-20 (again in terms of accuracy at first essay), I see 20211116182921:

- 1 day: 81%

- 5 days: 69% (again… I don’t trust this)

- 1 week: 74%

- 2 weeks: 79%

- 1 month: 72%

- 2 months: 71%

I’d describe this as the effect mostly disappearing for these prompts.

How sensitive is this to my choice of in-essay “hardest” ranking? Taking the ten lowest accuracies at first review, I get 20211116183103:

- 1 day: 62%

- 5 days: 45% (again, I don’t trust this)

- 7 days: 58%

- 2 weeks: 58%

- 1 month: 54%

- 2 months: 44%

OK, so a pretty similar decline, albeit starting from a much lower position.

Arguably, the interval isn’t really what matters here. Waiting an extra month and a half lowers accuracy by 14%… that’s like one and a half fewer questions remembered on average. Not a big difference at all. The big effect here, really, is repetition! Maybe it really doesn’t matter so much when you review—just that you review. Or, well, that’s a hypothesis.

So another way to look at these schedules is: you’re trying to get to the point where you have a 90% chance of actually remembering the answer to the question…

Just realized I can use the same method to look at forgetting rates for original schedule users on originally-forgotten prompts at 1 day: 89%. So we have 89% falling to 56% two months later. OK. Solid. 20211116190100 Interestingly, I see 89% for prompts remembered in-essay, too 20211116190541. So these curves converge at 1 day.

2021-10-11

Checking in again on 2021-04 Quantum Country schedule experiment a couple months later via 20210708114116.

20th/50th/80th percentile accuracies at first review:

- 2021-04-A (1 week, N=22): 79% / 83% / 93%

- 2021-04-B (2 weeks, N=20): 74% / 85% / 91%

- 2021-04-C (1 month, N=11): 70% / 86% / 95%

(2 months only has 3 users—pretty clear that destroyed retention… and likely that 1 month did too… worth exploring/quantifying)

Conditioned on first answer correct? 20211011111522

- 1 week: 81% / 85% / 94%

- 2 weeks: 79% / 87% / 93%

- 1 month: 75% / 84% / 95%

And conditioned on first answer incorrect? I haven’t actually run the numbers, but the deltas here are small enough that I doubt they matter a huge amount.

One more idea: control for selection effects to some degree by lumping everyone together? Yeah, OK:

- 1 week (N=151): 82%

- 2 weeks (N=130): 83%

- 1 month (N=127): 79%

- 2 months (N=133): 75%

The effect should be magnified if I exclude prompts forgotten in the essay, since those ISIs are the same across all groups.

Well… hm. 20211011112217

- 1 week (N=102): 85%

- 2 weeks (N=85): 86%

- 1 month (N=63): 83%

- 2 months (N=43): 83%

The trouble here is that, apparently, most of the users in the prior set were showing up for their missed-question reviews, but not returning. And the selection effects are stronger for the longer intervals—makes sense. But what this means is that anticipated effects of increased forgetting are offset by more intense selection effects. Guh.

I could compare only those 1-week people who actually stick around for 1 month, but it’s not clear that this is a fair comparison: after all, they’ll have spent much more time on net.

Alright. What if I focus on prompts forgotten during the essay, looking at the first review after the “re-review” session? This looks somewhat more reasonable. 20211011120323

- 1 week (66 users, 553 reviews): 84%

- 2 weeks (63 users, 406 reviews): 75%

- 1 month (48 users, 284 reviews): 67%

- 2 months (17 users, 120 reviews): 55%

This is pretty remarkable.

This data supports Quantum Country users seem to forget most prompts quite slowly for the case of questions answered correctly while reading the essay, but not for those forgotten. Still quite surprising.

Can it just be people lying? I don’t think this data can support that: the median user marks 14-17% of their prompts as forgotten in the first review. So they’re not grossly lying, and there is some variation. The question is why the variation isn’t bigger.

OK. Well… hm. This really needs more thought if I’m going to do anything with it.

Also quite striking that since April, only 75 readers have collected and reviewed all prompts at least once. That’s… a very low rate. So low that I’m unlikely to be able to really use QCVC as a passive observatory. I need to understand which parts of the funnel are changing here. Is it just that we’re getting less traffic? Or is it really that people are abandoning to a greater extent? Even if all cohorts behaved like the 1 week cohort, it still wouldn’t be that many people for five months!

2021-07-19

I’m concerned that with 2021-04 Quantum Country schedule experiment, a lot of people are having the following unfortunate experience: they forget five or six questions on their first read through… then have a session due the next day… and they forget two in that session… and then they have another session due! Is this happening?

- How many questions do people tend to forget in their first read, anyway?

- OK, a lot! 20/50/80 is 4/12/23! 20210719095848

- How many already-forgotten questions do experimental-schedule people forget in their first session?

- 20/50/80 is 1/10/25! (N=114) 20210719100936

- And so, presumably, they get stuck in a sequence of reviewing the things they’ve forgotten.

- Not actually that bad. Before first long break, 70% of users have only 1 session. Only 20% have 3+. That’s still a large-ish chunk, I guess. Batching is saving us, I suppose.

- But people are forgetting after their first long session, so 20/50/80 total short sessions after that is 0 / 2 / 4. Note that this is a total count: it includes the short sessions before the first session too. Ohh… and, there’ll also be short sessions here because you’re going through all the prompts, and there’s a cap. So this isn’t really worrisome.

- So in total, before the second long review break, most people have <= 2 short sessions, and 80% of people have <= 4. That seems… fine, I guess. I’m feeling disinclined to fiddle with this for now.

Checking in on 2021-04 Quantum Country schedule experiment today. First crack: 20210708114116